I just installed RT 5.8 on Windows 10. I have 24 MP raws from a D750 (which is great, btw!). Since I don't have an 8K screen, only a 4K one, I can only view part of the file at 100% (for denoise, sharpening). If I drag the viewing window around, RT seems to recalculate every part of the file every time, even though it calculated the same part of the raw file just 10 seconds before. If it could cache the active view, this would greatly benefit the experience. Even better: use the time when I am looking at the image to process the parts of the file that are not shown, instead of leaving the CPU idle! Why not? On my i7-8700K (32 GB DDR4), the 100% preview on a 4K screen is slow. (My next machine will be an R9-3950X or similar; a standard i7 seems generally too slow for me…)

Hello & welcome! Most of us don’t have hardware this nice! I think it is a generally accepted workflow to leave denoise until the end.

This is what Filmulator does, it computes a small preview resolution image first and then does the full-res image so you get 60fps zooming and panning on the full-size image even on a 4K monitor.

It also enables instant exports since all of the data is already calculated.

I’ve always wondered why other photo editors don’t do this.

This is what most commercial software does as well. I know at least Capture One, Lightroom, and ACR all cache adjustments. This method really shines with some newer cameras that are pushing resolution into the 60 MP to 100+ MP range. Most people do not use these, so as long as you have decent hardware it tends not to be a major issue, but not having to compute the entire pipeline on every adjustment could indeed speed things up.

Despite this it is still a good practice to denoise last due to some adjustments introducing noise to begin with.

There is already some caching involved in RawTherapee if I’m not mistaken. The problem for more advanced caching may be memory constraints. It’s also a matter of what to cache and when. If you have a complicated history of edits, do you want to cache all intermediates? Only the last five? What would you expect as a user?

This has been a constant debate over the years, and to my knowledge never has there been a winner

But in RT you can denoise near the end of the pipeline with the Wavelet Levels tool. Documentation will be updated soon

> This has been a constant debate over the years

Same could be said about caching, which has been so aggressive in PS / LR that people have had to turn it down. Besides the GTK3 kerfuffle, RT has always been zippy.

RT already caches some data:

- the whole decoded raw file is cached (takes width * height * 4 bytes)

- the demosaiced raw file is cached (takes 3 * width * height * 4 bytes)

- the result of Capture Sharpening is cached (takes 3 * width * height * 4 bytes)

That means ~2.8 GB of data are already cached for a single 100 MP raw. What if you use METM (Multiple Editor Tabs Mode), where you can open more than one editor…
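To make the arithmetic above easy to check for other sensor sizes, here is a small sketch. It only encodes the three figures quoted in this post (4 bytes per value, one plane for the decoded raw, three planes each for the demosaiced image and the Capture Sharpening result). The function names are made up for illustration and are not RawTherapee code, and the 10000 x 10000 frame is just a round stand-in for "100 MP".

```python
BYTES_PER_VALUE = 4  # figure quoted in the post above


def cached_bytes(width, height):
    """Bytes held by each cached stage, using only the sizes listed above."""
    pixels = width * height
    return {
        "decoded_raw": pixels * BYTES_PER_VALUE,
        "demosaiced": 3 * pixels * BYTES_PER_VALUE,
        "capture_sharpening": 3 * pixels * BYTES_PER_VALUE,
    }


def total_gb(width, height):
    """Total cached size in GB (1 GB = 1e9 bytes)."""
    return sum(cached_bytes(width, height).values()) / 1e9


# Hypothetical 100 MP frame (10000 x 10000 is a stand-in, not a real sensor).
print(f"100 MP: {total_gb(10000, 10000):.1f} GB")

# Nikon D750 frame size (6016 x 4016), the camera from the first post.
print(f"D750:   {total_gb(6016, 4016):.2f} GB")
```

So even a 24 MP file already keeps roughly two thirds of a gigabyte around per open editor under these assumptions.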

The point in time at which you enable denoise in RT does not affect the output. The result will always be the same, as denoise is executed at a fixed position in the toolchain.

I agree RT is very snappy. Very well engineered. Ever since I moved to Windows I have had none of the performance issues I had on Mac. I blame that system's hardware, which was underpowered for its 4K screen.

I do see your point there. I never work on more than one image at a time, though I could, but not everyone has 32 GB of RAM to play with. I also see no performance issues with RT to begin with. I think part of what helps the most is that certain things are only rendered at 1:1.

This makes sense. I did not realize denoise was done early in the toolchain. I still tend to save it until near the end of my workflow, as leaving it off makes the 1:1 renders faster.

I never considered the scheme I use in rawproc to be “caching”, but when I read this thread it has some relevance. After opening a raw file, rawproc presents the user with a “best-effort” depiction of the raw data, and an empty toolchain. It is then up to the user to add tools in whatever order they deem prudent to make a proper rendition for display and export.

Each added tool has its own copy of the image, processed according to the tool’s parameters, starting with the image from the previous tool in the chain. If that tool is selected for display, that’s what is thrown upon the screen. For export, the result of the last tool in the chain is always used for producing the export rendition.

This approach eats a lot of memory, but it makes the result of the tool selected for display immediately available, well, subject to the display color and tone transform. I’ve got a bit of logic to manage the state of processing at each tool as other tools change their processing, with the objective of only having to re-process tools between the change and the display-selected tool.

So, each tool is really a cache of its processing. I definitely do not recommend this approach for other software, just laying it out for cogitation…
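For anyone who wants to cogitate with something concrete, here is a minimal sketch of the idea described above: every tool keeps its own result, a parameter change invalidates that tool and everything after it, and only the tools up to the display-selected one are re-run. This is not rawproc's actual code. The names `Tool`, `ToolChain`, `set_param` and `result` are hypothetical, and a list of floats stands in for real pixel data.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Image = List[float]  # stand-in for real pixel data


@dataclass
class Tool:
    name: str
    func: Callable[[Image, float], Image]
    param: float
    cached: Optional[Image] = None  # this tool's own copy of the image


class ToolChain:
    def __init__(self, source: Image):
        self.source = source
        self.tools: List[Tool] = []
        self.runs = 0  # number of tool executions, to show what is skipped

    def add(self, tool: Tool) -> None:
        self.tools.append(tool)

    def set_param(self, index: int, value: float) -> None:
        self.tools[index].param = value
        # The changed tool and everything downstream of it are now stale.
        for tool in self.tools[index:]:
            tool.cached = None

    def result(self, index: int) -> Image:
        """Image after tool `index`; only stale tools up to it are re-run."""
        image = self.source
        for tool in self.tools[: index + 1]:
            if tool.cached is None:
                tool.cached = tool.func(image, tool.param)
                self.runs += 1
            image = tool.cached
        return image

    def export(self) -> Image:
        """Export always uses the last tool in the chain."""
        return self.result(len(self.tools) - 1)


chain = ToolChain([1.0, 2.0, 4.0])
chain.add(Tool("exposure", lambda img, p: [v * p for v in img], 2.0))
chain.add(Tool("offset", lambda img, p: [v + p for v in img], 1.0))
chain.add(Tool("clip", lambda img, p: [min(v, p) for v in img], 8.0))

first_export = chain.export()   # all three tools run once
chain.set_param(1, 2.0)         # "offset" and "clip" become stale
shown = chain.result(1)         # display "offset": only that tool re-runs
```

The memory cost is one full image per tool, which is exactly the trade-off mentioned above.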

To give you perspective, my system is as low-spec as you can imagine. Forget 32GB RAM. I have 4GB (and had 2GB for the longest time).

Hint: detail windows are magic.

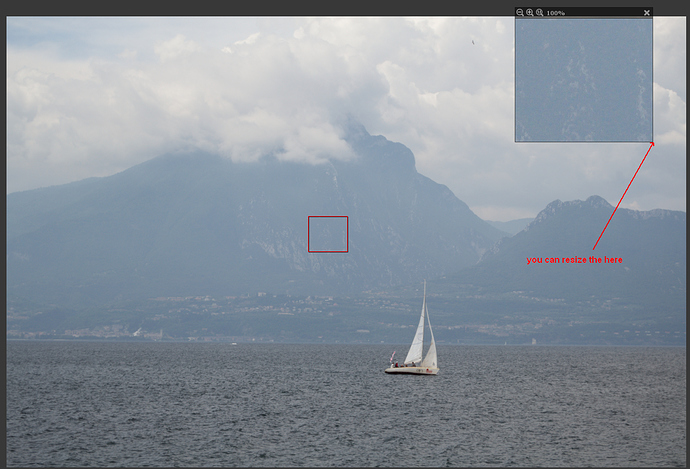

Yes they are, I love those things. I just wish you could alter the region size. Maybe you can and I just have not figured it out yet.

This makes sense.

Guys, I've been using RT for a long time. The toolchain order is set by the software. You can only define parameters for the algorithms or enable/disable individual tools, but the profiles look the same no matter in what order you used the tools. That is very wise.

I think people are missing the point.

The question is why does RawTherapee not cache the end result so you can pan and zoom freely without waiting for computation?

CarVac, thanks, that is the main question! An end-result cache would consume … let me think… 70MB of RAM or the like…

If I’m going on this report by @Hombre, currently only the previewed part is actually calculated. So naturally, the preview window needs to be recalculated each time you move it.

To change this behavior may be very useful, but also very complicated.
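One way to picture what such a change might involve is a tile cache: the image is split into fixed-size tiles, rendered tiles are kept in a size-limited LRU store, and panning only renders tiles not seen recently. The sketch below is purely illustrative and is not how RawTherapee works internally. `TileCache`, `render_tile`, the 256 px tile size and the 64-tile limit are all assumptions. It also skips the hard parts, for example tools that need pixels from neighbouring tiles.

```python
from collections import OrderedDict

TILE = 256  # hypothetical tile edge length in pixels


class TileCache:
    def __init__(self, render_tile, max_tiles):
        self.render_tile = render_tile  # callable (tx, ty) -> tile data
        self.max_tiles = max_tiles      # memory budget, counted in tiles
        self.tiles = OrderedDict()      # least recently used tile comes first
        self.renders = 0                # number of real renders performed

    def get(self, tx, ty):
        key = (tx, ty)
        if key in self.tiles:
            self.tiles.move_to_end(key)  # mark as recently used
            return self.tiles[key]
        tile = self.render_tile(tx, ty)
        self.renders += 1
        self.tiles[key] = tile
        if len(self.tiles) > self.max_tiles:
            self.tiles.popitem(last=False)  # evict the least recently used
        return tile

    def invalidate(self):
        """Any parameter change makes every cached tile stale."""
        self.tiles.clear()

    def view(self, x, y, width, height):
        """Tiles covering the viewport whose top-left corner is (x, y)."""
        tx0, ty0 = x // TILE, y // TILE
        tx1, ty1 = (x + width - 1) // TILE, (y + height - 1) // TILE
        return [self.get(tx, ty)
                for ty in range(ty0, ty1 + 1)
                for tx in range(tx0, tx1 + 1)]


# A fake renderer that just returns the tile coordinates.
cache = TileCache(lambda tx, ty: (tx, ty), max_tiles=64)
cache.view(0, 0, 512, 512)     # renders 4 tiles
cache.view(256, 0, 512, 512)   # pan right by one tile: 2 new tiles
cache.view(0, 0, 512, 512)     # pan back: everything is already cached
print(cache.renders)
```

The `invalidate` call is where it gets complicated in practice: every slider move would throw the whole cache away, so the benefit is mostly for panning and zooming between edits.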

That’s interesting. I have quite a beefy computer and it would be nice to see more of the hardware being used, although I am already quite satisfied with how it parallelizes on my CPU.