That’s nice! How did you implement it? I have been thinking that it would be really nice to just paint the adjustment EV value directly, without a mask!

Internally, it’s a 50% grey surface, and you draw with a black and white pen. The surface itself is hidden, but passed to the shader as an exposure adjustment.
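To make the "grey surface plus black/white pen" idea concrete, here is a minimal sketch of the painting side. It assumes a plain nested-list mask instead of the real QML surface, and the names `make_mask` and `paint_dab` as well as the linear brush falloff are hypothetical choices for illustration, not the project's code.

```python
def make_mask(width, height, neutral=0.5):
    """Create a hypothetical mask filled with 50% grey (0.5 = no exposure change)."""
    return [[neutral] * width for _ in range(height)]


def paint_dab(mask, cx, cy, radius, target, opacity=1.0):
    """Blend a round dab toward `target` (1.0 = white pen, 0.0 = black pen).

    Hypothetical helper: the weight falls off linearly from the dab centre,
    and pixels farther than `radius` from the centre are left untouched.
    """
    height, width = len(mask), len(mask[0])
    for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
        for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
            dist = ((x - cx) ** 2 + (y - cy) ** 2) ** 0.5
            if dist > radius:
                continue
            # Full strength at the centre, weaker toward the edge.
            weight = opacity * (1.0 - dist / (radius + 1))
            mask[y][x] += weight * (target - mask[y][x])
    return mask
```

A brush stroke would then be a series of `paint_dab` calls along the pointer path, and the resulting mask is what gets handed to the shader.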

I’m still experimenting with different mappings from black-to-white values to exposure changes. Shifting the value range from 0-1 to 0.5-1.5 and raising it to some power seems to work well as an exposure multiplier, but I’ll play around with it a bit more. Something log-scale (e.g. 0.5-2.0 raised to some power) might work better.
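It helps to look at the linear-shift mapping in stops. This sketch implements `(s + 0.5) ** gamma` as described above; the function names are hypothetical.

```python
import math


def linear_shift_multiplier(s, gamma=1.0):
    """Map mask value s in [0, 1] to (s + 0.5) ** gamma, so s = 0.5 gives 1.0."""
    return (s + 0.5) ** gamma


def in_stops(multiplier):
    """Express an exposure multiplier in stops (EV)."""
    return math.log2(multiplier)


for gamma in (1.0, 2.0):
    lo = in_stops(linear_shift_multiplier(0.0, gamma))
    hi = in_stops(linear_shift_multiplier(1.0, gamma))
    print(f"gamma={gamma}: {lo:+.2f} EV .. {hi:+.2f} EV")
# prints:
# gamma=1.0: -1.00 EV .. +0.58 EV
# gamma=2.0: -2.00 EV .. +1.17 EV
```

The black end darkens more than the white end brightens. Since log2(x ** gamma) = gamma * log2(x), the power scales both ends by the same factor and cannot remove that imbalance. A log-scale range such as 0.5-2.0 is symmetric in stops.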

vkdt has had that for some time too. It works well with guided filters (see the vkdt docs page "draw: raster masks as brush strokes"), and it supports pressure if you have a pen tablet.
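I don't know how vkdt implements this internally, so the following is only the standard guided filter, reduced to 1-D lists for readability. It shows how a roughly painted mask can be made to follow edges in the image used as the guide. The names `box_mean` and `guided_filter_1d` and the default `r`/`eps` values are assumptions.

```python
def box_mean(values, r):
    """Mean over a window of radius r; the window shrinks at the borders."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - r), min(n, i + r + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def guided_filter_1d(guide, mask, r=2, eps=1e-3):
    """Smooth `mask` while following edges in `guide` (standard guided filter, 1-D)."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(mask, r)
    corr_ip = box_mean([g * m for g, m in zip(guide, mask)], r)
    corr_ii = box_mean([g * g for g in guide], r)
    # Fit a local linear model mask ~ a * guide + b in every window.
    a = [(cip - mi * mp) / (cii - mi * mi + eps)
         for cip, cii, mi, mp in zip(corr_ip, corr_ii, mean_i, mean_p)]
    b = [mp - ai * mi for ai, mi, mp in zip(a, mean_i, mean_p)]
    # Average the models of all windows that cover each sample.
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)
    return [ma * g + mb for ma, mb, g in zip(mean_a, mean_b, guide)]
```

A larger `eps` gives a smoother mask. A smaller one makes the mask cling more tightly to edges in the guide.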

Oh, that’s a great idea! Thank you!

Maybe try mapping your [0-1] to [-1, 1] and then use it AS the power.

With p for pixel value and s for strength, painted as you described with 0.5 as default value:

out = p * 2 ^ (2 * s - 1)

Then you get the log behavior easily!

That is at least how I have been thinking about doing it! The maximum effect can easily be adjusted by either scaling the exponent range or changing the base.
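The suggested formula `out = p * 2 ^ (2 * s - 1)` is easy to sketch. Here `max_stops` is a hypothetical parameter for scaling the exponent range, and the function names are illustrative only.

```python
def exposure_multiplier(s, max_stops=1.0):
    """Map strength s in [0, 1] (0.5 = neutral) to a multiplier of +/- max_stops EV."""
    return 2.0 ** (max_stops * (2.0 * s - 1.0))


def apply_exposure(pixels, mask, max_stops=1.0):
    """out = p * 2 ** (max_stops * (2s - 1)) for each pixel p and mask value s."""
    return [p * exposure_multiplier(s, max_stops) for p, s in zip(pixels, mask)]


print(apply_exposure([0.2, 0.2, 0.2], [0.0, 0.5, 1.0]))
# prints: [0.1, 0.2, 0.4]
```

With `max_stops=1` this is exactly the formula above. It equals 0.5 * 4 ** s, which is the 0.5-2.0 log-scale range mentioned earlier. Scaling the exponent by k is the same as switching to base 2 ** k, because 2 ** (k * x) == (2 ** k) ** x, so the two knobs are interchangeable.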

Good idea, thank you! I have very limited time to work on this, between the kids going to bed, and my own bedtime. The last session was spent mostly on the drawing and shader hookup. Making it actually usable will come next.

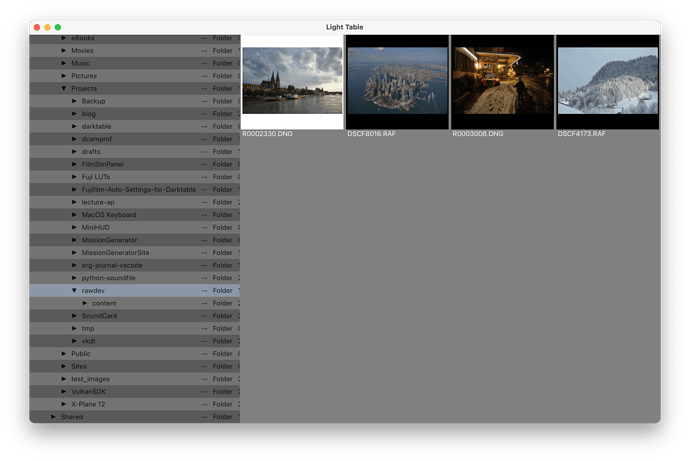

After another fruitful evening of hacking, we now have a very rudimentary file browser. As with most parts of this program, it’s still very much unpolished and in a rough state. But I can now browse a directory full of raw files and double-click to open them in the darkroom. Another step closer to a usable raw editor.
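As a rough idea of the directory scan behind such a browser, here is a minimal `pathlib` sketch. The function name `list_raw_files` and the set of raw extensions are my assumptions, not the project's code.

```python
from pathlib import Path

# Assumed set of raw file extensions; extend it for other cameras.
RAW_EXTENSIONS = {".arw", ".cr2", ".cr3", ".dng", ".nef", ".orf", ".raf", ".rw2"}


def list_raw_files(directory):
    """Return the raw files directly inside `directory`, sorted by path.

    Hypothetical helper: extensions are matched case-insensitively, and
    subdirectories are skipped even if their names end in a raw extension.
    """
    return sorted(
        p for p in Path(directory).iterdir()
        if p.is_file() and p.suffix.lower() in RAW_EXTENSIONS
    )
```

The resulting list could feed a thumbnail grid, where a double-click passes the selected path on to the darkroom.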

At the moment, the light table is its own window. This is pretty convenient on a two-monitor setup. But we’ll see if it remains this way. A unified interface might make more sense.

I chose PySide/QML as my technologies, as I was somewhat familiar with them and they allow for rapid prototyping. But this remains a double-edged sword. On the one hand, PySide/QML allows for very fast iteration and comes with loads of infrastructure that just works. On the other hand, the complete lack of a debugger is a bit of a nuisance, to say the least. And the interaction between JavaScript/QML and Python/PySide is a bit on the awkward side as well. But I do enjoy the fact that I can have a (somewhat) usable raw developer in fewer than 3k lines of code.

Interestingly, I used Copilot AI extensively at the beginning of the project (mostly for translating code between programming languages, and for some scaffolding), but its usefulness has diminished significantly as the project grew. This was more or less my first try with an AI assistant. At this point, it’s still a nice tool to have for the occasional repetitive line, but it no longer feels like a massive advantage.

And there’s not a day where I don’t think about when to open source this. But there are always more essentials to fill in before I want to think about the cleanup necessary for a release. But fear not, it will come eventually.

As someone who can only do some basic HTML, Visual Basic and somewhat complex regex searches, I’m in awe of projects like this. I wouldn’t even know where to begin.

So, great job @bastibe and good luck. I’ll be watching with interest from the sidelines.

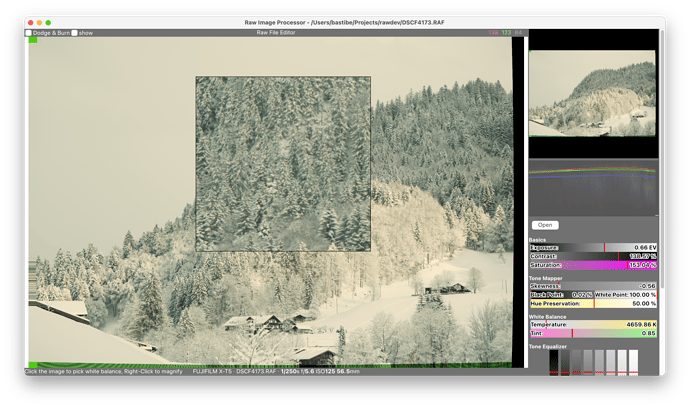

Life got in the way a bit, leaving less time for hacking. But several small improvements still happened: Right-dragging on the image now opens a 100% magnified view, and the light table browser is more usable now. That magnified view is oddly satisfying to use.
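For a 100% loupe, the main calculation is which rectangle of the full-resolution image to show under the cursor. This sketch assumes the image is drawn at the view's top-left corner with a uniform `view_scale` (displayed pixels per image pixel) and ignores HiDPI scaling. `loupe_rect` and its parameters are hypothetical.

```python
def loupe_rect(cursor_x, cursor_y, view_scale, image_w, image_h, loupe_w, loupe_h):
    """Source rectangle (x, y, w, h) in image pixels for a 1:1 loupe at the cursor.

    Hypothetical helper. view_scale is displayed pixels per image pixel,
    e.g. 0.25 when a 6000 px wide image is fit into a 1500 px wide view.
    """
    # Convert the cursor position from view coordinates to image pixels.
    cx = cursor_x / view_scale
    cy = cursor_y / view_scale
    # At 100%, one image pixel is one screen pixel, so the rectangle is loupe-sized.
    w, h = min(loupe_w, image_w), min(loupe_h, image_h)
    # Centre on the cursor, then clamp so the rectangle stays inside the image.
    x = min(max(round(cx - w / 2), 0), image_w - w)
    y = min(max(round(cy - h / 2), 0), image_h - h)
    return x, y, w, h


print(loupe_rect(750, 500, 0.25, 6000, 4000, 400, 300))
# prints: (2800, 1850, 400, 300)
```

During a right-drag, this would be recomputed on every mouse move, and only that crop would need to be rendered at full resolution.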

My current idea is that there won’t be a way to zoom into the image beyond the magnification loupe. Pixel peeping needs to be abolished! (Or I’ll just change my mind later on. That’s also entirely plausible.)

Also, you can tell from the artifacts around the image that we don’t crop away the distortion-corrected corners yet.

Hello again,

it’s been a while.

I’d love to say that this project has still kept me up at night. I was working towards getting this ready to edit a batch of photos, but things got hectic and the program wasn’t ready, so I used Darktable instead.

And you know what, Darktable was just a joy to use. And using it made me realize just how many things I’d still need to implement to get this into a usable state. With life being very busy at the moment, that just wasn’t going to happen any time soon.

Heck, it took me almost three months just to switch the repo to public:

Still, this experiment has taught me a ton of things, and was great fun to work on! Not least of all, I got a whole new appreciation for Darktable and its algorithms. It truly is a marvel of engineering and artistry!

This is not the end. If you’d like to join the fun, feel free to collaborate on the program. I’ll support you in any way I can. But for the time being, I’m not actively working on the program myself. Who knows, perhaps when the days grow long next winter I’ll take another stab at it.

I suppose there is another plausible timeline in which I’d use the new Copilot Agent and have it implement all the stuff I’m too lazy to do myself. But frankly, that would run completely counter to the intention of the project and the ethos of open source development in general. So I won’t.