https://www.reuters.com/technology/microsoft-openai-planning-100-billion-data-center-project-information-reports-2024-03-29/

One estimate on LinkedIn gave a power consumption equivalent to that of about 2.5 million homes.

A few years ago there was this huge spamming of YouTube Kids content. Turns out multiple studios in third-world countries had produced hundreds of animated films using some cheap 3D game engine, mashing together all the popular kids' keywords, SEO-farming style. So you’d have Elsa, Spiderman and SpongeBob doing some weird shit together. I turned on one where thousands of Spidermans were standing on a train track and being run over. Some were even more off-putting and violent.

I doubt AI will be more efficient than they were.

They aren’t giving up.

“1. Create a tech substitute for an existing industry. 2. Back this with venture capital funding so deep that massive losses can be sustained for years and years. 3. Aggressively compete against an existing industry which cannot afford to operate at a loss for extended periods of time. 4. The existing industry is undercut until it falters or outright fails. 5. The disruptor(s), now having captured the market for the given industry, raise prices and reduce services to achieve profitability.”

Must admit I find the proliferation of generic A.I. illustrations on web content pretty nausea-inducing. I’m sure it can be used in a better way, but the reality is that most people are going to churn out sickly, uncanny-valley art, and that will dominate.

You know it as soon as you see it. Like this:

While I generally and vehemently agree with the article, one paragraph struck a note that didn’t quite ring true to me:

A.I. has no intelligence. It does not “think”. It is a predictive text program that simulates human expression by ingesting unfathomable amounts of data and trying to replicate that data. It does not know and can not know what meaning its outputs have. Further, it has no desire and no emotion to motivate action or decisions. It simply runs a program and assembles pixels or words to match what seems most like other correct pixels and words in its vast data set.

Obviously, matrix multiplications can’t have emotions, but neither can nerve cells. From all we have seen in recent months, it seems pretty clear to me that these deep neural nets have quite a decent simulation of desires, motivations and reasoning abilities. Are these simulations “inferior” to our own? Are they “less real”? More importantly, are they any less than the disenfranchised apathy of a third-world wage slave in an outsourcing sweatshop?

Philosophically, I don’t think so. I think we ourselves are also a mere “simulation” of an emotional, intelligent being, in the sense that we’re a sort of software construct running on a sort of computational substrate, but complex enough to be more than the sum of its parts. We are a bunch of nerve cells and supportive wetware pretending to be a person. Quite convincingly so, I might add. But we are not intelligent, we (sometimes) express intelligence. As do LLMs, to some extent.

What the author is really complaining about is bad, cheap art, and outsourced labor taking their job. I forget who put it succinctly: “the only people in danger of AI are prompt-completers themselves”. The danger of LLMs, I think, should mostly be viewed as just another form of outsourcing. But denigrating it as “not intelligent” with “no emotion” perhaps misses the point.

Spot on. In a way, for the general population, their entire lives are already filled with manufactured, “soulless” products, media and ideas. Unless we make it ourselves, or go out of our way to seek products created by people with complete artistic control, be it an auteur, musician, industrial engineer, journalist, etc., we will generally be handed the generic “slop” product that’s just mass-produced to make money or further someone’s interest. Not that these generic products can’t be good or even have something real or honest to them, but they rarely do.

Quite a strong deterministic worldview. Whilst I mostly agree, it’s always difficult accepting our lack of free will and the consequences it brings…


I can’t even…

You may be right in the economic consequences of AI, but I think you are conflating product with process when you say AI creations are just as ‘real’ as the ones we make ourselves.

True, AI art may look convincing, so much so that you could conclude it is equivalent to the work we make ourselves. But underneath all that, it is just arranging pixels on a grid according to a lot of very complex statistical relationships. That is fundamentally different from what we do as artists.

The AI may ‘know’ that to create an image of a happy child it should arrange a cluster of flesh-toned pixels in such a way as to produce a u-shaped opening in the lower portion of the pixels corresponding to the child’s head. But it doesn’t have any understanding of what a smile is, or represents, or what it might suggest to the viewer; only that it is a shape correlated with the phrases in the prompt. And that shortcoming will ultimately limit the capacity of AI to do anything truly original, or to develop genuine insight: it will always be constrained to reproduce variations on what has come before.

That may be so, but the neoliberal system we live in has been extraordinarily successful in degrading an ever increasing proportion of our labour into prompt-completion, in order to better isolate and control workers on the one hand, and constrain the narratives that dominate our world view on the other. AI is another step down this path.

Your position is that AI will only be a risk to people producing bad, cheap art. But these aren’t the people calling the shots, they’re the ones in the line of fire. It’s the people paying for the bad, cheap art: the bosses that decided that spending $100 for an AI image that is ‘good enough’ is better than paying a photographer $1000 to do something original. We’ve allowed those ghouls far too much control over the world we live in.

I agree completely. My point is that this behavior is the real problem, not AI.

Is it, though? When you’re drawing in Photoshop/Krita, are you not just “arranging pixels on a grid according to a lot of very complex statistical relationships”? Perhaps “statistical” is the wrong word for the chemo-electrical processes in our brains, but at the end of the day we are just pushing pixels by some hidden rules.

I fundamentally disagree. We long held that we’d have to take a machine seriously if it can pass the Turing Test. LLMs do pass the Turing Test. Now what? How can I know that your internal life is as deep and meaningful as my own? After all, I only know the inside of my own mind and can’t experience yours. All I see from you is a few text messages and the odd image. Same as LLMs. If I assume that you are capable of art, I must assume the same of LLMs. (Please don’t be offended. I’m using “you” merely as an example. I’m not trying to imply anything bad about you. I don’t know you, after all.)

If you cut open a human, there is no mind in there. It’s just guts and bones. Inside an LLM, there are matrices and math. (I spent several years implementing these bad boys, so I know their guts fairly intimately.) Why should one be granted personhood and artistic intent, but not the other? I sincerely hope that there’s something about us humans that IS different, because otherwise, we’re currently doing something incredibly cruel to these LLMs.

Fascinating! Where do you see the connection with determinism? Indeed I do tend to think that we’re mostly predetermined in our actions, but the decision process is so hidden that this really makes no difference. We still have to make decisions based on incomplete information, and suffer the consequences. So even if nature is fully deterministic, we do retain a free will (to do as fate has written), if that makes sense. Besides, quantum uncertainty seems to cast into question a fully deterministic world.

The way you spoke about us being just software running on nerve cells and “wetware” is a deterministic view, in my opinion. What do you mean by “as fate has written”? As time passes, there are more and more studies about predictors of behavior, such as the mother’s prenatal condition (like stress) leading to different and predictable behavior in children, the hungry judge theory, and so on, which make one really wonder whether we are in control of our lives at all. Of course, these are all high-level views, and predicting the individual might never be feasible due to the sheer number of variables and the amount of data needed over time.

If the state of all molecules were known at one point in time, I’d wager we could predict the future with total accuracy (disregarding quantum uncertainty). In that sense, I think fate is fully predetermined.

I also agree that we’re relatively predictable animals on a macro level. As I’m getting closer to a management position at work, I am also becoming keenly aware of how easily we are influenced by carefully crafted words.

Although I have to say (coming back to the thread’s topic) that the AI recommendation engines online have seriously let me down in the last few months. YouTube in particular seems to have completely lost track of what I like, making its recommendations almost entirely useless. And don’t get me started on Google.

The AI “uncrop” feature in Photoshop is pretty neat, though it has the usual AI jank: at one point I wanted it to extend some floor tiling, and it insisted on putting a disembodied hand on that piece of floor. Disturbingly. The AI sharpening in Lightroom and Topaz, on the other hand, too often produces unnatural detail, so I’d rather keep the soft photo.

I have noticed it is fairly consistent for me, although it seems a lot more “open” to change than it once did. If I click on a video a friend sent me, it will most likely start sending me recommendations around the topic of that video, even if I only watched it once. I guess the engineers keep trying to tune it to whatever will generate more clicks and this is what we get…

Off-topic here, but that’s one major reason (of several) why known-bad politicians can get reelected term after term after term. It’s all just manipulation.

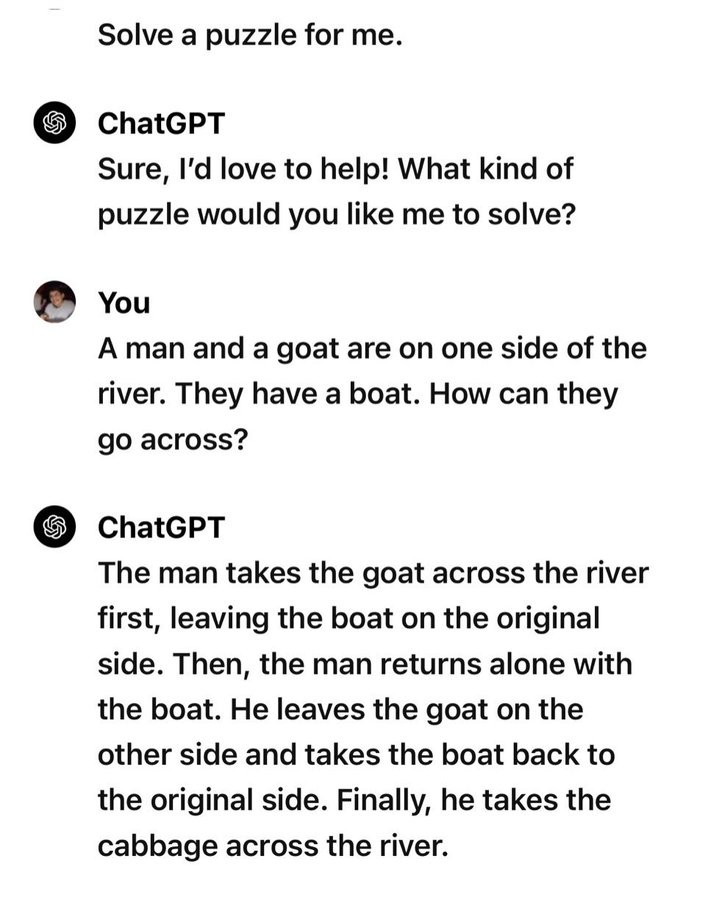

I thought this was a nice example of the difference between providing a statistically plausible product via fancy math as implemented in AI, and what humans do when they actually understand something. Not an image, but instructive nevertheless.

Here’s a Stephen Grossberg interview in which he is pretty sceptical of the abilities of the deep learning models used in LLMs.

A lot of the background he discusses earlier in the interview is way beyond me, but it’s interesting to see a different perspective from a figure of some apparent authority. I find the interviews on The Dissenter (terrible name) podcast, or on the YouTube version here, interesting in general, in case others might enjoy them.

Before exam time, my school teachers drummed into us: “Read the question carefully. Answer the question that is asked, NOT the question you think is being asked, or the question you would prefer was being asked.”

Gerk…

I have to say, I am terribly glad to have gotten out of academic writing and teaching before the advent of AI.

I’d have had to rework my programming lecture entirely for AI-assisted students. I now have a colleague who codes with AI’s help, and I’m not sure whether that’s a boon or a liability. I am particularly worried about their growth as a programmer; it’s fine to be a beginner in the beginning, but one has to grow up. I worry that over-reliance on AI will stunt their growth.

My area of research (audio signal processing) had already been eaten alive by neural network-based methods. Increasingly, it felt like it was the machines that gained knowledge in these publications, not the scientists. Seeing how papers are now written by machines as well, I’m glad I got out when I did. The academic treadmill was enough of a meat-grinder as it was.

In German, “science” translates to “Wissenschaft”, “knowledge-making”. AI does not make knowledge. During the few years I worked on automatic speech recognition, I read a number of papers on the topic and had to (rudely) conclude that the science of AI training in general is in a rough, rough state: practically no reproducibility, no source code releases, just wild claims and shoddy comparison methods. Reading these publications left me flabbergasted, compared to my more rigorous experiences in signal processing.

And yet, I wonder if I’m missing out by not using LLMs in my work. It may be a career-limiting move to ignore these technologies. Or maybe not. Perhaps the organic, artisanal coder will always be in demand, in a sea of all the prompt-completers of the world. I sure hope so. I like my craft.

Interesting, thanks.

IMO the problem with novice programmers using ML-generated code snippets is that it frequently results in code that looks OK (syntactically correct, compiles, runs) but is not necessarily correct, because no deep understanding went into its construction.

Code like this leads to the nastiest bugs, which take a lot of effort to identify and fix, because the code in question will probably work in a lot of cases, and you either have to use extensive randomized test frameworks or go over the code line by line.

This is not to say that humans do not err; they make mistakes all the time. But those mistakes are quite different, and a lack of understanding usually manifests very prominently (e.g. someone who does not understand a language will not be able to code in it at all).
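To illustrate the kind of bug meant here (a hypothetical example of my own, not from any real code base): a leap-year check that only tests divisibility by 4 looks fine and passes the recent years most people would think to try, but it is wrong for century years like 1900. A crude randomized test against a trusted reference, here Python’s `calendar.isleap`, flushes it out quickly. `is_leap_year` and `find_counterexample` are made-up names for this sketch; a property-based testing library such as Hypothesis does the same thing far more thoroughly.

```python
import calendar
import random


def is_leap_year(year: int) -> bool:
    """Plausible-looking but subtly wrong: ignores the century rules."""
    return year % 4 == 0


def find_counterexample(candidate, reference, trials=10_000, seed=0):
    """Compare candidate against a trusted reference on random inputs.

    Returns the first input where the two disagree, or None if none was found.
    """
    rng = random.Random(seed)  # fixed seed so any failure is reproducible
    for _ in range(trials):
        year = rng.randint(1, 9999)
        if candidate(year) != reference(year):
            return year
    return None


if __name__ == "__main__":
    # Hand-picked "typical" inputs all pass, so the bug is easy to miss.
    for y in (1996, 2004, 2023, 2024):
        assert is_leap_year(y) == calendar.isleap(y)

    # Random inputs eventually hit a year like 1900 or 2100.
    bad = find_counterexample(is_leap_year, calendar.isleap)
    print(f"counterexample: {bad}")
```

Any counterexample it finds is a year divisible by 100 but not by 400, which is exactly the case the snippet never thought about.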

The situation is not unlike ML-generated text: it is grammatically correct and uses the appropriate vocabulary, which are signals we associate with experts so we tend to accept it at face value even if it is wrong or meaningless, and figuring out the latter takes a lot of effort.

From a practical perspective though, it may be very difficult to ensure that the programmers one hires do not use ML. Looking at past work may help, but the industry is practically defenseless against this at the moment.