I took my pictures with a Sony RX100 Mark I, which has a 1" sensor and a Zeiss Vario-Sonnar 10.4–37.1 mm lens, equivalent to 28–100 mm on full frame. The lens is very compact and retracts to less than 3 cm, so the designers had to accept heavy compromises in geometric distortion and vignetting to achieve an aperture range of f 1.8–4.9. Here is a picture taken at 10.4 mm and f 1.8, where vignetting is worst (this and all following pictures were processed in RT without applying a tone curve):

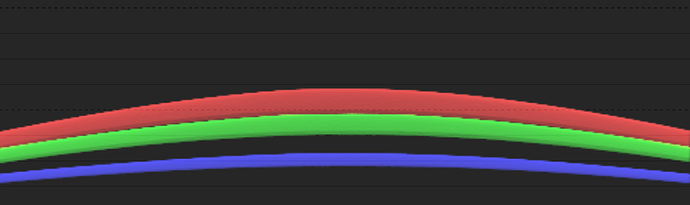

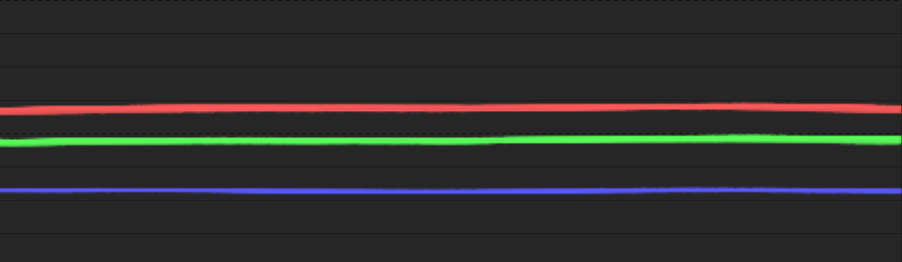

The estimate of 15% less illumination in the corners at 35 mm equivalent focal length, caused by the longer path through the material at oblique angles, applies to a transparent sheet with a light-absorbing dye, such as a neutral density filter, but not to translucent sheets, which contain light-scattering particles. The scattering within the sheet is amazingly homogeneous, as can be seen in the following pictures, taken at night with the lens pointing upwards and the sheet illuminated by an LED street lamp to the left, outside the field of view. Without the sheet, the picture would have shown only dark night sky.
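For the absorbing case, the angle dependence can be sketched with the Beer-Lambert law: light reaching a corner crosses the sheet obliquely and therefore travels a longer path through the dye. This is only an illustration of that kind of calculation, not a reconstruction of how the 15% figure was obtained. The function names are hypothetical, and the normal-incidence transmittance `t0`, the refractive index `n = 1.49` (a typical value for acrylic/PMMA) and the neglect of surface reflections are assumptions.

```python
import math


def corner_field_angle(focal_equiv_mm: float) -> float:
    """Angle (radians) between the optical axis and a frame corner for a
    full-frame-equivalent focal length, using the 36 x 24 mm diagonal."""
    half_diagonal_mm = math.hypot(36.0, 24.0) / 2.0  # about 21.6 mm
    return math.atan(half_diagonal_mm / focal_equiv_mm)


def relative_transmission(theta_air: float, t0: float, n: float = 1.49) -> float:
    """Transmission at incidence angle theta_air relative to normal incidence
    for a flat, purely absorbing (non-scattering) sheet (Beer-Lambert law).

    Assumptions: t0 is the transmittance at normal incidence and n the
    refractive index (about 1.49 for acrylic/PMMA); surface reflection
    losses are ignored.
    """
    theta_inside = math.asin(math.sin(theta_air) / n)  # refraction (Snell's law)
    path_factor = 1.0 / math.cos(theta_inside)         # path length relative to thickness
    # T(theta) = t0 ** path_factor, so T(theta) / T(0) = t0 ** (path_factor - 1)
    return t0 ** (path_factor - 1.0)
```

For example, `relative_transmission(corner_field_angle(35.0), t0=0.5)` gives about 0.95, i.e. corners roughly 5% darker for a sheet that passes half the light at normal incidence; a darker sheet (smaller `t0`) gives a larger drop. A scattering sheet does not follow this model.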

I took a second picture with the camera rotated by 180°, so that the street lamp was to the right. As you can see, there is no apparent difference, although the sheet is now illuminated from the other side.

Correcting vignetting using a picture of an object requires a flat surface of even colour that scatters light evenly (i.e. it is matte rather than glossy or reflective), as well as even lighting of that surface.

With direct sunlight or another point light source at a sufficiently large distance, you get even lighting. However, since you have to take the picture from the side to avoid casting a shadow, you are prone to brightness differences from non-uniform scattering. Here is an example of a flat grey polyethylene surface (the bottom of a collapsible crate) that I photographed at 10.4 mm and f 2.8. In addition to the vignetting from the lens, you can see the brightness decreasing from top left to bottom right (the sun was behind me, high up and to the left).

With complete cloud cover, you can in principle get more even lighting from all directions and thus avoid problems with non-uniform scattering. I tried this with the following setup: a flat white cardboard sheet on top of a hill, so that it was lit only by distant clouds. The view is towards the position of the sun behind the clouds:

I wore off-white pants and a white lab coat to avoid casting shadows, stood on the right-hand side of the setup as seen in the picture, and held the camera over the sheet pointing straight down for the first picture.

For the second picture, I rotated the cardboard sheet by 180°.

For the third picture, I rotated the camera by 180°, with the camera bottom pointing away from me (for all other pictures, the camera bottom was pointing towards me).

For the fourth picture, I walked around the setup to take the photo from the opposite side.

All pictures were taken at 10.4 mm, f 2.2 and focus distance at infinity.

Here are the pictures in order:

Upon close inspection, you will see that the brightness distribution is the same in the first, second and fourth pictures, with identical vignetting and colour casts in the corners and the same brightness fall-off from top to bottom, whereas in the third picture the fall-off runs in the opposite direction, from bottom to top. The fall-off is apparently caused by my body blocking light coming from the side where I was standing, while the direction of the sun had no observable influence.
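To compare such one-sided fall-off between pictures more objectively than by eye, one option is to fit a tilted plane to the brightness of each linear, single-channel image. This is a sketch of my own, not an RT feature, and the function name is hypothetical. With coordinates centred on the frame, a radially symmetric vignetting pattern contributes essentially nothing to the two slope terms, so they isolate the top-to-bottom and left-to-right components.

```python
import numpy as np


def brightness_tilt(img: np.ndarray) -> tuple[float, float]:
    """Fit img ~ a + b*x + c*y by least squares.

    x runs from -1 (left edge) to +1 (right edge) and y from -1 (top row)
    to +1 (bottom row), both centred on the frame. Returns (b/a, c/a): the
    relative brightness change from the centre to the right edge and to the
    bottom edge. A negative c/a therefore means a fall-off from top to bottom.
    """
    h, w = img.shape  # expects a 2-D (single-channel) array with h, w > 1
    y, x = np.mgrid[0:h, 0:w].astype(float)
    x = (x - (w - 1) / 2) / ((w - 1) / 2)
    y = (y - (h - 1) / 2) / ((h - 1) / 2)
    design = np.column_stack([np.ones(img.size), x.ravel(), y.ravel()])
    (a, b, c), *_ = np.linalg.lstsq(design, img.astype(float).ravel(), rcond=None)
    return float(b / a), float(c / a)
```

Rotating the camera by 180° flips the image in both directions, so a fall-off caused by the photographer's position should show up as a sign change of `c/a`, as observed in the third picture.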

Here is the second picture of the cardboard, flat-field corrected with a picture taken through the acrylic sheet into a cloud-covered sky at the same focal length and aperture:
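The principle of flat-field correction can be sketched as dividing the picture by the flat-field frame normalised to its mean, so that any darkening recorded in the flat frame is lifted back up. This is a simplified illustration under my own assumptions (linear data with the black level already subtracted, normalisation per colour channel, no smoothing of the flat frame); RT's own flat-field tool works on the raw data and may normalise and blur the flat frame differently. The function name is hypothetical. It also shows where over-correction comes from: any corner darkening present in the flat frame but not in the picture itself ends up as brightening of the corrected corners.

```python
import numpy as np


def flat_field_correct(image: np.ndarray, flat: np.ndarray) -> np.ndarray:
    """Divide a linear image by a flat-field frame normalised to mean 1.

    Both arrays must have the same shape (H x W or H x W x channels), be
    linear (black level already subtracted) and come from the same focal
    length and aperture.
    """
    if image.shape != flat.shape:
        raise ValueError("image and flat must have the same shape")
    flat = flat.astype(float)
    # Mean over the spatial axes only: one normalisation factor per channel,
    # so colour casts in the corners are corrected without changing white balance.
    gain = flat / flat.mean(axis=(0, 1), keepdims=True)
    return image.astype(float) / gain  # gain < 1 where the flat frame is darker
```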

On close inspection, you can see that, apart from the brightness fall-off from top to bottom, there is some over-correction of the vignetting. This confirms the observation that pictures taken through the acrylic sheet show darkening towards the corners in addition to the vignetting of the lens.
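One way to put a number on such over-correction is a radial brightness profile of the corrected picture: average the brightness in concentric rings around the frame centre and normalise to the innermost ring. A profile rising towards the corners indicates over-correction, a falling one residual vignetting. This is a sketch of my own; the function name and the default of ten rings are arbitrary choices. Because each ring is symmetric about the frame centre, a linear top-to-bottom fall-off averages out.

```python
import numpy as np


def radial_profile(img: np.ndarray, n_bins: int = 10) -> np.ndarray:
    """Mean brightness in n_bins concentric rings around the frame centre,
    normalised to the innermost ring.

    Ring 0 contains the centre, the last ring reaches the corners. The image
    must be 2-D and large enough that every ring contains pixels.
    """
    h, w = img.shape
    yy, xx = np.mgrid[0:h, 0:w]
    r = np.hypot(xx - (w - 1) / 2, yy - (h - 1) / 2)
    r = r / r.max()  # 0 at the centre, 1 at the corners
    ring = np.minimum((r * n_bins).astype(int), n_bins - 1)
    sums = np.bincount(ring.ravel(), weights=img.astype(float).ravel(), minlength=n_bins)
    counts = np.bincount(ring.ravel(), minlength=n_bins)
    profile = sums / counts
    return profile / profile[0]
```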

Since I observe the same additional darkening in the pictures taken through the acrylic sheet when it is evenly illuminated only by a light source outside the field of view, the darkening cannot be caused by different light path lengths within the sheet.

I have a working hypothesis about a possible cause and have ordered more acrylic sheets to test it. I will post again once I can confirm it experimentally.