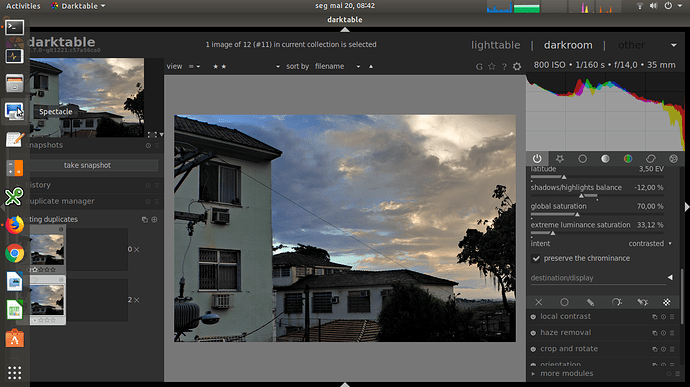

I tried the workaround on this image, and it seems to work much better. Yet the cyan sky is still there…

Yep. I’ll even tell you more – I can’t tell for sure that the sky wasn’t cyan that day, because the light was quite unusual…

The million-dollar question is whether we really want accurate colors to be reproduced or would rather have a purposely harmonized film-like look. I’d say that we want both, depending on our intentions for the particular shot we’re developing, so I would personally like to know whether the color is accurate. What I can say is that basecurve-based development gives different colors from the other development options. Dt has a profile for my camera, so I’d assume it to be accurate color-wise (however, some portraits tend to show the opposite). Unless the future development takes place, of course – which is most probably the case. However, unless we’re shooting special-purpose pro shots, who cares if there is a subtle color shift? In some weather conditions the colors may change every few minutes. Let alone that viewing conditions matter as well. In the end I’d say I want skin tones to be as accurate as possible and the other colors to have no noticeable shifts. That’s the bare minimum I’d demand from RAW processing software. Yet considering that dt currently tends toward becoming a full-fledged pro tool, the expectations are higher.

@anon41087856, first of all let me thank you for your efforts to improve dt and to make it pro-grade development software. It’s much appreciated. However, there are some steps to take before that comes true in full… as usual…

Maybe in this case these two should be combined (or OR-ed) somehow? That’s the part I don’t really comprehend. I understand that the RGB values of every pixel must finally be set “in place” in the colorspace we output the image in, be it a file or an image on the dt screen, or whatever. Isn’t it the output profile’s job to do such a conversion? Why do you have to think about it earlier in the pipeline? If this approach has some issues (and it obviously has), maybe it’s time to reconsider the output color profile module? And more generally, what’s wrong with the Lab colorspace as an internal representation? Lack of proper math? Human perception issues that have to be corrected somehow? That’s enigmatic to me and looks like complete nonsense…

This is the part that worries me the most. Let alone chromatic aberrations (however, even in this case there might be issues, for you’re not always able to get rid of them completely). As for input profiles – dt has my camera type profiled. Should I consider this profile “good” or “bad”, given that a different copy of the camera was actually profiled? Can’t filmic extract some coefficients from there to fine-tune the math?

And here comes the thing I don’t understand at all: “color artifacts”. What are they? A blown channel in the highlights definitely is one. But those can be found in the majority of shots, especially ones made with cheaper camera models. Is filmic usable with such shots? If not, can it be fixed somehow? Can the math be made more stable under such circumstances?

As for color balance, here things start to make even less sense to me. There is no such thing as “right” or “kinked” color balance, is there? What color temperature is supposed to be “right”? Light conditions differ dramatically. Is an unusual light color an artifact? Sunset light? If so, at what color temperature of light is it safe to use filmic? What about the following shots? Will filmic ever be able to cope with such light?

This?

Or this?

If not, that’s a pity, because those kinds of shots are the ones that need filmic’s features the most…

Currently filmic produces great results on some shots while destroying others, so it’s kind of hit-and-miss at the moment…

Glad you like it. It’s just a workaround I figured out; it’s not absolutely accurate.

Regarding your image, is WB ok in this image? I mean, if it’s set to the camera value then it might be off, because the camera measures WB on the brightest point. If that point is on a white surface then WB is more or less accurate; if not, like here on a non-gray pedestal, then WB would be well off an accurate value.

UPD: Yes, you had the wrong WB setting. I set it on the light projector under the pole (neutral, no paint), and look at the image now - it’s natural, without a pink cast. Always make sure your basic settings are correct :)


Actually not, sorry)) It looks more natural indeed, but there’s no way it is right. The light was pronouncedly yellow that day, tinting everything it fell on, so checking with the light projector isn’t accurate either, because it was illuminated by the yellow light, had reflections from the grass, etc. So with the right WB it must have some yellow and green casts. I had of course checked the projector when trying to get rid of the color cast before starting this thread. That’s the problem with WB: it has to correspond to an (unknown) light source, not the theoretical white point at 6500K or whatever, and it’s impossible to find it out later on – unless you shoot a grey card before each shot. (Please check my previous post here for some more on this). And the sky cast is still there in your last try. So yes, it looks more natural. No, that’s not what I was shooting.

UPDATE: And even worse – I suddenly realized that even shooting a grey card won’t help, because it’s intended to eliminate a color cast while I want to keep it. And maybe even emphasize in some cases…

@kanyck , I think in this post you’ve hit on what I think is the essential problem - for this image, there’s no reference for “accurate” if you don’t have light-referenced white balance information. What you’re chasing for this image is rooted in your particular perception of the scene, which is not colorimetrically “accurate”.

Your camera has a spectral response that is influenced by a bunch of design decisions, and that response is referenced to human expectation only through a transform to a white balance corresponding to a known reference in the scene. If that transform doesn’t meet your expectations regarding what you remember of the scene, the subsequent transform to do so is more about a particular “look”.

FWIW…

Lab is an attempt from 1976-ish to describe how humans perceive colors with regard to luminance. It is a color model that is not supposed to be used as a pixel-pushing space. Besides, it is not really hue-linear, meaning that pushing L at the same a and b coordinates does not actually preserve hues.

What filmic does is separate from color space conversion, since it is essentially squeezing HDR into LDR in a pleasing way. In doing that, the RGB primaries have an impact, so not every RGB space is a suitable one (as I have learned the hard way). The problem with dt is that it sits in an ICC display-referred workflow, and doing stuff outside of that framework (because ICC sucks at HDR) needs ugly workarounds (compared to @Carmelo_DrRaw 's Photoflow, which is developed cleanly from scratch). So the challenge is to try doing scene-referred stuff connected to a mostly display-referred pipe grounded in ICC color management.

Good or bad profiles can be measured with the delta E between the theoretical values of a color chart and the ones from a raw picture of said chart with the profile applied. There is no math to fine-tune. The profile is accurate or not. If not, you carry the inaccuracies along the pipe, possibly amplify them, until they blow up in your face. I personally have stopped using self-made color profiles since I noticed they don’t change much in nice cases and blow up big time in hard ones (when you need them the most).
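To make the delta E check concrete, here is a minimal sketch, assuming the simple CIE76 formula (plain Euclidean distance in Lab). The function names and the patch values are made up for illustration; they are not measurements and not darktable code.

```python
import math


def delta_e_76(lab_ref, lab_meas):
    """CIE76 colour difference: Euclidean distance between two Lab triplets."""
    return math.sqrt(sum((r - m) ** 2 for r, m in zip(lab_ref, lab_meas)))


def profile_error(reference, measured):
    """Return (mean, max) delta E over matching colour chart patches."""
    errors = [delta_e_76(r, m) for r, m in zip(reference, measured)]
    return sum(errors) / len(errors), max(errors)


# Hypothetical patches: chart reference values vs. values read from a
# profiled raw shot of the same chart (illustrative numbers only).
reference = [(50.0, 0.0, 0.0), (60.0, 10.0, -5.0)]
measured = [(50.0, 3.0, 4.0), (60.0, 10.0, -5.0)]

mean_de, max_de = profile_error(reference, measured)
print(f"mean dE = {mean_de:.2f}, max dE = {max_de:.2f}")
```

The lower the mean and maximum, the closer the profile gets the chart to its reference values. Newer formulas such as CIEDE2000 weight the differences more perceptually, but the principle is the same.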

Color artifacts are chromatic aberrations, parasitic color casts, out-of-gamut lighting (blue LED spotlights will consistently fail, but it’s not just filmic, it’s digital imagery in general), blown channels, poor input color profiles, etc.

Yes there is. Color profiles (input or output) assume a standard illuminant (D50 or D65). The correction they make is accurate only if the data are corrected to this illuminant before, otherwise their values are wrong. So you need to neutralize the white balance (make white look white, e.g. a = b = 0), apply the profile, then possibly apply a back-transform (with color balance module, for example) to restore the orange cast if you want, but later in the pipe. Otherwise, your whole input profile is pointless.
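The order of operations described here (neutralize, profile, then restore the cast) can be sketched roughly as follows. This is only an illustration: the white balance multipliers, the 3x3 profile matrix and the warm cast gains are hypothetical numbers, and the helper functions are not darktable's API.

```python
def apply_gains(rgb, gains):
    """Multiply each channel by its own gain (white balance or creative cast)."""
    return tuple(c * g for c, g in zip(rgb, gains))


def apply_matrix(rgb, matrix):
    """Apply a 3x3 colour matrix (rows are output channels) to an RGB triplet."""
    return tuple(sum(row[i] * rgb[i] for i in range(3)) for row in matrix)


# Hypothetical values, for illustration only.
WB_MULTIPLIERS = (2.0, 1.0, 4.0)      # neutralizes the scene illuminant
INPUT_PROFILE = (                     # assumes neutralized data; rows sum to 1
    (1.5, -0.25, -0.25),
    (-0.25, 1.5, -0.25),
    (-0.25, -0.25, 1.5),
)
WARM_CAST = (1.2, 1.0, 0.8)           # creative cast re-applied later in the pipe

raw_grey_patch = (0.25, 0.5, 0.125)   # a grey object as recorded by the sensor

neutral = apply_gains(raw_grey_patch, WB_MULTIPLIERS)   # step 1: white looks white
profiled = apply_matrix(neutral, INPUT_PROFILE)         # step 2: profile on neutral data
graded = apply_gains(profiled, WARM_CAST)               # step 3: restore the cast

print(neutral, profiled, graded)
```

Because the profile only sees neutralized data, the grey patch stays grey through step 2, and the warm cast becomes a deliberate choice made afterwards instead of an error baked into the profile conversion.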

You need to understand that what your camera sees is not what you see, because it doesn’t see the same way. Digital imaging gets around that by making numerous assumptions while wiring bits together into a pixelpipe. The failure of the whole digital photo workflow is to bury these assumptions deep inside the software (photographers are not supposed to be engineers, it seems) such that, when you don’t fit the correct assumptions at the right time, you have no way to understand that the software works as expected, but you are actually using it wrong.

@ggbutcher, I didn’t mean to dig that deep, but you’re absolutely right. You either have to take a colorimeter to each shoot (not the gray card, that was my mistake), or you’re on your own in the middle of nowhere relying on your memories… But the good news is that in 99.9% of cases we don’t really need colorimetric accuracy. Processing the picture to create about the same feeling you had when shooting is the most frequent (and reasonable) goal. So, leaving precise accuracy to special purposes, we still need a way to represent the scene as accurately as possible first, then to massage the image until you get the proper inner response while looking at it. Which I consider to be a very good approximation of “good photography”. OTOH, say, a noticeable cast may easily ruin the whole picture.

Think of the film era. The film makers, while creating a particular film type, considered not only the physical (chemical, etc.) restrictions, but also the human eye’s perception and which color combinations would be considered pleasant and which wouldn’t, and put that into the film design, sacrificing accuracy for a pleasant look. So no, I’m not aiming at true color accuracy. And yes, I’d like to retain as much control as possible at my level of knowledge.

So daylight or tungsten color, then trying to color correct with a filter at the scene, then into the darkroom to print test strips and turn knobs until I got something pleasing?

Not exactly. AFAIU the whole story, the films were designed in such a way that they produced a pleasant-looking output more or less by themselves if you did the whole process right, because digital editing had not been invented yet. That was the point…

“Colour artifacts” and properties that are outside the bounds of filmic's underlying assumptions give it something that it doesn’t expect. Unlike humans, algorithms can’t tell the difference between what is good and what is bad. They just compute (and compare, if you are talking about machine learning). Modern algorithms account for inconsistencies and outliers by trying to remove their influence. However, implementing that is difficult because there is no such thing as an all-encompassing strategy.

The best thing to do is to deal with these issues before applying filmic. With the right pre-processing, it will work.

Okay, I need some more time to fully understand what @anon41087856 replied, but in general what I learned from the discussion is the following.

- There are always some errors in color reproduction caused by inaccurate color profiles, WB and/or luma settings, color space design flaws, etc. that accumulate throughout the pixelpipe and may reach values that are visible or even unacceptable. Which is quite obvious, though. Also, there are some color space and/or framework design flaws that add up with human color perception nuances to make a proper implementation really hard. I supposed that might be the case, but it’s the first time I’ve bumped into its consequences in practice (and the hard way).

- Dt is implemented using a framework that can hardly deal with HDR in a proper and clean way. That’s bad news, given that most current cameras have a dynamic range of 14+ stops that we must somehow squeeze into the 8 stops of a jpeg to display or publish. And I do like dt. Uhh.

- Due to the algorithms used, filmic is more sensitive to those errors than most other modules and prone to accumulating errors more quickly. Okay, it’s a pity but that’s what we have.

- In the current implementation there’s a known issue with chroma preservation that doesn’t have an obvious solution at the moment but will be sorted out somehow in the future. Good, we may count on some improvement in the future.

- Filmic is by design unable to properly deal with images that have a noticeable cast (like the ones I posted earlier today), are severely over/underexposed, have chromatic aberrations, etc. That’s the bad news, but at least I better understand its limitations.

- On normally lit scenes and with proper pre-processing, filmic will most probably do its job quite well. So it will take some learning to squeeze the most out of its capabilities.

Did I forget something important?

The filmic implementation has two main issues, in my opinion.

The first is that it maps mid-gray 0.18 to 0.50 (tone mapping + inverse display gamma), but chroma preservation is useful only when a tone-mapping operator maps 0.18 to 0.18.

example:

Very high dynamic range frame exported from a Samsung HDR demo

Chroma preservation without desaturation, so yes, it looks wrong, but the oversaturation is only in the highlights

The second issue is that an earlier design of the tool had an exposure slider; now it’s missing.

It is fundamental for using the filmic module intuitively.

For example increase the exposure of the photo and export as floating point linear

Reopen in darktable and use filmic

20170626_0028dt.tif.xmp (2.9 KB)

Sorry, but not sorry. The image in your head is an imprint with your own imaginary tone curve; it has nothing to do with the title of this discussion.

@mosaster, technically you’re right. Practically, I’d like to get the result closest to what I saw when shooting, without any parasitic color cast, and that is a different story. In that sense @age’s observations, as well as the processing result (s)he achieved, are much more valuable to me in the scope of this discussion. I can’t fully tell whether his/her assumptions are valid, but it sounds like (s)he may have nailed the issue. Let the devs judge that…

@age, your processing is the closest to what I wanted to get. Thanks!

@kanyck I always look forward to @age’s processing. I suggest you examine his other works among the #processing:playraw threads.

There is no mapping if grey goes from 0.18 to 0.18, it’s a no-op. Filmic maps whatever grey value you want to 0.18^{1/\gamma}.
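As a quick worked example of that last expression, here is the arithmetic for a few display gammas. The 2.2 and 2.4 values are just common choices picked for illustration, and the function name is made up; this is not filmic's code.

```python
def display_grey(gamma, scene_grey_target=0.18):
    """Display-encoded value of the grey target: target ** (1 / gamma)."""
    return scene_grey_target ** (1.0 / gamma)


for gamma in (1.0, 2.2, 2.4):
    print(f"gamma {gamma}: 0.18 -> {display_grey(gamma):.3f}")
```

With gamma 2.2 the result lands at roughly 0.46, so the encoded grey ends up near the middle of the display range even though it is still 0.18 in linear terms.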

Not sure which one of the pics is filmic’s output, but that magenta sky is definitely out of this world and more likely out of any reasonable gamut.

You have an exposure module in darktable already, and you might want to correct exposure at the beginning of the pixelpipe (filmic comes at the end), and the grey value slider is equivalent to an exposure compensation.
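The equivalence between a grey value and an exposure compensation can be sketched like this, assuming the grey setting acts as a plain linear scaling that brings the chosen scene grey to 0.18. The function names are hypothetical, and values are on a 0 to 1 scale, not the percentages shown in the UI.

```python
import math


def equivalent_exposure_ev(grey_value, target=0.18):
    """Exposure compensation (in stops) that moves grey_value onto target."""
    if grey_value <= 0:
        raise ValueError("grey_value must be positive")
    return math.log2(target / grey_value)


def apply_exposure(linear_value, ev):
    """Scale a linear value by 2 ** ev, as an exposure correction would."""
    return linear_value * 2.0 ** ev


# A scene whose mid-grey was metered at 0.09 (linear) is one stop under.
ev = equivalent_exposure_ev(0.09)
print(ev, apply_exposure(0.09, ev))
```

Under that assumption, picking a darker grey value has the same effect on the midtones as raising the exposure by the corresponding number of stops earlier in the pipe.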

If you look at the photos I posted here 2 days ago you’ll probably have to change your mind…