Unfortunately I can’t change the title of this topic to better reflect that the primary focus for me is the G’MIC OpenFX plugin (with Adobe plugins following shortly after that).

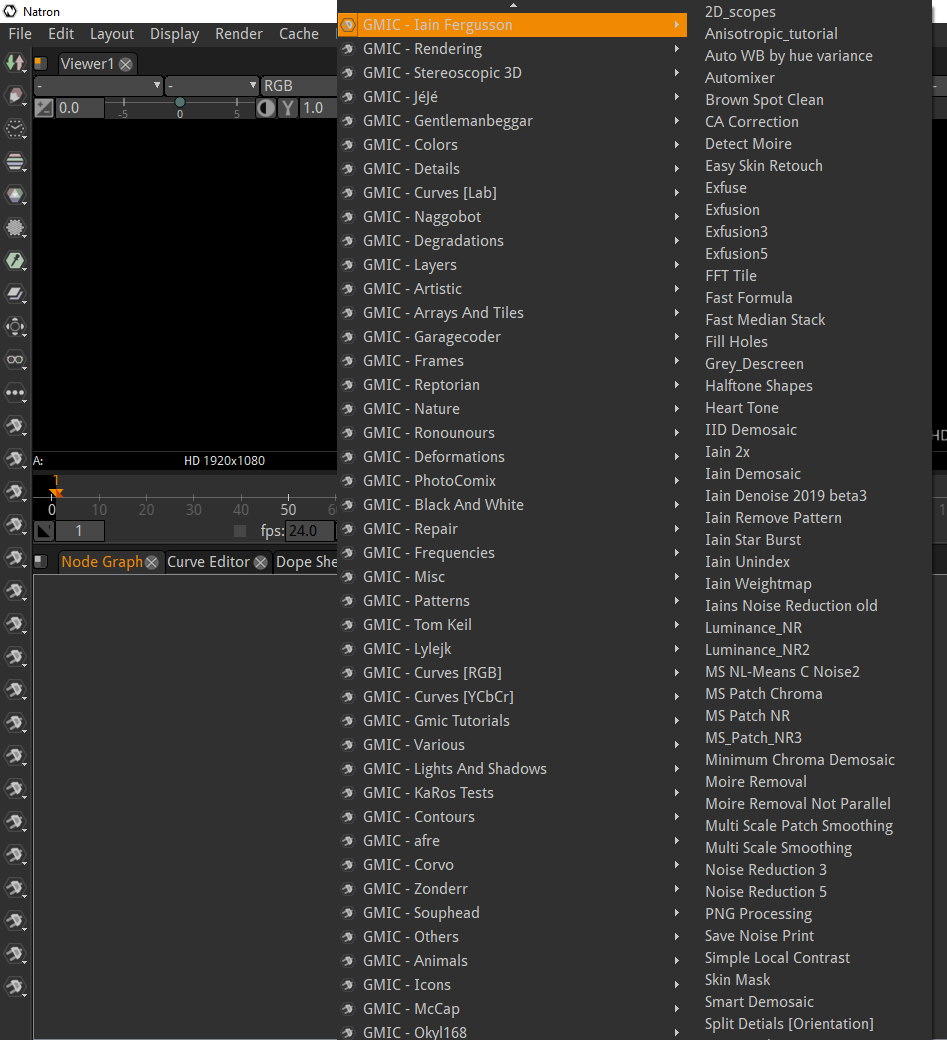

So I made good progress in the last few days: I can now parse the latest 2.9.3 filters and make them available in hosts like Natron. “Unfortunately”, even when skipping the about, animated, interactive, sequence, etc. filters that do not make sense in a render-only context, we end up with more than 1000 filters, which makes them quite difficult to present in a context menu like the one in Natron or Nuke, see here:

I added the option to define an allow/deny list in a text file so that a user can choose which filters should appear as OFX plugins and which should not, but the number is still overwhelming, and we only have one level of grouping (the category) in both OFX and G’MIC. So I am not sure yet how this will evolve in the future.

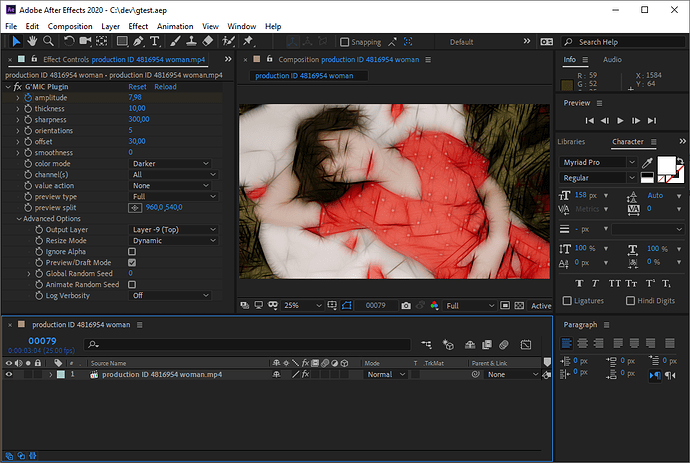
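
To illustrate the allow/deny idea, here is a minimal sketch in Python. The file format is purely hypothetical and not the one the plugin actually uses: one filter name per line, a leading `-` denies a filter, any other line allows it, and `#` starts a comment. The filter names are placeholders too.

```python
def parse_filter_list(text):
    """Parse a hypothetical allow/deny list into two sets of filter names."""
    allow, deny = set(), set()
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if line.startswith("-"):
            deny.add(line[1:].strip())
        else:
            allow.add(line.lstrip("+").strip())
    return allow, deny


def select_filters(names, allow, deny):
    """Keep filters that are allowed (or all, if no allow entries) and not denied."""
    return [n for n in names if (not allow or n in allow) and n not in deny]


# Placeholder filter names, for illustration only.
available = ["Sharpen", "Sepia", "Cartoon", "Blur"]
config = """
# expose only these three
Sharpen
Sepia
Blur
# ...but hide this one again
-Sepia
"""
allow, deny = parse_filter_list(config)
exposed = select_filters(available, allow, deny)
print(exposed)
```

In this sketch an empty allow set means "expose everything that is not denied", so a user who only wants to hide a handful of filters does not have to list the other 1000.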

I am still testing functionality, and whenever I find a plugin that is not working, I run the same command in the G’MIC CLI to see what the expected output should be.

In Natron, I often run into the dreaded “stack overflow” errors, though. I patched the Natron binary to increase the stack size to 16 MB, just like the G’MIC CLI has, but it still seems to happen from time to time.
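
A different approach from patching the host, shown here only as a sketch of the general idea: run the stack-hungry work in a dedicated thread that is created with a larger stack. This is not what the plugin currently does. The helper `run_with_stack` and the recursive `depth` function are made up for illustration; only `threading.stack_size` and `sys.setrecursionlimit` are real standard-library calls.

```python
import sys
import threading


def run_with_stack(func, stack_bytes=16 * 1024 * 1024):
    """Run func() in a new thread created with the given stack size."""
    result = {}

    def target():
        try:
            result["value"] = func()
        except BaseException as exc:  # hand errors back to the caller
            result["error"] = exc

    previous = threading.stack_size(stack_bytes)  # applies to threads created next
    try:
        worker = threading.Thread(target=target)
        worker.start()
    finally:
        threading.stack_size(previous)  # restore the earlier setting
    worker.join()
    if "error" in result:
        raise result["error"]
    return result["value"]


def depth(n):
    """Deliberately recursive stand-in for a stack-hungry computation."""
    return 0 if n == 0 else 1 + depth(n - 1)


sys.setrecursionlimit(20000)  # Python's own limit, separate from the stack size
levels = run_with_stack(lambda: depth(5000))
print(levels)
```

The point of this design is that a plugin usually cannot control the stack of the thread the host calls it on, but it can choose the stack size of a thread it creates itself.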

If there is interest in testing the OFX plugin for G’MIC on Windows, let me know and I will upload my current binaries here.