OK, here is a new round of tests. I was wrong that lens correction doesn't matter (but I still think that when everything is correct it shouldn’t matter).

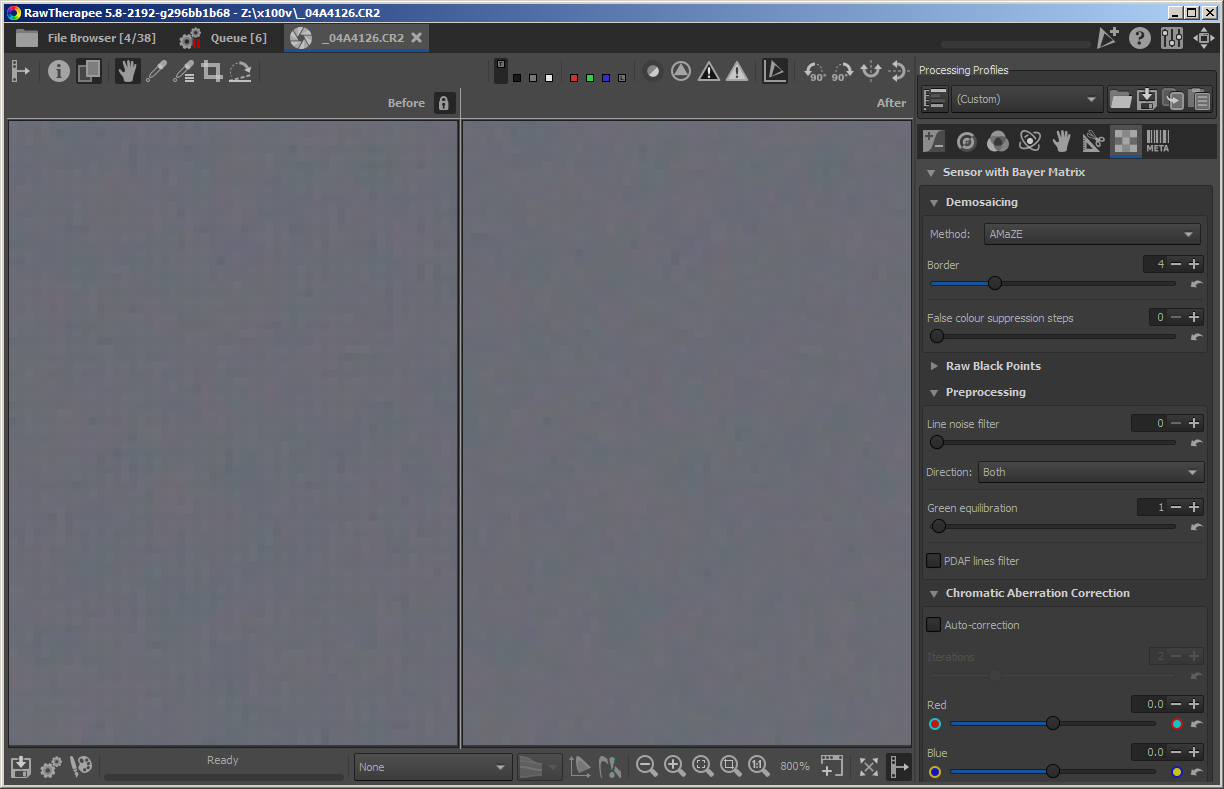

I tried four different settings in RawTherapee 5.8-2178:

- With and without lens correction
- With and without white-balance preprocessing

Here are the sidecars:

- `s1_04A4145.tif.out.pp3` (13.4 KB)
- `s2_04A4126.tif.out.pp3` (13.4 KB)
- `s3_04A4126.tif.out.pp3` (13.5 KB)
- `s4_04A4126.tif.out.pp3` (13.5 KB)

Note that I added the prefixes “s1”, “s2”, etc. so the files won’t collide in the same directory.

Here is a summary for those who don’t want to dig into the sidecars; these are the only entries that differ.

| Setting | `LcMode` | `LCPFile` | `[RAW Preprocess WB]` `Mode` |
|---|---|---|---|
| 1 | `none` | (empty) | `0` |
| 2 | `none` | (empty) | `1` |
| 3 | `lcp` | `C:\ProgramData\Adobe\CameraRaw\LensProfiles\1.0\Zeiss\Canon\Canon (Zeiss Milvus 2_50M ZE) - RAW.lcp` | `0` |
| 4 | `lcp` | `C:\ProgramData\Adobe\CameraRaw\LensProfiles\1.0\Zeiss\Canon\Canon (Zeiss Milvus 2_50M ZE) - RAW.lcp` | `1` |
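Comparing sidecars by eye is error-prone, so a small script can list the differing entries instead. This is a minimal sketch, assuming each `.pp3` file is INI-like (`[Section]` headers followed by `Key=Value` lines); `parse_pp3` and `differing_keys` are hypothetical helper names, not part of RawTherapee.

```python
# Minimal sketch: list the entries that differ between RawTherapee .pp3 sidecars.
# Assumption: .pp3 files are INI-like, i.e. "[Section]" headers and "Key=Value" lines.


def parse_pp3(text: str) -> dict[tuple[str, str], str]:
    """Map (section, key) -> value for every Key=Value line in a .pp3 text."""
    entries: dict[tuple[str, str], str] = {}
    section = ""
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1]  # start of a new section
        elif "=" in line:
            key, value = line.split("=", 1)  # split on the first "=" only (paths contain ":" etc.)
            entries[(section, key)] = value
    return entries


def differing_keys(sidecars: dict[str, str]) -> dict[tuple[str, str], dict[str, str]]:
    """Return only the (section, key) pairs whose value is not identical in every sidecar."""
    parsed = {name: parse_pp3(text) for name, text in sidecars.items()}
    all_keys = set().union(*parsed.values())
    result: dict[tuple[str, str], dict[str, str]] = {}
    for key in sorted(all_keys):
        values = {name: entries.get(key, "<missing>") for name, entries in parsed.items()}
        if len(set(values.values())) > 1:  # at least two sidecars disagree
            result[key] = values
    return result
```

To run it on real files, read each sidecar with something like `pathlib.Path(name).read_text(encoding="utf-8")` (the encoding is an assumption) and pass a `{filename: text}` dict to `differing_keys`.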

As described in the posts above, the odd behavior shows up in a set of 16 samples, each 200 × 200 pixels, taken from the center of the frame.
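The exact layout of the 16 samples isn't specified here. One plausible arrangement, assumed purely for illustration, is a 4 × 4 grid of adjacent 200 × 200 tiles centered in the frame. `center_patches` is a hypothetical helper that works on an image already loaded as a NumPy array.

```python
import numpy as np


def center_patches(img: np.ndarray, patch: int = 200, grid: int = 4) -> list[np.ndarray]:
    """Cut a grid x grid block of adjacent patch x patch tiles centered on the image.

    Assumption: the samples are contiguous tiles; the real layout may differ.
    Works for 2-D (single channel) and 3-D (H x W x C) arrays.
    """
    h, w = img.shape[:2]
    block = patch * grid
    if block > h or block > w:
        raise ValueError("image too small for the requested grid")
    top = (h - block) // 2
    left = (w - block) // 2
    tiles = []
    for r in range(grid):
        for c in range(grid):
            y0 = top + r * patch
            x0 = left + c * patch
            tiles.append(img[y0:y0 + patch, x0:x0 + patch])
    return tiles
```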

With darktable and other raw converters, these samples behave as expected.

With RT they are fine at ISO 200, 400, 800, 1600 and 3200.

But with RT at ISO 100 they behave strangely.

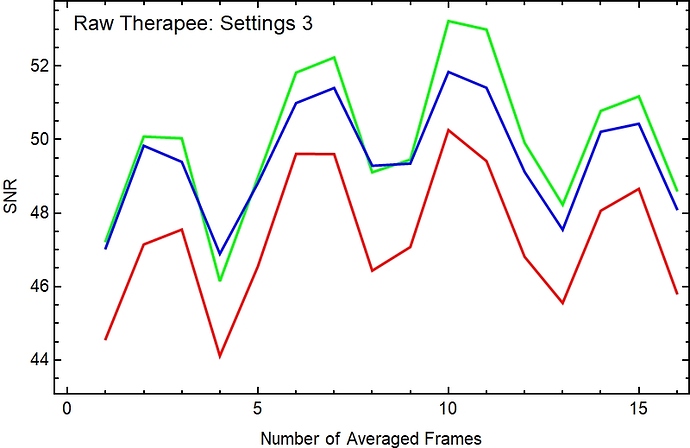

Here is a plot of the SNR for each of the 16 samples, for each of the RT settings.

As you can see, they are radically different.
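SNR isn't defined precisely in the post. A common choice for a uniform patch is the mean divided by the standard deviation, and the sketch below assumes that definition, applied to a single-channel (or luminance) array. `patch_snr` and `snr_per_patch` are hypothetical names.

```python
import numpy as np


def patch_snr(patch: np.ndarray) -> float:
    """SNR of a nominally uniform patch, assumed here to be mean / sample standard deviation."""
    values = np.asarray(patch, dtype=np.float64)
    return float(values.mean() / values.std(ddof=1))


def snr_per_patch(patches) -> np.ndarray:
    """One SNR value per sample patch, e.g. for the 16 center samples."""
    return np.array([patch_snr(p) for p in patches])
```

If a decibel scale is preferred, `20 * np.log10(snr)` converts the ratio.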

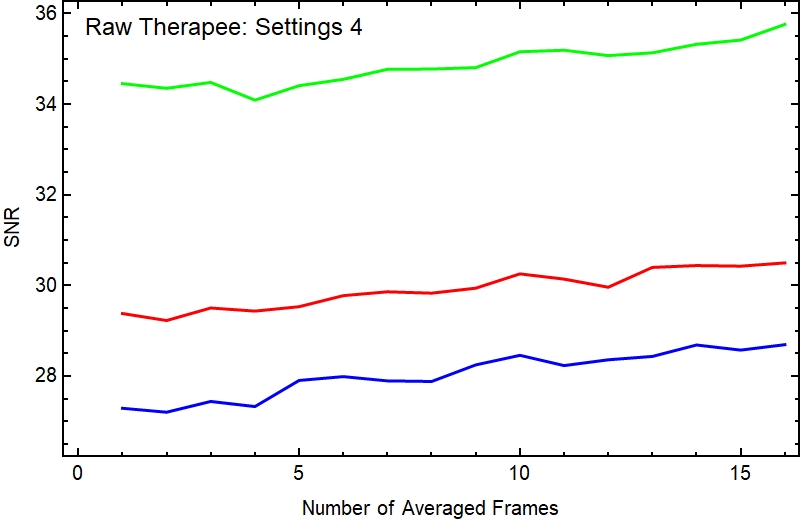

If we average these 16 sub-samples and look at the SNR of the averages, we get graphs like this for settings 1, 2 and 4:

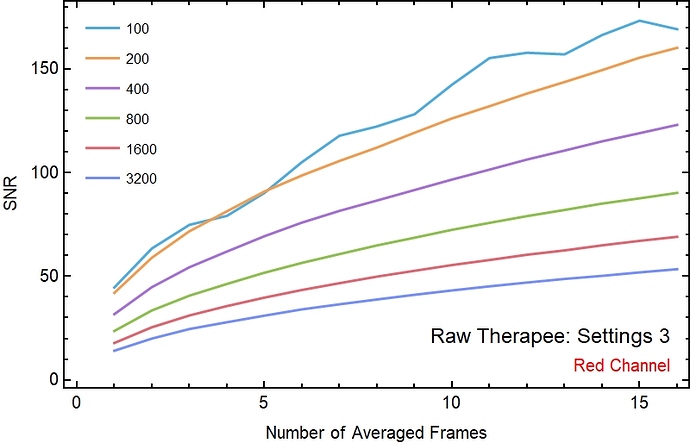

And we get this for setting 3:

The ISO 100 line (cyan) should increase roughly as √N, where N is the number of frames being averaged.
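The √N expectation is the standard result for averaging, assuming each of the N frames has the same mean μ and independent noise with the same standard deviation σ:

$$
\bar{x} = \frac{1}{N}\sum_{k=1}^{N} x_k,\qquad
\operatorname{Var}(\bar{x}) = \frac{1}{N^{2}}\sum_{k=1}^{N}\operatorname{Var}(x_k) = \frac{\sigma^{2}}{N},\qquad
\mathrm{SNR}_N = \frac{\mu}{\sigma/\sqrt{N}} = \sqrt{N}\,\mathrm{SNR}_1 .
$$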

This is exactly what happens for all of the other ISO examples, for all four of the RT settings.

Instead, for settings 1, 2 and 4, RT produces output with a large amount of correlated noise that averaging cannot reduce.
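A small simulation shows why correlated noise resists averaging. Assume each frame is the true level μ, plus a pattern with standard deviation σ_c that is identical in every frame, plus fresh random noise σ_n. Averaging N frames then leaves a variance of σ_c² + σ_n²/N, so the SNR levels off at μ/σ_c instead of growing as √N. The numbers are synthetic, not measured data, and `averaged_snr` is a hypothetical helper.

```python
import numpy as np


def averaged_snr(n_frames: int, mean: float, sigma_random: float,
                 sigma_fixed: float, shape=(200, 200), seed: int = 0) -> float:
    """SNR (mean / std) of the average of n_frames synthetic patches.

    Each frame = mean + fixed pattern (identical in every frame) + fresh random noise.
    """
    rng = np.random.default_rng(seed)
    fixed = sigma_fixed * rng.standard_normal(shape)  # correlated: same in all frames
    total = np.zeros(shape)
    for _ in range(n_frames):
        total += mean + fixed + sigma_random * rng.standard_normal(shape)
    avg = total / n_frames
    return float(avg.mean() / avg.std(ddof=1))


if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        clean = averaged_snr(n, 1000.0, 20.0, 0.0)    # random noise only
        pattern = averaged_snr(n, 1000.0, 20.0, 5.0)  # plus a fixed pattern
        print(f"N={n:3d}  random only: {clean:6.1f}  with pattern: {pattern:6.1f}")
```

With `sigma_fixed=0` the SNR tracks 50·√N; with `sigma_fixed=5` it stays below 1000/5 = 200 however many frames are averaged.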

For setting 3, RT produces output whose SNR grows in a strangely lumpy way as frames are averaged.

Here is my interpretation:

- Something is wrong with RT's ISO 100 output for all of the RT settings. It should behave like the files at the other ISO values! There should be nothing about ISO 100 that causes problems.

- The lens correction, which I had thought was harmless, does interact in some very strange way with white-balance preprocessing `Mode=0`, producing the weird case of setting 3. It does not behave that way with `Mode=1`.

- Note also that I use the same lens correction in darktable, and there seems to be no problem.

- The way I arrived at these results (trying to measure SNR at different ISO values by photographing an out-of-focus board) may seem weird and invalid to some of you, but that really doesn’t matter here. Think of my tests as an artificial probe, like a canary in a coal mine, that is sensitive to the low-level noise structure of the converted file.

What I seem to have found is that RT has some very odd dependence on ISO, white balance and lens correction.

It could be that there is some RT setting which would make this all better, but I can’t think of what it is.

For example, why should ISO 100 be singled out as the weird one?

Here is a wild guess: RT introduces non-random, correlated noise of some fixed amplitude. At ISO 200 and above the camera's own noise dominates, but at ISO 100 these errors are large compared to the native noise.
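The guess can be put into numbers with a toy model. It rests on two assumptions, both illustrative rather than measured. First, the native noise is shot-noise limited, so at constant output brightness its relative size grows by √2 per ISO stop. Second, the hypothetical RT artifact has a fixed relative amplitude that does not depend on ISO. `artifact_share` is a hypothetical helper.

```python
import math


def artifact_share(iso: int, native_rel_noise_iso100: float,
                   artifact_rel_noise: float) -> float:
    """Fraction of total noise variance contributed by a fixed-size artifact.

    Assumptions (toy model): native noise is shot-noise limited and grows by
    sqrt(2) per ISO stop above ISO 100; the artifact amplitude is ISO-independent.
    """
    stops = math.log2(iso / 100)
    native = native_rel_noise_iso100 * math.sqrt(2) ** stops
    return artifact_rel_noise**2 / (artifact_rel_noise**2 + native**2)


if __name__ == "__main__":
    # Hypothetical values: 1% native noise at ISO 100, 0.5% artifact.
    for iso in (100, 200, 400, 800, 1600, 3200):
        print(iso, round(artifact_share(iso, 0.01, 0.005), 3))
```

Under this model the artifact's share of the noise is largest at ISO 100 (0.2 with these example values) and falls below 0.01 by ISO 3200.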

Others on this forum are much more expert with RT than I am, and hopefully can tell me if there is a setting that I should try.

Alternatively, darktable seems to get the right answer no matter what. So I could just move on and use darktable, but it is significantly slower than RT.