I am experimenting with an HDR workflow based on reshaping the sigmoid module.

I have only recently started doing HDR processing. I have a decent SDR display that works well with darktable. I knew darktable could export to HDR, but I didn’t have a reliable HDR display for reference until I got a MacBook Pro.

I wanted to solve the problems I have encountered (and I’m sure others have too) when making HDR exports:

- Module defaults and presets do not work; getting the correct exposure and tone takes a lot of tweaking.

- The same goes for sliders: big adjustments often need values outside the standard range.

I could work around these issues using multiple modules, parametric masks, etc., but that is a complicated workflow that is hard to replicate across different files, with a lot of tedious work before getting to the fun (creative) edits.

My aim was to simplify things and do the technical processing with the fewest modules, i.e. map the mid-tones, shadows, and highlights to where they should sit in the output, or at least get them in the ballpark. I wanted to see if I could shape the sigmoid to approximate the HLG curve.

The logic is similar to filmic (or the normal use of sigmoid), which provides a consistent, parametric way of mapping an HDR input to an SDR output. I like filmic, and as it matured I found that the less I played with it, the better the result. I have since moved to sigmoid because I pretty much only use it with the default preset when processing for SDR.

Setting up Sigmoid

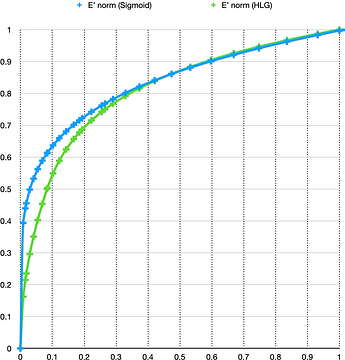

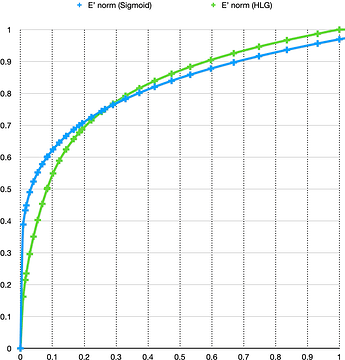

Using the web app by @jandren:

I eyeballed the sigmoid curve against the ACES HLG curve. The two have a similar slope (dy/dx) through the range. They don’t converge at either end, but I figured the important part was matching the slope and position in the middle. The sigmoid rolls off the highlights more aggressively, which isn’t necessarily a bad thing for HDR photos.
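To make the slope comparison concrete, here is a minimal Python sketch that measures each curve’s slope per stop of exposure. It assumes the BT.2100 HLG OETF as a stand-in for the ACES HLG curve (they are not the same transform), and it uses a hypothetical skewed log-logistic curve in place of darktable’s sigmoid. The `contrast`, `pivot` and `skew` parameters are illustrative only and do not map one-to-one onto the module’s sliders.

```python
import math

# ITU-R BT.2100 HLG OETF constants
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_oetf(e: float) -> float:
    """BT.2100 HLG OETF: normalised scene light e in [0, 1] -> signal in [0, 1]."""
    if e <= 1 / 12:
        return math.sqrt(3 * e)
    return A * math.log(12 * e - B) + C


def log_logistic(x: float, contrast: float = 1.5, pivot: float = 0.05, skew: float = 1.0) -> float:
    """Hypothetical skewed log-logistic curve (NOT darktable's exact sigmoid formula).

    Returns 0.5 at x == pivot when skew == 1; skew != 1 bends the curve asymmetrically.
    """
    t = x ** contrast
    return (t / (t + pivot ** contrast)) ** skew


def slope_per_stop(f, x: float, h: float = 0.01) -> float:
    """Central-difference slope of f with respect to log2(x), i.e. signal change per stop."""
    return (f(x * 2 ** h) - f(x * 2 ** -h)) / (2 * h)


if __name__ == "__main__":
    # Compare the two curves' slopes from deep shadows up to HLG peak (e = 1.0).
    for ev in range(-8, 1):
        x = 2.0 ** ev
        print(f"{ev:+d} EV  HLG {slope_per_stop(hlg_oetf, x):.3f}  "
              f"sigmoid {slope_per_stop(log_logistic, x):.3f}")
```

Adjusting the hypothetical `contrast` and `pivot` until the two slope columns roughly agree around the mid-tones is the numeric equivalent of eyeballing the curves in the web app.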

I arrived at the following values for the sigmoid module:

- Target black: -13 (default)
- Target white: 12
- Contrast: 1.45
- Skew: -0.55

I left target black at its darktable default, as I am not sure how the value translates from the web app to darktable. Colour processing is set to RGB ratio.
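Roughly, “RGB ratio” means the tone curve is applied to a single norm of the pixel and all three channels are scaled by the same factor, so the ratios between channels are kept. The sketch below contrasts that with a naive per-channel curve. The max-RGB norm and the `simple_curve` function are assumptions chosen for illustration; this is not darktable’s implementation.

```python
from typing import Callable, Tuple

RGB = Tuple[float, float, float]


def tone_map_rgb_ratio(rgb: RGB, curve: Callable[[float], float]) -> RGB:
    """Tone-map the max-RGB norm, then scale every channel by the same ratio."""
    norm = max(rgb)
    if norm <= 0.0:
        return (0.0, 0.0, 0.0)
    ratio = curve(norm) / norm
    r, g, b = rgb
    return (r * ratio, g * ratio, b * ratio)


def tone_map_per_channel(rgb: RGB, curve: Callable[[float], float]) -> RGB:
    """Apply the curve to each channel independently (channel ratios can shift)."""
    r, g, b = rgb
    return (curve(r), curve(g), curve(b))


def simple_curve(x: float) -> float:
    """Hypothetical stand-in tone curve mapping [0, inf) into [0, 1)."""
    return x / (1.0 + x)


if __name__ == "__main__":
    pixel = (2.0, 1.0, 0.5)  # a bright, saturated orange in scene-linear RGB
    print("ratio:      ", tone_map_rgb_ratio(pixel, simple_curve))
    print("per channel:", tone_map_per_channel(pixel, simple_curve))
```

With this pixel the per-channel result drifts towards neutral (green/red goes from 0.5 to 0.75), while the ratio version keeps the original proportions and only changes brightness.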

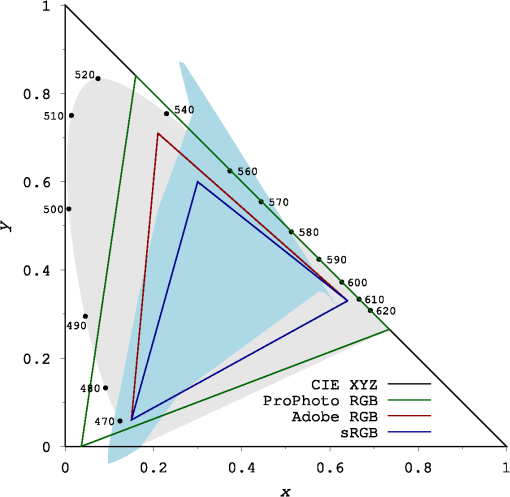

Output colour profile

I have been using HLG P3 as the HDR profile in darktable. This appears to be the profile used by the iPhone, and the built-in apps in macOS deal with it quite well (as expected).

I find darktable behaves strangely when targeting PQ: the black point is raised and the histogram is skewed towards the highlights.
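For reference, the two transfer functions encode light differently. PQ (SMPTE ST 2084, also in BT.2100) maps absolute display luminance in cd/m² to the signal, whereas the HLG OETF maps scene light normalised to [0, 1]. The sketch below uses the published constants to print where a few levels land under each; for instance, PQ puts 203 cd/m² (the BT.2408 HDR reference white) at roughly 0.58 of the signal range, while BT.2408 places the same reference white at 0.75 on an HLG signal. This only illustrates the two encodings; whether it explains darktable’s PQ behaviour is not established here.

```python
import math

# SMPTE ST 2084 / BT.2100 PQ constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

# BT.2100 HLG OETF constants
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def pq_encode(nits: float) -> float:
    """PQ inverse EOTF: absolute luminance in cd/m^2 (0..10000) -> signal in [0, 1]."""
    y = max(nits, 0.0) / 10000.0
    ym = y ** M1
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2


def hlg_oetf(e: float) -> float:
    """BT.2100 HLG OETF: normalised scene light e in [0, 1] -> signal in [0, 1]."""
    if e <= 1 / 12:
        return math.sqrt(3 * e)
    return A * math.log(12 * e - B) + C


if __name__ == "__main__":
    # PQ is absolute: the signal depends only on display luminance.
    for nits in (0.1, 1, 26, 203, 1000):
        print(f"PQ  {nits:>7} cd/m^2 -> {pq_encode(nits):.3f}")
    # HLG is relative: the signal depends on scene light normalised to [0, 1].
    for e in (0.001, 0.01, 0.05, 0.26, 1.0):
        print(f"HLG e={e:<5} -> {hlg_oetf(e):.3f}")
```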

Processing

So far the processing experience has been good. I may still make aggressive adjustments in exposure, tone equaliser, or perceptual brilliance (colour balance rgb), but I haven’t felt the urge to stack modules or use masks, and the adjustments in these modules feel more progressive and smooth: responsive without being twitchy.

My approach is similar to my SDR edits: get the mid-tones where I want them, then adjust the shadows and highlights for the appropriate amount of detail. Since the intent is an HDR export, I have to keep reminding myself not to edit for contrast.

While darktable’s working display is limited to sRGB and SDR on the Mac (and I understand this is a limitation of the GTK3 backend, so it won’t change anytime soon), I find I can gauge the output reasonably well from what I see in the photo rather than from the histogram.

The shadows drop slightly but still retain their detail. The mid-tones stay mid-tones but gain contrast and punch.

The highlights retain their detail and push into HDR (but not too much). If they look too pushed in the working display, they will probably be too pushed and overly bright in the HDR export.

Examples (view in a Chrome-based browser)

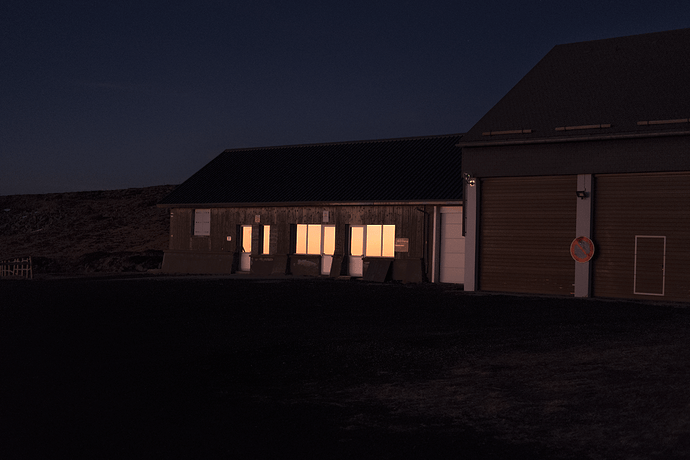

I don’t have many raw files that I feel would look good in HDR, so I used some from the community that I really liked. The JPEG shows what the darktable working display looks like.

Using @gigaturbo - Sunset reflection

https://discuss.pixls.us/uploads/short-url/qdgcI3PREDNX9q6XGWoqiWZgPQj.RAF

DSCF6376_01.RAF.xmp (13.2 KB)

Using @streetfighter - Evening Commute

https://discuss.pixls.us/uploads/short-url/tvaZEDKZ4wYO5piRzSepVBYV7Dm.RAF

20241017_0191.RAF.xmp (15.6 KB)

Using Kristoffer Tolle

DSCF4207.RAF.xmp (13.5 KB)