Thanks @hanatos for your replies!

I tried to compile it, but failed… I guess I’ll just monitor this thread until, one day, something packaged automagically appears for noobs like me.


So you think it is easier to modify local contrast in old darktable so that it works in linear RGB than to backport local contrast from vkdt?

Guess what: after I wrote this comment, I got an encouraging private message from someone stating that he was convinced I could do it.

Sure, it was meant as a compliment to my overestimated intelligence, when I don’t even understand what a Laplacian pyramid is…
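Local laplacian filtering (vkdt’s llap module) is built on Laplacian pyramids. As a rough illustration only, here is a minimal 1D sketch in Python with NumPy: each level stores the detail lost by blurring and downsampling, and the last entry is the coarse residual, so adding the levels back up recovers the input. The 3-tap [1, 2, 1]/4 kernel and all function names are arbitrary choices for this sketch, not vkdt’s implementation.

```python
import numpy as np

def blur(x):
    # 3-tap binomial kernel [1, 2, 1] / 4, edges replicated
    p = np.pad(x, 1, mode="edge")
    return 0.25 * p[:-2] + 0.5 * p[1:-1] + 0.25 * p[2:]

def upsample(x, n):
    # repeat every sample twice, crop to length n, then smooth
    return blur(np.repeat(x, 2)[:n])

def laplacian_pyramid(x, levels):
    cur = np.asarray(x, dtype=float)
    pyr = []
    for _ in range(levels):
        coarse = blur(cur)[::2]                       # blur + downsample
        pyr.append(cur - upsample(coarse, len(cur)))  # detail at this scale
        cur = coarse
    pyr.append(cur)                                   # coarse residual
    return pyr

def reconstruct(pyr):
    # walk back up: upsample the coarser level and add the stored detail
    cur = pyr[-1]
    for detail in reversed(pyr[:-1]):
        cur = upsample(cur, len(detail)) + detail
    return cur
```

A local laplacian filter then manipulates the detail levels (for example, boosting small ones) before reconstructing, which is how it affects several scales at once.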

I did not mean that you should do it. Nevertheless, I am sad that you are not developing old darktable any more.

Still playing with the code, I have some questions and/or difficulties.

black point: yep, there’s no control for that. it should probably be added to the exposure module. however, i also have quite a bit of garbage intertwined in this module, because i wasn’t willing to spend another module on colour transforms and white balancing. we should probably strip exposure down to a real exposure module, so it can be combined with the “draw” module for dodging/burning, and have a separate “colorin” matrix/lut module for the colour part.
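A black point control in an exposure-only module could be as simple as an affine transform on linear RGB. The sketch below is hypothetical (function and parameter names are made up, and it is not vkdt code): it maps `black` to 0 and the input value 2^-ev to 1, and deliberately leaves out white balance and colour matrices, which would live in a separate “colorin”-style module.

```python
import numpy as np

def exposure_black_point(rgb, ev=0.0, black=0.0):
    # hypothetical exposure + black point on linear RGB values:
    # input `black` -> 0.0, input 2**-ev -> 1.0, same affine map per channel
    white = 2.0 ** (-ev)
    scale = 1.0 / (white - black)
    return (np.asarray(rgb, dtype=float) - black) * scale
```

Because it is a pure scale and offset, it stays a linear operation on scene-referred data, which is what makes it easy to combine with a drawn mask for dodging and burning.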

would you have a .cfg or a screenshot of your connectors for this second case? i’d like to reproduce and fix this. possibly the thumbnail graph conversion fails somehow; i wanted to touch this code again anyway (to reuse the cache that already exists in darkroom mode; right now it reprocesses the whole thing, which costs a few ms when exiting darkroom mode).

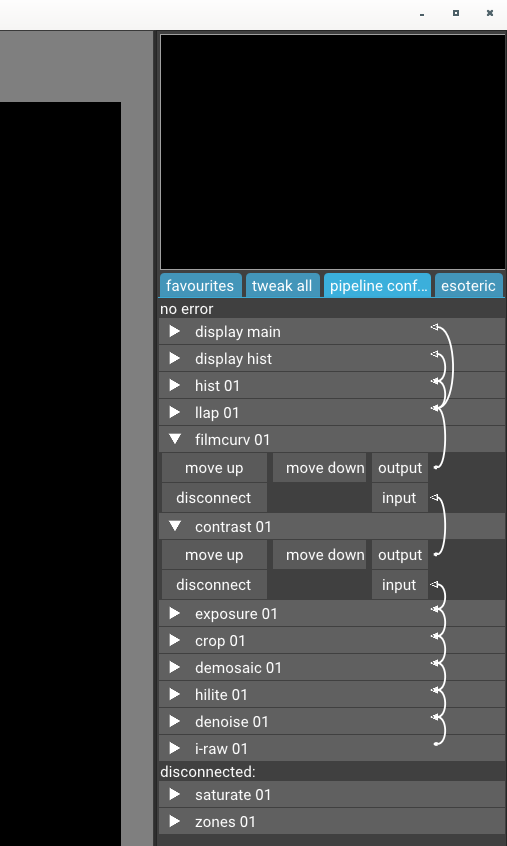

…just tried to reproduce. swapping the llap/contrast and film curve modules works here. are you sure you didn’t accidentally disconnect the display module? if the 1D visualisation in the gui is confusing, there are also `vkdt-cli --dump-modules` and `dot -Tpdf <` to visualise the graph in 2D.

> you sure you didn’t accidentally disconnect the display module?

I don’t think so: I moved it around and it works, but only when placed right before hist and display.

All the other positions work in the darkroom (not always, but I haven’t pinned down the conditions) but produce black thumbnails.

Those which work:

011.NEF.cfg.txt (1.6 KB) 013.NEF.cfg.txt (849 Bytes) 014.NEF.cfg.txt (1.6 KB)

Those which show a black thumbnail:

016.NEF.cfg.txt (1.6 KB) 017.NEF.cfg.txt (1.6 KB) 021.NEF.cfg.txt (1.6 KB) 022.NEF.cfg.txt (1.7 KB)

Here is an example where the darkroom is black.

Starting from this default config, default-darkroom.cfg.txt (1.2 KB), and connecting contrast before filmcurv, I end up with 020.NEF.cfg.txt (1.6 KB) and main.pdf (14.9 KB).

This black darkroom does not happen when contrast is already connected in default-darkroom.cfg.

aaah i see, sorry, i thought you meant the local laplacian. the contrast module is the other one, using the guided filter in a single pass, so it only ever boosts detail at one scale. maybe i changed something in the guided filter code and the module broke. i should probably look into getting it back. until then, contrast is known to be broken…
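To illustrate why a single guided-filter pass only boosts detail at one scale, here is a minimal 1D sketch in Python with NumPy. It assumes a self-guided filter in the style of He et al. (the input is its own guide) with box windows of radius `r`; the detail (input minus filtered base) is scaled by `1 + amount`. `r`, `eps` and `amount` are illustrative parameters, not the contrast module’s actual ones.

```python
import numpy as np

def box(x, r):
    # mean over a window of 2r+1 samples (edges replicated), via cumulative sums
    p = np.pad(x, r, mode="edge")
    c = np.concatenate(([0.0], np.cumsum(p)))
    return (c[2 * r + 1:] - c[:-2 * r - 1]) / (2 * r + 1)

def guided_filter(x, r, eps):
    # self-guided filter: the input is its own guide
    mean = box(x, r)
    var = box(x * x, r) - mean * mean
    a = var / (var + eps)    # ~1 on strong edges, ~0 on weak texture
    b = mean - a * mean
    return box(a, r) * x + box(b, r)

def local_contrast(x, r=8, eps=1e-2, amount=0.5):
    x = np.asarray(x, dtype=float)
    base = guided_filter(x, r, eps)
    # boost detail at the single scale set by r
    return base + (1.0 + amount) * (x - base)
```

Strong edges (local variance well above `eps`) end up in the base and are left alone, while weak structure around the scale of `r` is boosted. That weak structure includes noise, and anything coarser would need a larger radius or several scales, as in a pyramid.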

(okay, this is stupid: there was broken code online. please pull and try again)

Your last commit is still on 8th June…

oops, too many remotes; it should be on github now too.

Works great now. No more black images. Thanks!

What about contrast / radius? Any issue or plans for it?

hm, good catch, it’s not wired. i’m not sure i have many plans for this module at all… anyway, i pushed a change that wires the radius to the slider. there may be one more piece of infrastructure missing to make this useful:

changing the radius of the blur a lot means that the pipeline needs to change (it needs to insert more recursive blur kernels). this means that all memory allocations become invalid, and if such an event happens, the full graph needs to be rebuilt. to this end, the modules should have a callback mechanism that allows them to return runflags when a value is changed, given the old and new values of a certain parameter from the gui. i should try to find some time in the next couple of days to add this (i might remember all the modules that need it). currently the gui assumes no big changes take place when you push a slider (the crop buttons are the only exception).
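A minimal sketch of such a callback, assuming (hypothetically) that each recursive blur pass doubles the blur support, so the number of passes grows with log2 of the radius. The flag names and the `check_params` signature are invented for illustration and are not vkdt’s actual API. The callback compares old and new values and only requests a full graph rebuild when the number of blur nodes would change.

```python
import math
from enum import IntFlag

class RunFlags(IntFlag):
    # hypothetical flag names, for illustration only
    RERUN = 1           # same graph, just process again with the new value
    REBUILD_GRAPH = 2   # node count changed: reallocate buffers, rebuild graph

def blur_levels(radius):
    # hypothetical: each recursive blur pass doubles the support,
    # so a radius of r needs about ceil(log2(r)) passes
    return max(1, math.ceil(math.log2(max(radius, 1.0))))

def check_params(param, old, new):
    # called by the gui with the old and new value of one parameter
    if param == "radius" and blur_levels(old) != blur_levels(new):
        return RunFlags.REBUILD_GRAPH | RunFlags.RERUN
    return RunFlags.RERUN
```

Small slider moves keep the cheap path (rerun with existing buffers); only crossing a power-of-two boundary in the radius pays for a rebuild.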

this api is now pushed. the contrast module has quite a few issues, though. the filter seems to produce weird border artifacts on some images. it is USM with a guided filter, not a real blur, so it mostly sharpens textures and noise. i introduced a “threshold” parameter for it but can’t get good results with it. i might replace it with a regular USM blur (which would also be faster), or do something else clever about it.
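For comparison, a plain unsharp mask with a threshold could look like the 1D sketch below (Python with NumPy). It assumes a Gaussian blur and a hard threshold: detail whose magnitude is at or below `threshold` is dropped, which is one common way to keep USM from amplifying noise. Parameter names and defaults are illustrative, not those of the contrast module.

```python
import math
import numpy as np

def gaussian_kernel(sigma):
    r = max(1, math.ceil(3.0 * sigma))
    t = np.arange(-r, r + 1, dtype=float)
    k = np.exp(-0.5 * (t / sigma) ** 2)
    return k / k.sum()

def usm(x, sigma=2.0, amount=1.0, threshold=0.0):
    x = np.asarray(x, dtype=float)
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    # "valid" convolution of the edge-padded signal keeps the original length
    blurred = np.convolve(np.pad(x, r, mode="edge"), k, mode="valid")
    detail = x - blurred
    # hard threshold: ignore small detail (mostly noise)
    detail = np.where(np.abs(detail) > threshold, detail, 0.0)
    return x + amount * detail
```

A hard threshold can leave visible transitions where detail crosses it; a soft ramp is a common alternative, at the cost of one more parameter.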

sidenote: i introduced an additional parameter, but old history stacks are still valid here, since parameters are explicitly named in the cfg and i didn’t change the meaning of the old values.
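Named parameters are what keep old history stacks valid: a loader looks values up by name and falls back to defaults for anything missing. The sketch below assumes a line format `param:<module>:<instance>:<name>:<value>`; this is an assumption for illustration and the real .cfg syntax may differ. The module name and defaults are made up.

```python
# hypothetical defaults for a module with one newly added parameter
DEFAULTS = {"radius": 1.0, "amount": 0.5, "threshold": 0.0}

def load_params(lines, module="contrast", instance="01"):
    params = dict(DEFAULTS)  # parameters missing from old files keep their default
    for line in lines:
        parts = line.strip().split(":")
        if len(parts) != 5 or parts[0] != "param":
            continue
        _, mod, inst, name, value = parts
        # values are matched by name, so order and newly added names don't matter
        if mod == module and inst == instance and name in params:
            params[name] = float(value)
    return params
```

An old file that predates `threshold` simply gets the default for it, and as long as the meaning of `radius` and `amount` is unchanged, the old values load as before.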

Hello @hanatos

In a previous message in this thread you compared the speed of darktable (updating the thumbnail previews) with your current work on the GPU side. Your code was much faster.

Just out of curiosity, have you tried one of the latest builds of darktable?

In the past weeks there have been a lot of improvements, some of them related to speed. Therefore, darktable should be much faster now.

hi silvio,

no, i did not do a new comparison. you’re referring mostly to the light table rendering speed? i don’t think olden dt can compete on the image processing (for thumbnails, rendering, or export). but light table rendering is a non-issue anyway if you’re doing it in hardware: it takes fractions of a millisecond if you do it directly, vs carrying pixels in 8 bits through obscure channels and various helper libraries… you simply don’t need any of the single-thread tricks.

Hello @hanatos

> you’re referring mostly to the light table rendering speed?

Yep. You posted 2 videos and your code was much faster.

There have been a lot of improvements on this in darktable over the past months, but I suppose your GPU stuff is still faster.

well yes, the videos were about thumbnail creation (so nothing much has changed for dt in that regard). lighttable rendering is pretty much free in vulkan, and it’s great that dt got improvements there.

Interesting: please post a sample.

okay, here are your numbers. i measured the time between two events in dt (the current `darktable -d perf -d lighttable` seems broken in git). typical outputs look like this:

vkdt:

```
[perf] total frame time 0.000s
[perf] ui time 0.000s
[perf] total frame time 0.000s
[perf] ui time 0.000s
[perf] total frame time 0.000s
[perf] ui time 0.000s
[perf] total frame time 0.000s
[perf] ui time 0.000s
[perf] total frame time 0.000s
```

darktable:

```
8.494874 [lighttable] expose took 0.0530 sec
8.537822 [lighttable] expose took 0.0429 sec
8.644052 [lighttable] expose took 0.1062 sec
8.711264 [lighttable] expose took 0.0672 sec
8.741537 [lighttable] expose took 0.0303 sec
8.762014 [lighttable] expose took 0.0205 sec
8.766640 [lighttable] expose took 0.0046 sec
8.826952 [lighttable] expose took 0.0603 sec
8.946266 [lighttable] expose took 0.1193 sec
```

this is on the same directory of raw files, both with precached thumbnails, both fullscreen at 1440p with 6 images per row. but yes, drawing a few pixels in 2D is a non-issue, as i said. nothing very surprising or meaningful here.

in all fairness, the directory contains some regression tests with truncated raws, for which vkdt tries to load the raw/thumbnail and fails. that temporarily pushes frame times up to around 6ms, because it loads the thumbnail in the gui thread (because why not).

that said, i’m not worried about anything under 16ms in this category (one frame at a typical 60Hz screen refresh rate).
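To put the darktable expose times in the same terms as the 16ms budget, here is a quick calculation over the nine values from the log, assuming a 60 Hz display (one frame is 1/60 s, about 16.7 ms). The `summarize` helper is just for this illustration.

```python
# darktable "[lighttable] expose took" values from the log, in seconds
expose_s = [0.0530, 0.0429, 0.1062, 0.0672, 0.0303, 0.0205, 0.0046, 0.0603, 0.1193]

def summarize(times_s, refresh_hz=60.0):
    budget_s = 1.0 / refresh_hz  # one frame at 60 Hz is about 16.7 ms
    mean_ms = 1000.0 * sum(times_s) / len(times_s)
    worst_ms = 1000.0 * max(times_s)
    over = sum(t > budget_s for t in times_s)
    return mean_ms, worst_ms, over

mean_ms, worst_ms, over = summarize(expose_s)
print(f"mean {mean_ms:.1f} ms, worst {worst_ms:.1f} ms, "
      f"{over}/{len(expose_s)} frames over budget")
# prints: mean 56.0 ms, worst 119.3 ms, 8/9 frames over budget
```

The vkdt frame times print as 0.000s, i.e. below the resolution of that log, so there is nothing meaningful to average on that side.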