As said, I am all for a super modular UI. I am tired of the constraints, which is why I use the G’MIC CLI, though it is missing features like raw processing and other photographic goodies. The problem with the CLI is that there is too much deleting, backspacing, typing and typo fixing, plus keeping track of which images and parameter combinations to use. A flexible GUI would make that easier. In sum, a UI that sucks less?

Although I can’t say that it makes things like color management and 10-bit output easy, I will say that QML is fast and smooth, point releases don’t break the API, and it makes hacking on the UI layout really fast and easy.

If there’s a way to embed a Vulkan view into it which would be capable of those, then I would definitely be interested in embedding it into Filmulator (presuming I can duplicate the Filmulator pipeline).

Depends on how many different types of folk you want to have using it. Also, how much time you want to spend doing UI shenanigans.

This is not a sales pitch by any means, but more my personal case study on this: using wxWidgets for rawproc has given me the appropriate balance of leverage and capability while not being too ‘bloaty’. Linux and Windows versions just work the same, almost no questions, 'cept for the occasional font foo that makes things fit differently in window panes. Nice set of cross-platform APIs for things like filename/path normalization, configuration files, etc. And, I just checked, my most recent Linux binary of rawproc is 13 MB, with wxWidgets statically compiled in. I’ve looked at Qt, yes, very ‘bloaty’. But, I’ve already had to write enough UI widgets to know I don’t want to make a career of it…

Regarding node-based pipeline depiction, consider this carefully. If you do envision a true ‘network’ of processing, where you can have either or both n-to-1 input and 1-to-n output situations, a node graph is appropriate. But if the processing pipeline is truly a “pipe”, it’s a lot of UI work for not much benefit. I use a tree control to good effect, but a list may work as well.

Just some ruminating based on one person’s recent experience writing such from scratch…

(How) is it possible to zoom in or out of a photo when I have opened it in vkdt?

there’s no way of scaring you away from using this, is there. there are next to no features, but you cycle through zoom levels via middle mouse button. exit darkroom mode with ‘e’.

that is of course a reference to suckless.org , a philosophy i can agree with. and yes, i think the ui may need layers… to give all the power to people who need it and to hide complexity where possible.

have you tried immediate mode uis? QML sounds bloated to me… a lot like xml.

i really like your thoughts here. maybe a linear list is a good representation. most of the time that’s what it is. if not, it could be the topological sort of the graph, maybe with annotations in form of lines for connecting segments. need to experiment with that a bit to find out whether that’ll cause clutter.
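
To make the "topological sort of the graph" idea concrete, here is a minimal sketch using Kahn's algorithm. The module names and the edge list are made up for illustration (loosely modelled on the default pipeline discussed in this thread), and none of this is taken from the vkdt source. `long_edges` lists the connections that skip over a neighbour in the list, which are the ones that would need an explicit line drawn next to the list.

```python
from collections import deque


def linearise(edges):
    """Kahn's algorithm: order modules so each one comes after all of its inputs."""
    nodes = []
    for src, dst in edges:
        for name in (src, dst):
            if name not in nodes:
                nodes.append(name)
    indegree = {name: 0 for name in nodes}
    successors = {name: [] for name in nodes}
    for src, dst in edges:
        successors[src].append(dst)
        indegree[dst] += 1

    ready = deque(name for name in nodes if indegree[name] == 0)
    order = []
    while ready:
        name = ready.popleft()
        order.append(name)
        for nxt in successors[name]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)
    if len(order) != len(nodes):
        raise ValueError("graph has a cycle, no linear order exists")
    return order


def long_edges(order, edges):
    """Edges whose endpoints are not direct neighbours in the list."""
    position = {name: i for i, name in enumerate(order)}
    return [(s, d) for s, d in edges if position[d] - position[s] != 1]


# Hypothetical module graph: the histogram branches off before the film curve.
EDGES = [
    ("raw", "demosaic"),
    ("demosaic", "filmcurve"),
    ("demosaic", "hist"),
    ("filmcurve", "llap"),
    ("llap", "display"),
]

ORDER = linearise(EDGES)
ANNOTATIONS = long_edges(ORDER, EDGES)
print(ORDER)
print(ANNOTATIONS)
```

With this toy graph only two of the five connections need an annotation line, so clutter stays low as long as the graph is mostly a pipe.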

pretty. are these colours correct or are some of the pinks blown highlights?

@hantos I know the UI/UX designer from Krita, I can certainly ask for his input when the time comes.

The code in QML is very concise with very little boilerplate.

For example, this is the code to draw a 30x30 black rectangle at (10,20) relative to its parent:

    Rectangle {
        id: rectName
        x: 10
        y: 20
        width: 30
        height: 30
        color: "black"
    }

And for something more advanced: if you want to make the color pulse to indicate an invalid input…

    Rectangle {
        id: rectName
        x: 10
        y: 20
        width: 30
        height: 30
        color: "black"
        SequentialAnimation on color {
            running: input.invalid
            loops: Animation.Infinite
            ColorAnimation {
                from: "black"
                to: "#FF8800"
                duration: 1000
            }
            ColorAnimation {
                from: "#FF8800"
                to: "black"
                duration: 1000
            }
        }
    }

There are a few quirks in the API but from memory I think I have only two or so “dirty hacks” in the UI.

If you’re worried about performance, apparently there’s a way to ahead-of-time compile the code instead of having it be JIT compiled.

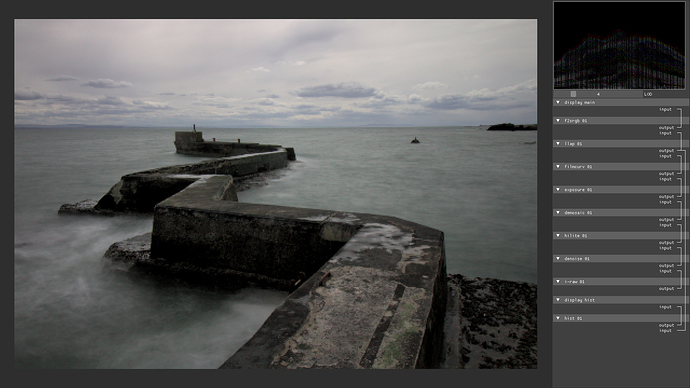

… yes, mostly a list. to illustrate what i mean, here’s a semi-functional mockup:

like this but in pretty, a bit larger, maybe with arrow heads/tails, colours, better indentation of lines etc.

to hide the complexity of the node graph/pipe configuration, one could simply cut off the gui portion to the right. the panels of the modules themselves could be filled with the parameters of the module, or with buttons for pipeline organisation in this mode (add/remove/reorder module).

changing module parameters would be a different mode, as would be the current “favourite” mode which just exposes some options of a selection of modules in a defined order, without explicit module ownership.

the graph shown here is my current default processing pipeline, not very interesting. at least you can see that the histogram shows linear values, not srgb, and that the film curve is applied before the local laplacian pyramid local contrast enhancement.

here’s a second one to illustrate more non linear data flow, at least not all connectors are named “input” or “output” (from examples/draw.cfg):

this one has no histogram wired but features a blend module with a drawn mask as input. by pure luck no wires are crossed here.

closing a module hides the connections:

not sure that’s a good idea (could point to the collapsed header instead) or if it’s even enough to reduce clutter.

anyways. keen to hear any comments.

They’re mostly blown highlights, but I find in this case they are not so disturbing.

Well I was angry because apparently the first time I compiled it was more or less luck/coincidence, so I wanted to repeat it. And it seemed to be a good Question to bring to Debienna. Helmut and I tried to compile it for about 2 hours on Debian stable without success. Apparently it is not easy to compile it on any System but Debian testing/Sid or similar. Helmut said that it is possible to compile it on other Systems but one would Need to work a few hours on it, mostly to find the missing packages. Anyway the fact that the dependency list is very incomplete made a bad Impression on him and he doubted that vkdt was as fast as you Claim and that it will ever be something usable. I mean… you can imagine after 2 hours… LOL. The next day I tried again, I installed at least 100 packages on a clean Ubuntu, and it worked. And I could repeat it a second and a third time, but on Debian stable/MX it did not work.

Why would I be scared? Does vkdt kill? I think you know by now that I am a very curious Person and not one of the People who give up easily.

However, I still see 2 Problems: first, vkdt is not as fast as I expected, it feels a Little slower than old darktable with OpenCL here, and the reason for this is probably the fact that it does not use my Nvidia Card, only the Intel. I know for sure that it does not use the Nvidia Card, it does not start on “optirun ./vkdt…”. But moving around the Region of interest at zoomed view indeed somehow feels different than old darktable, not faster, but different. Second, apparently it does not demosaic my orf’s in full size. I think the first click zooms in to 50%. But on the second click the Picture Looks pixelated, like zoomed to 200 or 400%.

Sorry for the capital letters, Edge autocorrects everything I write…

I am more optimistic than Helmut and I am curious what this will be eventually, although usually I am not an Optimist. so far it Looks like it is getting more and more similar to old darktable. That’s not bad.

Btw: although I do not understand many technical Details it’s really fun to follow this thread… the way you devs compliment each other etc etc. None ranted here so far

Isn’t that better than telling bullshit about other devs’ work? If there is an improvement in dt or RT why should the other side not mention it as an improvement? That’s development and progress imho

debian stable? really? that is an interesting idea that did not occur to me. fwiw i think this kind of gpu pipeline will be ready for mainstream in like 5 years (because everybody will have good enough hardware by then and because it won’t be feature complete before that).

that said, if you have any more detailed logs on what you had to install i’d be happy to update the readme.

let me try to comment on these two:

- i made no effort to pass on any perf improvements to the user. this is not useful software, it’s a dev demo/prototype. it always computes the full image full res starting from the very beginning of the raw pipeline (compared to dt which for a 1080p screen and a 20MP image only ever processes 1MP at a time and only from the currently active module where parameters changed). and re: using the intel card… well that’s terrible. although the mx250 will probably not make it go all that much faster.

- it always computes the full image full res, no matter the zoom level (you can influence it using the LOD slider, however). it does zoom in to, i forget, 800 or 1600%.
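
To put rough numbers on the difference between the two strategies, here is a back-of-the-envelope sketch. It is not code from darktable or vkdt. The image size (5472x3648, about 20MP) and the 1400x800 viewport (what might be left of a 1080p screen after panels) are assumptions picked for illustration.

```python
def pixels_processed(image_w, image_h, view_w, view_h, zoom, full_res=False):
    """Output pixels computed for one redraw.

    zoom is screen pixels per image pixel (1.0 means 100%).
    full_res=True models "always compute the whole image at full resolution".
    """
    if full_res:
        return image_w * image_h
    # Part of the image that is visible, measured in image pixels.
    visible_w = min(image_w, view_w / zoom)
    visible_h = min(image_h, view_h / zoom)
    # That region is rendered at screen resolution only.
    return round(visible_w * zoom) * round(visible_h * zoom)


IMAGE_W, IMAGE_H = 5472, 3648  # assumed ~20MP sensor
VIEW_W, VIEW_H = 1400, 800     # assumed image area on a 1080p screen

fit_zoom = min(VIEW_W / IMAGE_W, VIEW_H / IMAGE_H)
fit = pixels_processed(IMAGE_W, IMAGE_H, VIEW_W, VIEW_H, fit_zoom)
at_100 = pixels_processed(IMAGE_W, IMAGE_H, VIEW_W, VIEW_H, 1.0)
full = pixels_processed(IMAGE_W, IMAGE_H, VIEW_W, VIEW_H, 1.0, full_res=True)
print(fit, at_100, full)
```

Under these assumptions the viewport-limited approach touches roughly 1MP per redraw while the full-resolution approach touches about 20MP every time, independent of zoom.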

Sorry, my mistake. I just re-checked and the first click really zooms in to 100%. Apparently it is misleading because there is just one side panel and so more of the whole image is visible than in other programs.

Meanwhile I solved the dual graphics issue, so vkdt can now use the Nvidia card. Turns out I just had to copy and paste a link to a file. Anyway, it is not faster with Nvidia, in fact the speed seems to be exactly the same with both devices. I think demosaicing a 16 MP raw takes 250-450 ms (depending on how many files there are in the directory? swapping?). I am a bit surprised since with old darktable there is a clear difference between with and without OpenCL. But 250 ms are probably quite fast.

I will make a list of the packages I had to install soon (but probably not today).

Btw, you have an amazing memory for hardware (names), you know better what kind of hardware I have than I.

hmm

    [perf] query demosaic_down: 3.913 ms
    [perf] query demosaic_gauss: 6.260 ms
    [perf] query demosaic_splat: 67.699 ms

this is my 5yo laptop’s intel HD5500 on a 24MP image. your nvidia should really beat that by some significant margin (get the numbers by passing -d perf on the command line).
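
For comparing numbers between machines it can help to sum up the `[perf] query` lines. The following is a small sketch of that. The regular expression is inferred only from the three lines pasted above, so other line shapes that `-d perf` may print are simply ignored.

```python
import re

# Matches lines like "[perf] query demosaic_down: 3.913 ms" (format assumed from the paste).
PERF_LINE = re.compile(r"^\[perf\] query (\w+):\s*([0-9.]+) ms$")


def parse_perf(log_text):
    """Return {query name: milliseconds}, summing repeated names."""
    timings = {}
    for line in log_text.splitlines():
        match = PERF_LINE.match(line.strip())
        if match:
            name, ms = match.group(1), float(match.group(2))
            timings[name] = timings.get(name, 0.0) + ms
    return timings


LOG = """\
[perf] query demosaic_down: 3.913 ms
[perf] query demosaic_gauss: 6.260 ms
[perf] query demosaic_splat: 67.699 ms
"""

TIMINGS = parse_perf(LOG)
TOTAL_MS = sum(TIMINGS.values())
print(f"demosaic total: {TOTAL_MS:.3f} ms")
```

For the three lines above this adds up to about 78 ms, most of it in `demosaic_splat`.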

btw if you run -d qvk, you should get an output similar to

    [qvk] dev 0: Intel(R) HD Graphics 5500 (Broadwell GT2)
    [qvk] max number of allocations -1
    [qvk] max image allocation size 16384 x 16384
    [qvk] max uniform buffer range 134217728
    [ERR] device does not support requested feature shaderStorageImageReadWithoutFormat, trying anyways
    [qvk] picked device 0

helpful if you want to know which device it’s using. very well possible that vulkan doesn’t care about optirun at all. if you post your output that may be enough for me to wire a --qvk-dev <x> option or similar for you so you can force the correct device. also i might be more clever in auto-picking the better device, currently i think it always uses number zero.
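
A sketch of what "more clever" auto-picking could look like, written in Python for brevity and not taken from vkdt. Vulkan reports a device type for every physical device (`VkPhysicalDeviceProperties.deviceType`, for example `VK_PHYSICAL_DEVICE_TYPE_DISCRETE_GPU`). The strings below mirror those names without the prefix. The `Device` record, the ranking, and the `forced_index` parameter (standing in for a possible `--qvk-dev <x>` option) are assumptions for illustration.

```python
from dataclasses import dataclass

# Lower rank wins. Prefer discrete GPUs over integrated ones.
TYPE_RANK = {
    "DISCRETE_GPU": 0,
    "INTEGRATED_GPU": 1,
    "VIRTUAL_GPU": 2,
    "CPU": 3,
    "OTHER": 4,
}


@dataclass
class Device:
    index: int        # enumeration order, as in "[qvk] dev 0: ..."
    name: str
    device_type: str  # one of the TYPE_RANK keys


def pick_device(devices, forced_index=None):
    """Pick the forced device if given, else the best-ranked one (ties: lowest index)."""
    if not devices:
        raise ValueError("no devices found")
    if forced_index is not None:
        for dev in devices:
            if dev.index == forced_index:
                return dev
        raise ValueError(f"no device with index {forced_index}")
    return min(
        devices,
        key=lambda dev: (TYPE_RANK.get(dev.device_type, len(TYPE_RANK)), dev.index),
    )


# Hypothetical laptop with an integrated and a discrete GPU.
DEVICES = [
    Device(0, "Intel(R) HD Graphics 5500 (Broadwell GT2)", "INTEGRATED_GPU"),
    Device(1, "GeForce MX250", "DISCRETE_GPU"),
]
print(pick_device(DEVICES).name)
print(pick_device(DEVICES, forced_index=0).name)
```

Ranking by type alone will not always give the faster device, which is why keeping a manual override next to the automatic choice seems sensible.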

yea, apparently Vulkan does not care about optirun

    optirun ./vkdt -d qvk /media/anna/WINDOWS/Bilder/2019-07-20/P7200083.ORF
    [ERR] module shared has no connectors!
    [gui] vk extension required by SDL2:
    [gui] VK_KHR_surface
    [gui] VK_KHR_xlib_surface
    [qvk] dev 0: Intel(R) HD Graphics (Ice Lake 8x8 GT2)
    [qvk] max number of allocations -1
    [qvk] max image allocation size 16384 x 16384
    [qvk] max uniform buffer range 134217728
    [ERR] device does not support requested feature shaderStorageImageReadWithoutFormat, trying anyways
    [ERR] device does not support requested feature shaderFloat64, trying anyways
    [ERR] device does not support requested feature shaderInt64, trying anyways
    [qvk] dev 1: Intel(R) HD Graphics (Ice Lake 8x8 GT2)
    [qvk] max number of allocations -1
    [qvk] max image allocation size 16384 x 16384
    [qvk] max uniform buffer range 134217728
    [ERR] device does not support requested feature shaderStorageImageReadWithoutFormat, trying anyways
    [ERR] device does not support requested feature shaderFloat64, trying anyways
    [ERR] device does not support requested feature shaderInt64, trying anyways
    [qvk] picked device 0
    [qvk] num queue families: 1
    [qvk] num surface formats: 2
    [qvk] available surface formats:
    [qvk] B8G8R8A8_SRGB
    [qvk] B8G8R8A8_UNORM
    [qvk] colour space: 0
    [perf] [thm] ran graph in 1ms
    [ERR] individual config /media/anna/WINDOWS/Bilder/2019-07-20/P7200083.ORF.cfg not found, loading default!
    [rawspeed] load /media/anna/WINDOWS/Bilder/2019-07-20/P7200083.ORF in 233ms

But I am confused: device 0 and device 1 are both the same? I see no Nvidia device in the output.
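
For anyone who wants to check this quickly on their own machine, here is a small sketch that pulls the device list and the picked device out of the `-d qvk` output. The two patterns are inferred from the lines pasted above and are an assumption about the log format, not something taken from the vkdt source.

```python
import re

DEV_LINE = re.compile(r"^\[qvk\] dev (\d+): (.+)$")
PICK_LINE = re.compile(r"^\[qvk\] picked device (\d+)$")


def parse_qvk(log_text):
    """Return ({device index: name}, picked index or None)."""
    devices = {}
    picked = None
    for line in log_text.splitlines():
        line = line.strip()
        match = DEV_LINE.match(line)
        if match:
            devices[int(match.group(1))] = match.group(2)
            continue
        match = PICK_LINE.match(line)
        if match:
            picked = int(match.group(1))
    return devices, picked


LOG = """\
[qvk] dev 0: Intel(R) HD Graphics (Ice Lake 8x8 GT2)
[qvk] max number of allocations -1
[qvk] dev 1: Intel(R) HD Graphics (Ice Lake 8x8 GT2)
[qvk] max number of allocations -1
[qvk] picked device 0
"""

DEVICES, PICKED = parse_qvk(LOG)
HAS_NVIDIA = any("nvidia" in name.lower() or "geforce" in name.lower()
                 for name in DEVICES.values())
print(DEVICES, PICKED, HAS_NVIDIA)
```

On the output above this reports two devices with identical names, device 0 picked, and no Nvidia entry.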

I will also try that other thing you suggested after shopping.