We should also just embrace the fact that different programs have different tools. I’ve seen the idea that we should “just combine RawTherapee and darktable” too many times during my tenure here, but that isn’t really possible, and it’s nice that different tools work differently and have different features, RapidRAW included. If we had three editors but they all had the same tools… we wouldn’t have three editors, we’d have one editor three times.

If RR were my software, I would try to write an interface compatible with vkdt’s shaders, and maybe even throw away the already-written shader code and replace it with vkdt’s code if possible.

There is potential for synergies that could benefit both projects. All the complicated stuff could be written once and then used on both sides.

@bastibe also showed that it is more or less trivial to convert darktable’s algorithms to shaders with the help of a good LLM.

And then he concluded that this was only one step, and that much more is needed to create a usable application.

Yes, of course. I meant only porting the module algorithms themselves. darktable is a monolith with years of contributions from hundreds of people; it would be a colossal effort to reach feature parity on the UI alone, let alone the pipeline and everything behind it that most users never see.

Plus, with all the work from @hannoschwalm, darktable is only getting faster behind the scenes as time passes.

I’ll be more than happy to help you port some of the shaders from my experimental raw processor to RapidRAW. VKDT’s algorithms are beautifully written GLSL as well.

Dreaming here: it would be amazing to have an image shader repository shared among applications, both with different UIs and for programmatic usage.


VKDT is basically this: the pipeline is 100% separate from the UI.

Thank you all for the information. I will take a look at VKDT in the coming days.

In the meantime, I added support for Fuji X-Trans sensors (RAF files) by implementing an X-Trans demosaicing algorithm: Release RapidRAW v1.3.0 - Fuji RAF X-Trans Support & Small Fixes · CyberTimon/RapidRAW · GitHub

Have fun!

as i understand it wgsl is an additional layer on top of webgpu? so the shader code will not be compatible. vkdt uses glsl 4.6. not sure what the state of webgpu is, but vulkan/glsl allows us to use some of the more interesting gpu features (atomic extensions, tensor cores, raytracing, …). while many of you might not care about ray tracing, atomics are instrumental for fast histograms and some algorithms like bilateral filters, and the tensor cores would enable fast neural denoising for instance.
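To illustrate why atomics matter for histograms: many parallel workers increment the same small set of bins, and without an atomic add those increments would race and get lost. The sketch below is a CPU-side analog in Rust using `std::sync::atomic`, not vkdt's GLSL; the 256-bin 8-bit input, the function name `histogram_atomic`, and the thread split are assumptions made for illustration.

```rust
use std::sync::atomic::{AtomicU32, Ordering};
use std::thread;

/// Build a 256-bin histogram from 8-bit values, splitting the input across
/// `workers` threads that all increment the same shared atomic bins.
/// (CPU analog of a GPU kernel using atomicAdd; illustrative only.)
fn histogram_atomic(pixels: &[u8], workers: usize) -> Vec<u32> {
    assert!(workers > 0, "need at least one worker");
    let bins: Vec<AtomicU32> = (0..256).map(|_| AtomicU32::new(0)).collect();
    // Ceiling division so every pixel lands in some chunk; chunks() needs >= 1.
    let chunk = ((pixels.len() + workers - 1) / workers).max(1);
    thread::scope(|s| {
        for part in pixels.chunks(chunk) {
            let bins = &bins;
            s.spawn(move || {
                for &p in part {
                    // Relaxed is enough: each increment must be atomic, but no
                    // ordering with other memory operations is required.
                    bins[p as usize].fetch_add(1, Ordering::Relaxed);
                }
            });
        }
    });
    // All threads have joined at the end of the scope; unwrap the counters.
    bins.into_iter().map(AtomicU32::into_inner).collect()
}

fn main() {
    let pixels: Vec<u8> = (0..10_000u32).map(|i| (i % 256) as u8).collect();
    let hist = histogram_atomic(&pixels, 4);
    println!("bin 0 = {}, total = {}", hist[0], hist.iter().sum::<u32>());
}
```

The result is identical for any number of workers; on a GPU the same pattern lets thousands of invocations write into one histogram buffer in a single pass.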

the shaders/processing modules in vkdt are very much encapsulated and contain no ui code. but all but the most trivial shaders (say the exposure module) will require some sort of command buffer description that tells the host which kernel to execute in which order and how to do the wiring in between (like the align module). i suppose one could link the lib*.so and call into the host side api functions. the easy way would be to link libvkdt.so and use the full graph processing. this also contains no ui code, but it’s a c library, not rust.
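The host-side description mentioned above boils down to knowing, for each kernel, which other kernels' outputs it reads, and then running them in an order that respects those dependencies. The sketch below shows that idea with a hypothetical `Kernel` type and Kahn's topological sort; it is not vkdt's actual host API, and the kernel names in the example are made up.

```rust
use std::collections::VecDeque;

/// A hypothetical kernel node: a name plus the indices of the nodes whose
/// outputs it reads. Not vkdt's API; it only models "what is wired to what".
struct Kernel {
    name: &'static str,
    inputs: Vec<usize>,
}

/// Return an execution order in which every kernel runs after all of its
/// inputs (Kahn's algorithm), or None if the wiring contains a cycle.
/// Panics if an input index is out of range.
fn schedule(kernels: &[Kernel]) -> Option<Vec<&'static str>> {
    let n = kernels.len();
    let mut indegree = vec![0usize; n];
    let mut consumers: Vec<Vec<usize>> = vec![Vec::new(); n];
    for (i, k) in kernels.iter().enumerate() {
        for &src in &k.inputs {
            indegree[i] += 1;
            consumers[src].push(i);
        }
    }
    // Start with kernels that have no inputs.
    let mut ready: VecDeque<usize> = (0..n).filter(|&i| indegree[i] == 0).collect();
    let mut order = Vec::with_capacity(n);
    while let Some(i) = ready.pop_front() {
        order.push(kernels[i].name);
        for &c in &consumers[i] {
            indegree[c] -= 1;
            if indegree[c] == 0 {
                ready.push_back(c);
            }
        }
    }
    // Any kernel never reaching indegree 0 is part of a cycle.
    if order.len() == n { Some(order) } else { None }
}

fn main() {
    // Hypothetical multi-kernel module: estimate an offset, then warp.
    let graph = vec![
        Kernel { name: "input", inputs: vec![] },
        Kernel { name: "downsample", inputs: vec![0] },
        Kernel { name: "estimate_offset", inputs: vec![0, 1] },
        Kernel { name: "warp", inputs: vec![0, 2] },
        Kernel { name: "output", inputs: vec![3] },
    ];
    println!("{:?}", schedule(&graph));
}
```

A real host would also allocate the intermediate buffers along these edges and record the dispatches into a command buffer; the ordering step is just the part that decides "which kernel when".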

you will not get away with “the shader”, vkdt has 197 compute shader kernels and is almost complete for a few use cases. darktable must have a similar number of opencl kernels, but i can’t count that with a simple find | wc -l.

controlling the processing is one thing, at least we can output rgb buffers in display colour space. the other thing is controlling the display itself. with vulkan it’s kinda easy to put it into hdr mode for instance. within browsers this is more difficult and hard to know what’s going on between browser and display.

one more thing i really like is that my images never leave the gpu. that’s where they are processed and then also displayed directly. most gui toolkits have some way of initing their own vk contexts to share a vkImage, again, no idea about webgpu here.

i agree here. vkdt is pretty much just the engine, most of the ui is auto-generated from some text annotations.

Looking at the Cargo.toml file for the RapidRAW backend, I can see the crate that Timon is using is this one: GitHub - gfx-rs/wgpu: A cross-platform, safe, pure-Rust graphics API. Their README says it supports “shaders in WGSL, SPIR-V, and GLSL”.

I have no idea of the capabilities of that library, though, or whether you can get the same level of control as with the Quake-based Vulkan layer used by vkdt. I’ll admit I only understand the theory of what’s happening, not the nitty-gritty.

@CyberTimon, does the image data move between the GPU and CPU between processing steps, or does it stay in GPU memory once it’s loaded?

The shader code is all in one file, and seems to call all image processing operations in a pipeline from its main function. So it’s all in one go.
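For contrast with vkdt's multi-kernel graphs, a single-file shader that runs every operation from one `main` is effectively a fused per-pixel function. The Rust sketch below mimics that on the CPU; `Params`, the three adjustments, and the mid-grey pivot of 0.5 are assumptions for illustration, not RapidRAW's actual operations.

```rust
/// Hypothetical adjustment parameters; RapidRAW's real shader has its own set.
struct Params {
    exposure_ev: f32,
    wb_gains: [f32; 3],
    contrast: f32,
}

/// Apply every adjustment to one pixel in sequence, the way a single fused
/// shader main() would: no intermediate image is written between steps.
fn process_pixel(rgb: [f32; 3], p: &Params) -> [f32; 3] {
    let gain = p.exposure_ev.exp2(); // exposure in stops: 2^ev
    let mut out = [0.0; 3];
    for c in 0..3 {
        let v = rgb[c] * gain * p.wb_gains[c]; // exposure + white balance
        let v = (v - 0.5) * p.contrast + 0.5; // linear contrast around 0.5
        out[c] = v.clamp(0.0, 1.0); // clip to display range
    }
    out
}

/// Run the fused function over every pixel (one "invocation" per pixel).
fn process_image(pixels: &mut [[f32; 3]], p: &Params) {
    for px in pixels.iter_mut() {
        *px = process_pixel(*px, p);
    }
}

fn main() {
    let params = Params { exposure_ev: 1.0, wb_gains: [1.0, 1.0, 1.0], contrast: 1.0 };
    let mut image = vec![[0.25, 0.25, 0.25], [0.1, 0.2, 0.3]];
    process_image(&mut image, &params);
    println!("{:?}", image);
}
```

Fusing like this avoids writing intermediate images, but operations that need neighbouring pixels or several passes (blurs, alignment) don't fit a pure per-pixel function, which is where a graph of separate kernels comes in.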

Interesting find from another project using Tauri: it seems there is work being done on combining a native app viewport with a WebView. Their goal is to render the graphically intensive parts natively and draw the UI on top of them using the WebView.

Parent issue: Tracking Issue: Desktop application · Issue #2535 · GraphiteEditor/Graphite · GitHub

Could be exactly what you guys are looking for?

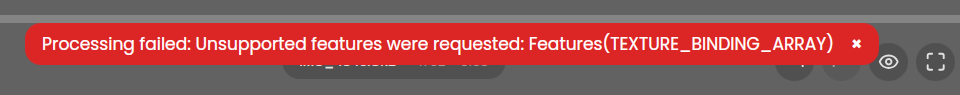

On Ubuntu 22.04, when trying to open a raw file (.cr2 from a Canon 50D), I get this message:

processing failed unsupported feature were requested: features(TEXTURE_BINDING_ARRAY)

CyberTimon, do you plan on releasing a roadmap for RapidRAW?

In general, I am wondering if separating the processing pipeline and the display/GUI wouldn’t be worth a bit of a slowdown, though. Then one could create a client/server model (the server running on the same machine, but also possibly on a different, more powerful one over the network), which would allow working on a puny laptop while offloading the processing to a beefy GPU-equipped desktop, e.g. using ZMQ or something similar.
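A minimal sketch of that split, assuming the client sends only edit parameters and gets back a processed buffer while the server owns the image. Here `std::sync::mpsc` channels and a thread stand in for the network transport (no ZMQ is used), and `Request`, `spawn_server` and `request_render` are hypothetical names.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical request: the client sends parameters, never pixels.
struct Request {
    exposure_ev: f32,
    reply: mpsc::Sender<Vec<f32>>,
}

/// Spawn a "processing server" that owns the image and answers requests.
/// Channels stand in for a socket; a real setup would serialize the
/// request and the response over the network instead.
fn spawn_server(image: Vec<f32>) -> (mpsc::Sender<Request>, thread::JoinHandle<()>) {
    let (tx, rx) = mpsc::channel::<Request>();
    let handle = thread::spawn(move || {
        // The loop ends once every client-side Sender has been dropped.
        for req in rx {
            let gain = req.exposure_ev.exp2();
            let out: Vec<f32> = image.iter().map(|v| v * gain).collect();
            // Ignore send errors: the client may have gone away.
            let _ = req.reply.send(out);
        }
    });
    (tx, handle)
}

/// Client side: send parameters and block until the result arrives.
fn request_render(server: &mpsc::Sender<Request>, exposure_ev: f32) -> Vec<f32> {
    let (reply_tx, reply_rx) = mpsc::channel();
    server
        .send(Request { exposure_ev, reply: reply_tx })
        .expect("server thread has stopped");
    reply_rx.recv().expect("server dropped the request")
}

fn main() {
    let (server, handle) = spawn_server(vec![0.25, 0.5]);
    println!("{:?}", request_render(&server, 1.0));
    drop(server); // closing the channel lets the server loop exit
    handle.join().unwrap();
}
```

Because the processing side needs no UI, swapping the channels for a socket changes only the transport, not the processing code.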

sounds like a nice project, and would be simple to do with a processing library that requires no ui.

this reminds me to do some perf measurements on my telephone (the lowest end device i can imagine i would ever want to work on). in general i feel like performance is way above what’s required for interactive use, more like single digit milliseconds instead of three-digit. for tough cases i’m using adaptive downsampling, where the graph processes a low res image while you are dragging the slider/drawing a brush stroke.
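One way to picture adaptive downsampling: while a slider is being dragged, process a smaller copy of the image, then switch back to full resolution on release. The sketch below assumes a simple box filter and a pixel-count budget; `downsample`, `choose_factor` and the power-of-two policy are hypothetical, not how vkdt actually decides.

```rust
/// Box-downsample a single-channel image by an integer factor, averaging
/// each factor x factor block. Trailing rows/columns that don't fill a
/// whole block are dropped.
fn downsample(src: &[f32], width: usize, height: usize, factor: usize) -> (Vec<f32>, usize, usize) {
    assert!(factor >= 1 && src.len() == width * height);
    let (w, h) = (width / factor, height / factor);
    let mut out = vec![0.0f32; w * h];
    let norm = (factor * factor) as f32;
    for y in 0..h {
        for x in 0..w {
            let mut sum = 0.0;
            for dy in 0..factor {
                let row = (y * factor + dy) * width;
                for dx in 0..factor {
                    sum += src[row + x * factor + dx];
                }
            }
            out[y * w + x] = sum / norm;
        }
    }
    (out, w, h)
}

/// Hypothetical policy: while dragging, double the factor until the pixel
/// count fits `interactive_budget`; otherwise process at full resolution.
fn choose_factor(width: usize, height: usize, dragging: bool, interactive_budget: usize) -> usize {
    if !dragging {
        return 1;
    }
    let mut f = 1;
    while (width / f) * (height / f) > interactive_budget {
        f *= 2;
    }
    f
}

fn main() {
    let (w, h) = (8, 4);
    let image: Vec<f32> = (0..w * h).map(|i| i as f32).collect();
    let factor = choose_factor(w, h, true, 8);
    let (small, sw, sh) = downsample(&image, w, h, factor);
    println!("factor {factor}: {sw}x{sh} -> {:?}", small);
}
```

For a 6000×4000 raw and a one-megapixel budget this picks a factor of 8 while dragging, so the graph only touches about 375k pixels per update.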

video processing has a lag component of course, which might not profit from client/server (but people who do raw video may want to do that on a good desktop anyways).

also i’m always afraid of overengineering and stacks of bloatware layers of course.

vkdt IIRC already has vestiges of processing/UI separation. You can (could?) already run it as a command-line application, specify a network and input/output images and ta-da, raw processing with no GUI, in 18ms for my Z 6 raws, thank you.

@Tamas_Papp, I’ve thought something similar, a processing server and separate client. Really, any software with a command-line interface could be cobbled together into such an arrangement.

My experience is that compartmentalizing into functional units leads to cleaner code.

It’s not the case at all for what you are describing, and I generally agree with the sentiment, but it reminded me of a project I faced when I joined my company.

In this company there was a habit of creating unnecessary layers, just in case you might need some services/interfaces in the future to serve different ends of an application.

This was a back-office application, and developers sometimes needed more than a day to create a simple CRUD back-office page because the boilerplate was so insane. .NET Framework was just pure hell, in my opinion. Microsoft and other big names like Uncle Bob influenced developers to come up with these big architectures without once thinking about the use case.

The modern version of this is using microservices where they aren’t needed.

Sorry for the rant; I just remembered it and needed to let it out.