First of all, thanks for your contribution.

I don’t remember whether I’ve already mentioned it, but anyway: by removing the contribution of the visible image, my goal is to obtain a cleaner IR histogram that I can threshold more easily to separate dust (black spots) and scratches (white streaks) from the background. My first experiments in GIMP showed that it was often difficult to catch all the dust without also selecting parts of the image data that had bled into the IR image.

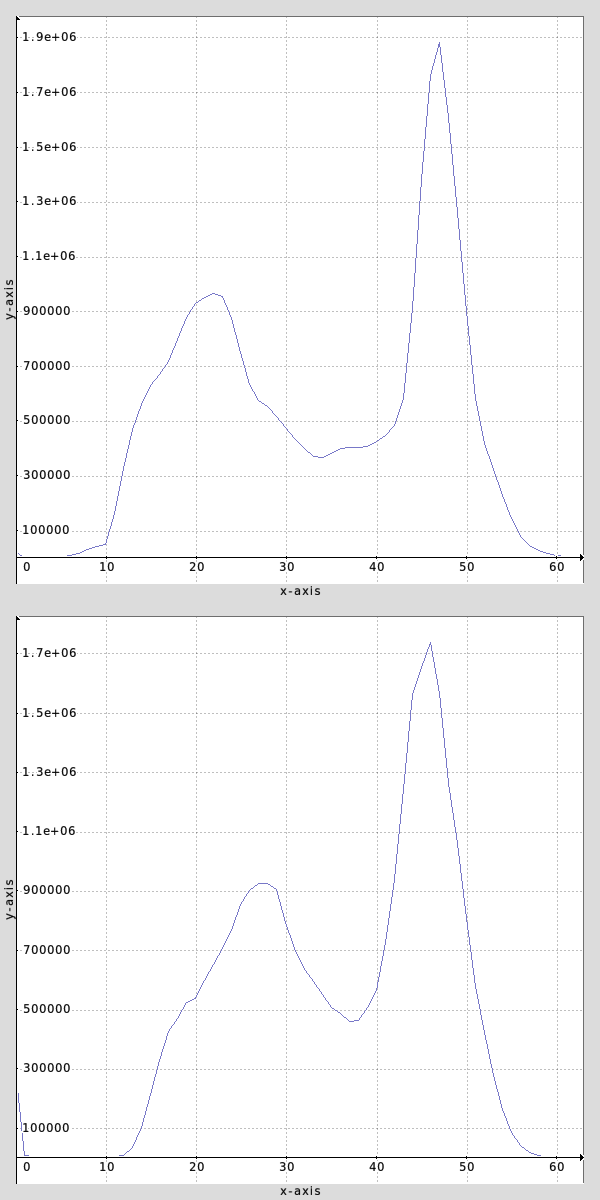

It shows how wide the IR histogram is if left as-is.

So my goal is not to clean it completely; I only need to increase the signal-to-noise ratio so that threshold detection becomes simpler. Keep in mind that I don’t want to do this manually, one by one… I am ready to visually check the dust/scratch masks before inpainting, sure, but manual tuning should be kept to a minimum.
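For the “minimal manual tuning” part, one common option is a robust outlier threshold on the cleaned IR image: estimate the background level with the median and the noise with the median absolute deviation (MAD), then flag pixels more than `k` noise units below it (dust) or above it (scratches). A minimal Python/NumPy sketch, assuming the visible contribution has already been removed; the function name `dust_scratch_masks` and the default `k = 4` are illustrative choices, not tuned values:

```python
import numpy as np


def dust_scratch_masks(ir_residual, k=4.0):
    """Flag outliers in a cleaned IR image without per-image tuning (sketch).

    The median and the MAD are barely moved by the small fraction of
    dust/scratch pixels, so they describe the clean background.
    Returns (dust_mask, scratch_mask): dust is darker, scratches brighter.
    """
    r = np.asarray(ir_residual, dtype=float)
    med = np.median(r)
    mad = np.median(np.abs(r - med))
    # 1.4826 * MAD estimates the standard deviation for Gaussian noise
    sigma = 1.4826 * mad
    if sigma == 0:
        sigma = np.finfo(float).eps  # avoid a zero-width band on flat input
    dust = r < med - k * sigma
    scratches = r > med + k * sigma
    return dust, scratches
```

`k` is the only knob; since it is expressed in noise units rather than raw pixel values, the same value may carry over between scans, but that is an assumption worth checking on real data.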

Subtracting a smoothed copy is an idea that has already been proposed; I just haven’t tested it yet.
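A minimal sketch of that idea (a simple high-pass filter), assuming the IR channel is a 2-D float array: a box blur, computed with an integral image, is subtracted from the original, so slowly varying bleed-through is removed while small dust spots survive. `box_blur`, `high_pass` and the default radius are hypothetical; the radius should be noticeably larger than the biggest dust speck.

```python
import numpy as np


def box_blur(img, radius):
    """Mean over a (2*radius+1)^2 window, edges padded, via 2-D cumulative sums."""
    img = np.asarray(img, dtype=float)
    size = 2 * radius + 1
    p = np.pad(img, radius, mode="edge")
    # Integral image with a leading row/column of zeros: s[a, b] = p[:a, :b].sum()
    s = np.zeros((p.shape[0] + 1, p.shape[1] + 1))
    s[1:, 1:] = p.cumsum(axis=0).cumsum(axis=1)
    h, w = img.shape
    total = (s[size:size + h, size:size + w]
             - s[:h, size:size + w]
             - s[size:size + h, :w]
             + s[:h, :w])
    return total / size**2


def high_pass(ir, radius=15):
    """IR minus its smoothed copy: keeps small, sharp features such as dust."""
    ir = np.asarray(ir, dtype=float)
    return ir - box_blur(ir, radius)
```

A median filter (e.g. `scipy.ndimage.median_filter`) is often preferred over a mean for the smoothed copy, because the dust and scratches themselves pull it less; the box blur here only keeps the sketch NumPy-only.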

Threshold by area? What do you mean: manually? I don’t want to do that.
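If it means the usual connected-component filtering, it could be automated: after thresholding, group the flagged pixels into connected regions and keep or drop each region by its pixel count, e.g. discarding single-pixel noise or regions too large to be dust. A pure-NumPy sketch under that assumption (4-connectivity); `filter_by_area`, `min_area` and `max_area` are hypothetical names:

```python
from collections import deque

import numpy as np


def filter_by_area(mask, min_area=1, max_area=None):
    """Keep only 4-connected regions of a boolean mask whose size is in range."""
    mask = np.asarray(mask, dtype=bool)
    h, w = mask.shape
    seen = np.zeros_like(mask)
    out = np.zeros_like(mask)
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0, x0] or seen[y0, x0]:
                continue
            # Breadth-first flood fill collects one connected region
            region = []
            queue = deque([(y0, x0)])
            seen[y0, x0] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w
                            and mask[ny, nx] and not seen[ny, nx]):
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            area = len(region)
            if area >= min_area and (max_area is None or area <= max_area):
                for y, x in region:
                    out[y, x] = True
    return out
```

On full-size scans `scipy.ndimage.label` does the same labelling much faster; the loop above just keeps the example self-contained.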

Can you elaborate on the “gamma” test you mentioned?

Also, for info: https://www.hpl.hp.com/techreports/2007/HPL-2007-20.pdf (already posted earlier). Given my point clouds, I could use the data to fit the correlation, leaving out the leftmost, non-linear part of the curve. This way I would end up with a very linear correlation that is easy to remove. It will overestimate the contribution in dark areas, but I don’t think that is an issue.
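A minimal sketch of that fit-and-subtract step, assuming `ir` and `vis` are aligned 2-D arrays (`vis` being a single visible channel or luminance) and `vis_min` is a hypothetical cutoff below which the curve is non-linear: fit IR ≈ a·vis + b with `np.polyfit` on the selected pixels only, then subtract the line everywhere.

```python
import numpy as np


def remove_visible_bleed(ir, vis, vis_min):
    """Fit ir = a*vis + b where vis >= vis_min, subtract the line from all pixels.

    Returns (residual, (a, b)). Pixels darker than vis_min are excluded from
    the fit, so the line is extrapolated into the dark region.
    """
    ir = np.asarray(ir, dtype=float)
    vis = np.asarray(vis, dtype=float)
    sel = vis >= vis_min
    # np.polyfit with degree 1 returns [slope, intercept]
    a, b = np.polyfit(vis[sel].ravel(), ir[sel].ravel(), 1)
    residual = ir - (a * vis + b)
    return residual, (a, b)
```

Because the line is extrapolated into the dark region, the residual there is biased (the contribution is overestimated), which is the trade-off described above. Dust pixels also enter the least-squares fit; if they bias it, a robust fit or a second pass that excludes already-flagged pixels may help.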