Update:

I’ve worked hard these last days, trying to improve my CLUT compression algorithm even more. I found a slight improvement, by mixing RBF and PDE reconstruction.

I guess this could interest @hanatos, so here is a brief explanation of the idea:

Observations:

Basically, what I’ve noticed is that some CLUTs are really well compressed with the RBF reconstruction method (Radial Basis Function), and not so well with the PDE-based method.

But it really doesn’t happen very often. It’s only for a few CLUTs (fewer than 10% of the set of 715 CLUTs I have), which probably have an internal structure where splines can fit the data particularly well (so, usually, very smooth CLUTs without discontinuities, etc.). Typically, CLUTs that look like the identity CLUT.

Proposed solution:

So for these CLUTs, RBF is better, and since the number of keypoints needed in this case is quite low (generally fewer than 200), it was a bit of a shame not to use RBF for these particular CLUTs.

My proposal, which seems to work reasonably well, is to mix the RBF and the PDE reconstruction of the CLUT, in order to reconstruct a CLUT from its set of colored keypoints, and use this mixed reconstruction method in the compression algorithm I’ve proposed in the paper we wrote (see first post). I’ve tried different ways of mixing the two approaches, and what worked the best is simply a linear mixing:

\text{CLUT}_{mixed} = \alpha\;\text{CLUT}_{RBF} + (1-\alpha)\;\text{CLUT}_{PDE}

\text{where}~N~\text{is the current number of keypoints that represent the CLUT, and the weight}~\alpha~\text{is defined as:}

\alpha = \max(0,\frac{320-N}{320-8})

so it goes from 1 (when N=8, i.e. at the CLUT initialization) to 0 (when N>=320, which is the limit I’ve set). When this limit is reached, the RBF reconstruction is disabled (it takes a lot of time when the number of keypoints becomes high).
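For readers who prefer code, here is a minimal Python sketch of the linear mixing described above. It is only an illustration, not the actual implementation: the function names `mixing_weight` and `mix_cluts` are made up for this example, and the CLUTs are assumed to be flat lists of floats of the same length (how the RBF and PDE reconstructions themselves are computed is out of scope here).

```python
def mixing_weight(n_keypoints, n_init=8, n_limit=320):
    """Weight alpha given to the RBF reconstruction for N keypoints.

    alpha = max(0, (320 - N) / (320 - 8)): 1 at N = 8, 0 for N >= 320.
    n_init and n_limit are just the two constants of the formula.
    """
    return max(0.0, (n_limit - n_keypoints) / (n_limit - n_init))


def mix_cluts(clut_rbf, clut_pde, n_keypoints):
    """Blend two reconstructions: alpha * RBF + (1 - alpha) * PDE.

    Assumption: both CLUTs are flat sequences of floats with equal length.
    When alpha is 0 the RBF result is not needed, so clut_rbf may be None
    (this mirrors disabling the RBF reconstruction past the limit).
    """
    alpha = mixing_weight(n_keypoints)
    if alpha == 0.0:
        return list(clut_pde)
    return [alpha * r + (1.0 - alpha) * p for r, p in zip(clut_rbf, clut_pde)]
```

For instance, with N=164 the weight is exactly 0.5, so both reconstructions contribute equally, and for any N>=320 the function simply returns the PDE reconstruction without touching the RBF one.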

Results:

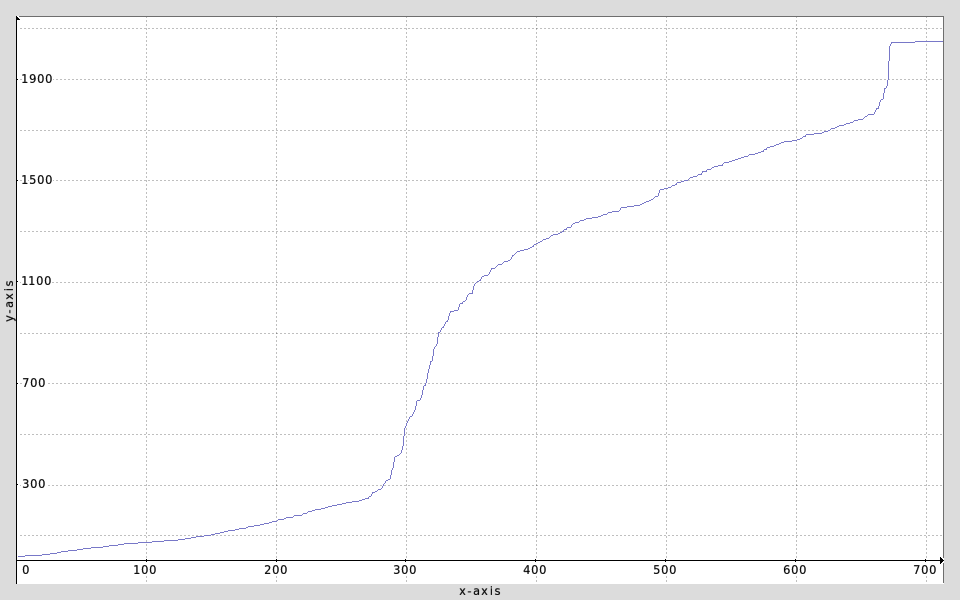

I’ve re-run the compression algorithm for the CLUTs that were using less than 1000 keypoints, and there is a small improvement in the size of the compressed data.

We go from 3115349 bytes to 3019013 bytes, so about 96 KB saved (roughly 3%). That’s not really spectacular, but at least I’ve tried something new.

All the experiments I’ve run made me realize that, on average, there is not much difference in quality between the RBF and PDE reconstructions. Sometimes the former compresses a CLUT better, sometimes it’s the latter. That really depends on the CLUT structure. The big difference is in the reconstruction time: RBF becomes painfully slow when the number of keypoints increases significantly.

That’s all for tonight


like what is the tipping point when the RBF become more expensive or what is their accuracy at same memory usage.
