In fact! dt is taking around three minutes, apparently just doing that.

These log entries are related to 20 images from the same collection, and all of them have local copies.

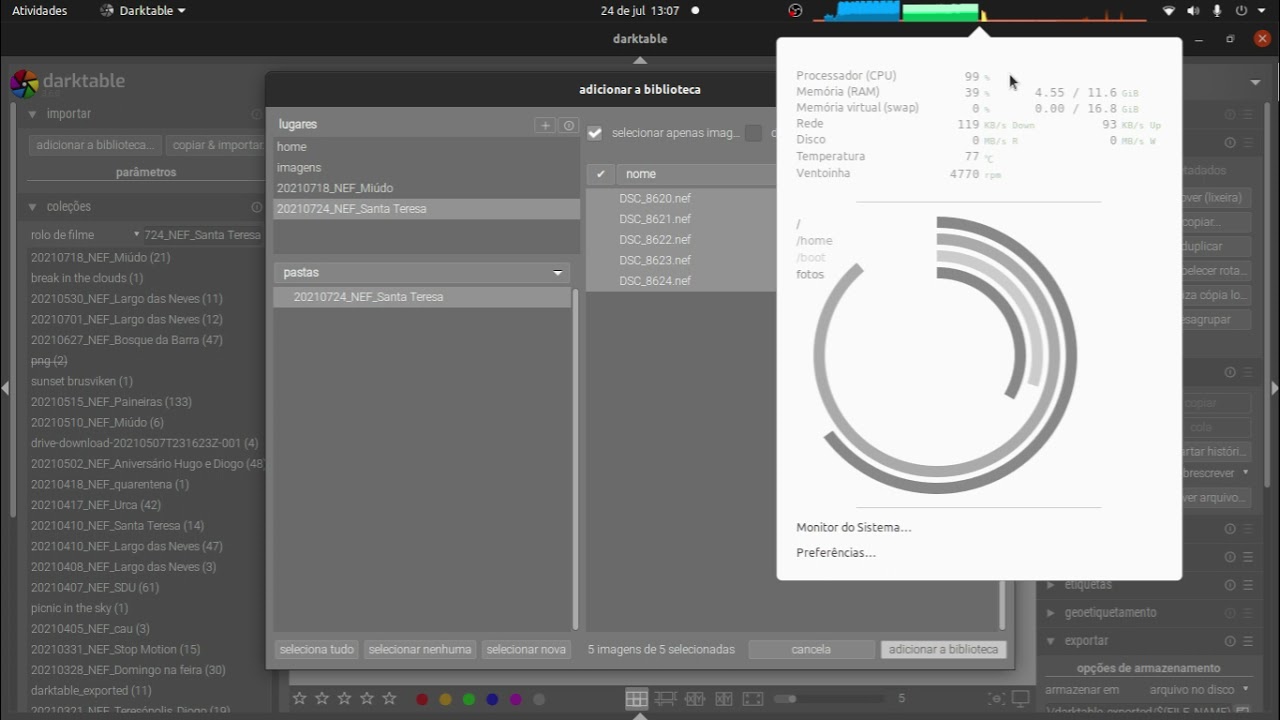

Back into the original database/settings, I synced local copies, removed the 5 nefs from the database, closed it, and restarted it on debug mode.

It seems to have taken more time.

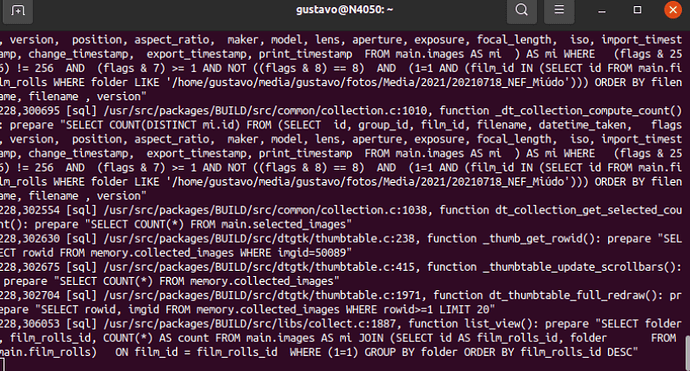

I noticed some offenders:

4,823065 [sql] /usr/src/packages/BUILD/src/libs/collect.c:1887, function list_view(): prepare "SELECT folder, film_rolls_id, COUNT(*) AS count FROM main.images AS mi JOIN (SELECT id AS film_rolls_id, folder FROM main.film_rolls) ON film_id = film_rolls_id WHERE (1=1) GROUP BY folder ORDER BY film_rolls_id DESC"

47,505239 [lib_load_module] loading `copy_history' from /usr/lib/x86_64-linux-gnu/darktable/plugins/lighttable/libcopy_history.so

48,598759 [lighttable] expose took 0,0119 sec

48,672360 [lighttable] expose took 0,0023 sec

48,952859 [lighttable] expose took 0,0033 sec

52,937226 [lighttable] expose took 0,0029 sec

53,252990 [sql] /usr/src/packages/BUILD/src/common/image.c:1662, function dt_images_already_imported(): prepare "SELECT * FROM main.images, main.film_rolls WHERE film_rolls.folder = ?1 AND images.film_id = film_rolls.id AND images.filename = ?2"

53,253772 [sql] /usr/src/packages/BUILD/src/common/image.c:1662, function dt_images_already_imported(): prepare "SELECT * FROM main.images, main.film_rolls WHERE film_rolls.folder = ?1 AND images.film_id = film_rolls.id AND images.filename = ?2"

53,254261 [sql] /usr/src/packages/BUILD/src/common/image.c:1662, function dt_images_already_imported(): prepare "SELECT * FROM main.images, main.film_rolls WHERE film_rolls.folder = ?1 AND images.film_id = film_rolls.id AND images.filename = ?2"

53,255560 [sql] /usr/src/packages/BUILD/src/common/image.c:1662, function dt_images_already_imported(): prepare "SELECT * FROM main.images, main.film_rolls WHERE film_rolls.folder = ?1 AND images.film_id = film_rolls.id AND images.filename = ?2"

53,256841 [sql] /usr/src/packages/BUILD/src/common/image.c:1662, function dt_images_already_imported(): prepare "SELECT * FROM main.images, main.film_rolls WHERE film_rolls.folder = ?1 AND images.film_id = film_rolls.id AND images.filename = ?2"

59,984840 [add_job] 0 | 59,985164 import | queue: 0 | priority: 059,985254

…

62,450221 [sql] /usr/src/packages/BUILD/src/common/history.c:1498, function dt_history_hash_read(): prepare "SELECT basic_hash, auto_hash, current_hash FROM main.history_hash WHERE imgid = ?1"

66,063802 [sql] /usr/src/packages/BUILD/src/common/image.c:2412, function dt_image_write_sidecar_file(): prepare "UPDATE main.images SET write_timestamp = STRFTIME('%s', 'now') WHERE id = ?1"

…

75,941093 [sql] /usr/src/packages/BUILD/src/common/collection.c:172, function dt_collection_memory_update(): prepare "INSERT INTO memory.collected_images (imgid) SELECT DISTINCT mi.id FROM (SELECT id, group_id, film_id, filename, datetime_taken, flags, version, position, aspect_ratio, maker, model, lens, aperture, exposure, focal_length, iso, import_timestamp, change_timestamp, export_timestamp, print_timestamp FROM main.images AS mi ) AS mi WHERE (flags & 256) != 256 AND (1=1 AND (film_id IN (SELECT id FROM main.film_rolls WHERE folder LIKE '/home/gustavo/media/gustavo/fotos/Media/2021/20210724_NEF_Santa Teresa'))) ORDER BY filename, filename , version LIMIT ?1, ?2"

75,943972 [run_job-] 04 336622741,507507 75,944042 import | queue: 0 | priority: 475,944072

83,214949 [sql] /usr/src/packages/BUILD/src/libs/tools/timeline.c:621, function _time_read_bounds_from_collection(): prepare "SELECT db.datetime_taken

…

83,274527 [sql] /usr/src/packages/BUILD/src/libs/collect.c:1887, function list_view(): prepare "SELECT folder, film_rolls_id, COUNT(*) AS count FROM main.images AS mi JOIN (SELECT id AS film_rolls_id, folder FROM main.film_rolls) ON film_id = film_rolls_id WHERE (1=1) GROUP BY folder ORDER BY film_rolls_id DESC"

91,695537 [sql] /usr/src/packages/BUILD/src/libs/tools/timeline.c:621, function _time_read_bounds_from_collection(): prepare "SELECT db.datetime_taken FROM main.images AS db, memory.collected_images AS col WHERE db.id=col.imgid AND LENGTH(db.datetime_taken) = 19 AND db.datetime_taken > '0001:01:01 00:00:00' COLLATE NOCASE ORDER BY db.datetime_taken ASC LIMIT 1"

…

91,703593 [sql] /usr/src/packages/BUILD/src/libs/collect.c:1887, function list_view(): prepare “SELECT folder, film_rolls_id, COUNT() AS count FROM main.images AS mi JOIN (SELECT id AS film_rolls_id, folder FROM main.film_rolls) ON film_id = film_rolls_id WHERE (1=1) GROUP BY folder ORDER BY film_rolls_id DESC"

100,321944 [sql] /usr/src/packages/BUILD/src/libs/tools/timeline.c:621, function _time_read_bounds_from_collection(): prepare “SELECT db.datetime_taken FROM main.images AS db, memory.collected_images AS col WHERE db.id=col.imgid AND LENGTH(db.datetime_taken) = 19 AND db.datetime_taken > ‘0001:01:01 00:00:00’ COLLATE NOCASE ORDER BY db.datetime_taken ASC LIMIT 1”

…

100,330257 [sql] /usr/src/packages/BUILD/src/libs/collect.c:1887, function list_view(): prepare "SELECT folder, film_rolls_id, COUNT() AS count FROM main.images AS mi JOIN (SELECT id AS film_rolls_id, folder FROM main.film_rolls) ON film_id = film_rolls_id WHERE (1=1) GROUP BY folder ORDER BY film_rolls_id DESC”

109,301072 [sql] /usr/src/packages/BUILD/src/libs/tools/timeline.c:621, function _time_read_bounds_from_collection(): prepare “SELECT db.datetime_taken FROM main.images AS db, memory.collected_images AS col WHERE db.id=col.imgid AND LENGTH(db.datetime_taken) = 19 AND db.datetime_taken > ‘0001:01:01 00:00:00’ COLLATE NOCASE ORDER BY db.datetime_taken ASC LIMIT 1”

…

109,313256 [sql] /usr/src/packages/BUILD/src/libs/collect.c:1887, function list_view(): prepare “SELECT folder, film_rolls_id, COUNT(*) AS count FROM main.images AS mi JOIN (SELECT id AS film_rolls_id, folder FROM main.film_rolls) ON film_id = film_rolls_id WHERE (1=1) GROUP BY folder ORDER BY film_rolls_id DESC”

118,554028 [sql] /usr/src/packages/BUILD/src/libs/tools/timeline.c:621, function _time_read_bounds_from_collection(): prepare “SELECT db.datetime_taken FROM main.images AS db, memory.collected_images AS col WHERE db.id=col.imgid AND LENGTH(db.datetime_taken) = 19 AND db.datetime_taken > ‘0001:01:01 00:00:00’ COLLATE NOCASE ORDER BY db.datetime_taken ASC LIMIT 1”

… and so on up to 222,534556

During these longer database transactions, there’s no significant network or cpu activity.

Anyway, thanks for helping me investigate it. I feel more confident analyzing the log now, although I lack the knowledge to understand each step. But, at least, I’m able to identify offenders by looking at the timestamp hops.

Attached, the log from this last session.

dt_debug2.txt (657.6 KB)