Took the time to experiment a bit on the topic. I was especially interested in why both Guided Laplacians and the vkdt implementation showed signs of a magenta cast even for smoothly varying regions where only the green channel was clipped.

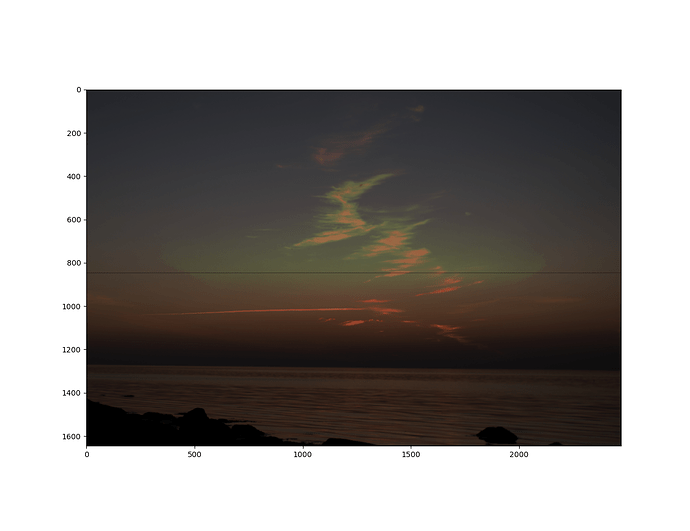

My hypothesis is that they both transfer the linear gradient from the lower, unclipped channel to the higher, clipped channel without correcting for the magnitude of the signal. I made a simple example using Poisson image reconstruction in one dimension to show the effect. I assume constant color and only a change in exposure (more on that later):

The linear gradient transfer correctly reproduces the shape of the lower channel, but it ends up much lower than the actual signal it is supposed to reconstruct. Transferring the logarithmic gradient instead reconstructs exactly the same signal as the ground truth when there is no noise. With added uncorrelated noise, the result is the same apart from small deviations.

Assume that the higher, clipped channel is green. Linear gradient transfer would then lead to an increasing magenta cast towards the middle of the clipped region, while logarithmic gradient transfer would return an almost perfect result with no color cast.
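For anyone who wants to poke at the effect, here is a minimal 1D sketch of the comparison described above in plain Python (standard library only). It is a reconstruction under made-up parameters, not the code behind the graph: the exposure profile (a Gaussian bump on a small pedestal), the factors a = 0.5 and b = 1.0, the clip level 0.8 and the helper names `solve_tridiagonal` and `poisson_fill_1d` are all assumptions for illustration. The clipped run is filled by solving the discrete 1D Poisson equation u'' = guide'' with Dirichlet boundaries taken from the first unclipped green pixels on either side, once with red as the guide (linear transfer) and once with log(red) as the guide for log(green) (logarithmic transfer).

```python
import math


def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm for a tridiagonal system; lower[0] and upper[-1] are ignored."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0] if n > 1 else 0.0
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / m if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / m
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def poisson_fill_1d(target, guide, lo, hi):
    """Replace target[lo..hi] by solving u'' = guide'' (second differences)
    with Dirichlet boundaries target[lo - 1] and target[hi + 1]."""
    n = hi - lo + 1
    rhs = [guide[i - 1] - 2.0 * guide[i] + guide[i + 1] for i in range(lo, hi + 1)]
    rhs[0] -= target[lo - 1]    # move the known left boundary to the right-hand side
    rhs[-1] -= target[hi + 1]   # same for the right boundary
    filled = solve_tridiagonal([1.0] * n, [-2.0] * n, [1.0] * n, rhs)
    out = list(target)
    out[lo:hi + 1] = filled
    return out


# Made-up test signal: constant colour, only the exposure f(x) varies.
N = 101
f = [0.05 + math.exp(-((i / (N - 1) - 0.5) / 0.15) ** 2) for i in range(N)]
a, b, clip = 0.5, 1.0, 0.8           # red factor, green factor, clip level (assumed)
red = [a * v for v in f]             # stays below the clip level
green_true = [b * v for v in f]
green = [min(v, clip) for v in green_true]

clipped = [i for i, v in enumerate(green) if v >= clip]
lo, hi = clipped[0], clipped[-1]     # a single contiguous clipped run in this example

# Linear gradient transfer: green'' := red''
green_lin = poisson_fill_1d(green, red, lo, hi)

# Logarithmic gradient transfer: (log green)'' := (log red)'', then exponentiate
log_green = poisson_fill_1d([math.log(v) for v in green], [math.log(v) for v in red], lo, hi)
green_log = [math.exp(v) for v in log_green]

err_lin = max(abs(u - t) for u, t in zip(green_lin, green_true))
err_log = max(abs(u - t) for u, t in zip(green_log, green_true))
print(f"max error, linear transfer: {err_lin:.4f}")
print(f"max error, log transfer:    {err_log:.2e}")
```

With these made-up numbers the linear transfer lands roughly 0.15 below the true green peak of 1.05, i.e. green comes out too low relative to red, which in an image shows up as the magenta cast. The log transfer matches the ground truth up to floating-point rounding.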

Why is this the case?

Let us assume that our color is constant within the clipped region, i.e. the RGB values all follow the same exposure function, each scaled by its own constant factor, so the RGB ratios are constant.

So, given an exposure function:

$$
\text{Exposure} = f(x) \text{ or } f(i, j)
$$

Then each RGB value will be that function times a per-channel factor, plus uncorrelated noise:

$$
\begin{aligned}
R &= a \cdot f(x) + n_R \\
G &= b \cdot f(x) + n_G \\
B &= c \cdot f(x) + n_B
\end{aligned}
$$

It becomes more interesting when we look at the gradient (let's assume there is no noise for now):

$$
\begin{aligned}
\frac{dR}{dx} &= a \frac{d}{dx} f(x) \\
\frac{dG}{dx} &= b \frac{d}{dx} f(x) \\
\frac{dB}{dx} &= c \frac{d}{dx} f(x)
\end{aligned}
$$
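A quick numerical check of the gradient equations above, as a plain Python sketch. The exposure profile and the factors a, b and c are arbitrary assumptions, and there is no noise, matching the derivation. The forward differences of G and B come out as b/a and c/a times those of R, so copying the red gradient straight into green would be off by the factor b/a.

```python
import math


def exposure(x):
    """Made-up smooth exposure profile f(x); any positive function would do."""
    return 0.05 + math.exp(-((x - 0.5) / 0.15) ** 2)


def forward_diff(values):
    """Discrete gradient: values[i + 1] - values[i]."""
    return [values[i + 1] - values[i] for i in range(len(values) - 1)]


a, b, c = 0.5, 1.0, 0.3            # assumed per-channel factors (constant colour)
xs = [i / 100 for i in range(101)]
R = [a * exposure(x) for x in xs]  # noise-free, as in the derivation
G = [b * exposure(x) for x in xs]
B = [c * exposure(x) for x in xs]

dR, dG, dB = forward_diff(R), forward_diff(G), forward_diff(B)

# Ratios of the gradients, skipping samples where the red gradient is ~0
green_over_red = [g / r for g, r in zip(dG, dR) if abs(r) > 1e-12]
blue_over_red = [q / r for q, r in zip(dB, dR) if abs(r) > 1e-12]
print(min(green_over_red), max(green_over_red))   # both close to b / a
print(min(blue_over_red), max(blue_over_red))     # both close to c / a
```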

So if green is clipped and we want to transfer the gradient from red, we can't simply do

$$
\frac{dG}{dx} = \frac{dR}{dx}
$$

since the two aren't equal (unless a = b). We could, however, do

$$
\frac{dG}{dx} = \frac{b}{a}\frac{dR}{dx} = \frac{b}{a} \, a \frac{d}{dx} f(x) = b \frac{d}{dx} f(x)
$$

But b (or rather the ratio b/a) is not known and would need to be estimated or solved for in some way. The special case a = b = c occurs whenever the color happens to be white, hence the usual need to white balance the image before highlight reconstruction (or before demosaicing, for that matter).
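To make "estimate b" concrete in the simplest possible setting, here is a plain Python sketch. The estimator is a hypothetical one chosen for illustration, not taken from any real implementation: it averages the green/red ratio at the last unclipped pixel on each side of the clipped run. The red gradient scaled by that ratio is then integrated from the left edge of the run, which is a one-boundary simplification of a proper Poisson solve. The exposure profile, factors and clip level are made up. Under the constant-colour assumption the estimated ratio equals b/a and the reconstruction is exact, while forcing the ratio to 1 (i.e. assuming a = b, as for white) reproduces the undershoot.

```python
import math


def exposure(x):
    """Made-up smooth exposure profile f(x)."""
    return 0.05 + math.exp(-((x - 0.5) / 0.15) ** 2)


def transfer_scaled_gradient(green, red, lo, hi, ratio):
    """Fill green[lo..hi] by integrating ratio * (red gradient) from green[lo - 1]."""
    out = list(green)
    for i in range(lo, hi + 1):
        out[i] = out[i - 1] + ratio * (red[i] - red[i - 1])
    return out


a, b, clip = 0.5, 1.0, 0.8          # assumed factors and clip level; b is "unknown" below
xs = [i / 100 for i in range(101)]
red = [a * exposure(x) for x in xs]
green_true = [b * exposure(x) for x in xs]
green = [min(v, clip) for v in green_true]

clipped = [i for i, v in enumerate(green) if v >= clip]
lo, hi = clipped[0], clipped[-1]

# Hypothetical estimator of b / a: average G/R just outside the clipped run
ratio_est = 0.5 * (green[lo - 1] / red[lo - 1] + green[hi + 1] / red[hi + 1])

scaled = transfer_scaled_gradient(green, red, lo, hi, ratio_est)
unscaled = transfer_scaled_gradient(green, red, lo, hi, 1.0)   # assumes a == b

err_scaled = max(abs(u - t) for u, t in zip(scaled, green_true))
err_unscaled = max(abs(u - t) for u, t in zip(unscaled, green_true))
print(ratio_est, err_scaled, err_unscaled)
```

The catch is that a neighbourhood ratio like this is only trustworthy while the constant-colour assumption holds across the clipped region.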

An alternative approach, as already hinted at above, is to first take the logarithm (again, let's ignore noise for now):

$$
\begin{aligned}
\log R &= \log a + \log f(x) \\
\log G &= \log b + \log f(x) \\
\log B &= \log c + \log f(x)
\end{aligned}
$$

and then differentiate. Remember that constants such as log(a) have a slope of zero:

$$
\begin{aligned}
\frac{d}{dx} \log R &= \frac{d}{dx} \log f(x) \\
\frac{d}{dx} \log G &= \frac{d}{dx} \log f(x) \\
\frac{d}{dx} \log B &= \frac{d}{dx} \log f(x)
\end{aligned}
$$

All channels now have the same gradient! This means that the gradient of one channel can be dropped in as a replacement for another's, without any need for white balancing or adjusting for color (the differences between a, b and c). And it does indeed work, as the presented graph shows!
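In 1D the log-domain transfer has a particularly simple discrete form: adding the red log-gradient log R[i] - log R[i-1] to log G[i-1] and exponentiating is the same as multiplying G[i-1] by R[i]/R[i-1]. The plain Python sketch below uses a made-up exposure profile and factors, and integrates from the left edge of the clipped run instead of doing a full Poisson solve. It only needs the red channel and the last unclipped green value, with no estimate of a or b, and still reproduces the clipped green values.

```python
import math


def exposure(x):
    """Made-up smooth exposure profile f(x); must stay positive for the log."""
    return 0.05 + math.exp(-((x - 0.5) / 0.15) ** 2)


def transfer_log_gradient(target, guide, lo, hi):
    """Fill target[lo..hi] by integrating the log-gradient of guide from target[lo - 1].

    exp(log t[i-1] + log g[i] - log g[i-1]) == t[i-1] * g[i] / g[i-1]
    """
    out = list(target)
    for i in range(lo, hi + 1):
        out[i] = out[i - 1] * math.exp(math.log(guide[i]) - math.log(guide[i - 1]))
    return out


a, b, clip = 0.5, 1.0, 0.8      # assumed; neither a nor b is used by the transfer itself
xs = [i / 100 for i in range(101)]
red = [a * exposure(x) for x in xs]
green_true = [b * exposure(x) for x in xs]
green = [min(v, clip) for v in green_true]

clipped = [i for i, v in enumerate(green) if v >= clip]
lo, hi = clipped[0], clipped[-1]

green_rec = transfer_log_gradient(green, red, lo, hi)
err = max(abs(u - t) for u, t in zip(green_rec, green_true))
print(err)
```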

Note that values close to zero, where the SNR is low, will yield extremely large log gradients, so there would probably be a need for some sort of noise floor or noise reduction if this were to be used for real in a highlight reconstruction method.
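A minimal sketch of the noise-floor idea in plain Python: values are clamped to a floor before taking the logarithm, so the log stays defined even when noise pushes a sample to zero or below, and no log-gradient can exceed log(max / floor). The ramp, the noise level (sigma = 0.01), the floor of 0.03 (roughly three sigma) and the helper name `log_gradient` are made-up choices for illustration. A real method would need to tune this, or combine it with proper noise reduction.

```python
import math
import random


def log_gradient(values, floor):
    """Forward differences of log(max(v, floor)).

    `floor` is a hypothetical noise floor; without it, samples <= 0 would make
    math.log raise, and samples just above 0 would produce huge gradients.
    """
    logs = [math.log(max(v, floor)) for v in values]
    return [logs[i + 1] - logs[i] for i in range(len(logs) - 1)]


rng = random.Random(1)                                    # fixed seed for repeatability
signal = [0.001 + 0.999 * i / 199 for i in range(200)]    # made-up ramp starting near zero
noisy = [s + rng.gauss(0.0, 0.01) for s in signal]        # uncorrelated noise, sigma = 0.01

grad_tiny = log_gradient(noisy, 1e-9)    # effectively "no floor"
grad_floor = log_gradient(noisy, 0.03)   # floor at roughly 3 sigma

print(max(abs(g) for g in grad_tiny), max(abs(g) for g in grad_floor))
```

Clamping can only shrink each log-gradient, never grow it, so the floored version is always at least as well behaved. The price is that genuine detail below the floor is flattened.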

Anyway, hope people find this little observation interesting.

PS

Poisson image editing as an approach to highlight reconstruction is another interesting topic to dive deeper into in a later post. It enables clone-tool-like transfer of gradients from one part of an image to another, as well as placing “color seeds” to force a specific color where the only-exposure-changes-locally assumption fails. I can recommend this simple guide by Eric Arnebäck for anyone who wants to get familiar with the method:

https://erkaman.github.io/posts/poisson_blending.html

The downside is that you need to solve a potentially very large (though sparse) linear system, in effect a matrix inverse, which is probably too slow for darktable. That said, there are iterative methods and approximations that could trade accuracy for speed.
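As an illustration of the iterative route, here is a plain Python Gauss-Seidel sketch of the 2D Poisson fill, run in the log domain as discussed above. Everything here is an assumption for illustration: the synthetic 2D exposure, the factors, the clip level, the helper name `gauss_seidel_poisson_fill`, and the requirement that the clipped mask does not touch the image border. The iteration count is the accuracy-vs-speed knob: a handful of sweeps leaves a clear error, while a few hundred converge on this small example. A practical implementation would more likely use something faster, such as multigrid or conjugate gradients on vectorised or GPU code.

```python
import math


def gauss_seidel_poisson_fill(target, guide, mask, iterations):
    """Solve laplacian(u) = laplacian(guide) on pixels where mask is True,
    keeping every other pixel of target fixed (Dirichlet boundary).
    Assumes the mask does not touch the image border."""
    h, w = len(target), len(target[0])
    u = [row[:] for row in target]
    for _ in range(iterations):
        for i in range(1, h - 1):
            for j in range(1, w - 1):
                if not mask[i][j]:
                    continue
                lap_guide = (guide[i - 1][j] + guide[i + 1][j] + guide[i][j - 1]
                             + guide[i][j + 1] - 4.0 * guide[i][j])
                neighbours = u[i - 1][j] + u[i + 1][j] + u[i][j - 1] + u[i][j + 1]
                u[i][j] = (neighbours - lap_guide) / 4.0   # in-place update = Gauss-Seidel
    return u


# Made-up 2D test image with constant colour: only the exposure varies.
N = 32


def exposure(y, x):
    return 0.05 + math.exp(-((x - 0.5) ** 2 + (y - 0.5) ** 2) / 0.25 ** 2)


a, b, clip = 0.5, 1.0, 0.8
coords = [k / (N - 1) for k in range(N)]
red = [[a * exposure(y, x) for x in coords] for y in coords]
green_true = [[b * exposure(y, x) for x in coords] for y in coords]
green = [[min(v, clip) for v in row] for row in green_true]
mask = [[v >= clip for v in row] for row in green]

log_red = [[math.log(v) for v in row] for row in red]
log_green = [[math.log(v) for v in row] for row in green]


def max_error(iterations):
    """Largest absolute error of the reconstructed (linear) green channel."""
    filled = gauss_seidel_poisson_fill(log_green, log_red, mask, iterations)
    return max(abs(math.exp(u) - t)
               for row_u, row_t in zip(filled, green_true)
               for u, t in zip(row_u, row_t))


err_fast, err_slow = max_error(5), max_error(300)
print(err_fast, err_slow)
```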

DS