I have to wonder whether there is an algorithm in which color regions merge with neighboring regions based on their distance from the blurred edge: the color farthest from the edge is used as the patch color, and as soon as a region meets the skeleton of the blurred edge, it is treated as one patch. That would be perfect for this. I noticed that even my solution has the problem @grosgood and @David_Tschumperle observed, albeit to a lesser extent. Honestly, this seems like research-paper material.
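
To make the idea concrete, here is a rough Python sketch rather than G'MIC. It is not an existing algorithm or anyone's implementation, and it assumes a boolean mask of blurred-edge pixels is already available. The names `distance_to_edge` and `patch_colors` are made up for illustration. Each region between edges is flood-filled, and the color of its pixel farthest from any edge becomes the patch color.

```python
from collections import deque

NEIGHBORS = ((-1, 0), (1, 0), (0, -1), (0, 1))  # 4-connectivity (an assumption)

def distance_to_edge(edge):
    """Multi-source BFS: step count from every pixel to its nearest edge pixel."""
    h, w = len(edge), len(edge[0])
    dist = [[0 if edge[y][x] else None for x in range(w)] for y in range(h)]
    queue = deque((y, x) for y in range(h) for x in range(w) if edge[y][x])
    while queue:
        y, x = queue.popleft()
        for dy, dx in NEIGHBORS:
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and dist[ny][nx] is None:
                dist[ny][nx] = dist[y][x] + 1
                queue.append((ny, nx))
    return dist

def patch_colors(image, edge):
    """Paint each non-edge region with the color of its pixel farthest from an edge."""
    h, w = len(edge), len(edge[0])
    dist = distance_to_edge(edge)
    out = [row[:] for row in image]          # edge pixels keep their original color
    seen = [[False] * w for _ in range(h)]
    for sy in range(h):
        for sx in range(w):
            if edge[sy][sx] or seen[sy][sx]:
                continue
            # Flood-fill one region bounded by edge pixels.
            region, stack = [], [(sy, sx)]
            seen[sy][sx] = True
            while stack:
                y, x = stack.pop()
                region.append((y, x))
                for dy, dx in NEIGHBORS:
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w
                            and not edge[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            # "or 0" covers the case where the mask contains no edge at all.
            by, bx = max(region, key=lambda p: dist[p[0]][p[1]] or 0)
            for y, x in region:
                out[y][x] = image[by][bx]
    return out
```

On a one-row gradient with a single edge pixel in the middle, each side collapses to the color at its far end, which is the behavior described above. How to get a clean edge mask in the first place is the hard part and is not addressed by this sketch.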

I tested my code on David’s image, and it didn’t work out. Still just as bad.

EDIT:

The following code allows me to detect blurred edges:

    rep_norm_difference:
      skip ${1=3}
      number_of_images={$!}
      # Force an odd window size.
      radius={r=int(abs($1));!(r&1)?++r;r;}
      # Build the list of (dx,dy) offsets covering the window.
      {vector(#2,$radius)},1,2,"begin(
          const center_pos=w>>1;
        );
        [x-center_pos,y-center_pos];
      "
      # Reshape the offsets into a dynamic array and drop the center offset (0,0).
      resize. 1,{whd},1,100%,-1 ({h})
      append[-2,-1] y
      eval da_remove(#-1,da_size(#-1)>>1);da_freeze(#-1);
      # For each pixel, sum the color distance to every neighbor in the window.
      repeat $number_of_images {
        {w#0},{h#0},1,1,"
          const radius=$radius;
          const number_of_offset=radius*radius-1;
          const offset_image_position=$number_of_images;
          distance=0;
          current_color=I#0;
          repeat(number_of_offset,ind_pos,
            distance+=norm(current_color-J(#0,I[#offset_image_position,ind_pos],0,3));
          );
          distance;
        "
        remove[0]
      }
      remove[0]
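
For readers who don't use G'MIC, the same measure can be sketched in plain Python. This is my reading of the script, not a verified port: for every pixel, sum the Euclidean color distance to each neighbor in an odd-sized square window. The mirrored boundary handling is an assumption about what the boundary argument `3` does, and `norm_difference` and `mirror` are illustrative names.

```python
import math

def mirror(i, n):
    """Reflect an out-of-range index back into [0, n) (assumed boundary mode)."""
    i %= 2 * n
    return i if i < n else 2 * n - 1 - i

def norm_difference(image, radius=3):
    """image: rows of color tuples. Returns rows of summed color distances."""
    radius = abs(int(radius))
    if radius % 2 == 0:
        radius += 1                      # force an odd window, like the script
    c = radius // 2
    # Every offset in the window except the center itself.
    offsets = [(dx, dy)
               for dy in range(-c, c + 1)
               for dx in range(-c, c + 1)
               if (dx, dy) != (0, 0)]
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            current = image[y][x]
            total = 0.0
            for dx, dy in offsets:
                neighbor = image[mirror(y + dy, h)][mirror(x + dx, w)]
                total += math.dist(current, neighbor)
            out[y][x] = total
    return out
```

Flat areas give 0, and the value rises on both sides of a color transition, so thresholding this map is one possible way to get an edge mask. The cost grows with the square of the window size.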

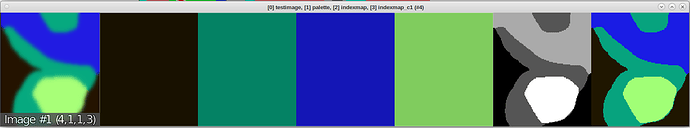

Results here:

So, in conjunction with inpaint, label, and shape_average, plus the technique I used earlier, I would consider this solved.

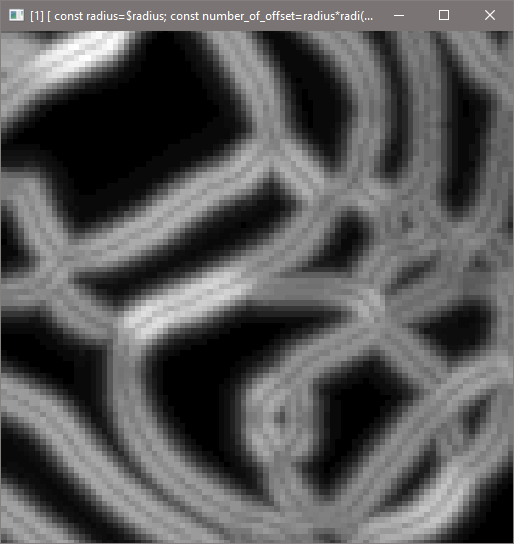

Also, modifying the above code seem to reveal a presence of skeleton:

If one can get that skeleton and label the inside areas, then this thread can be solved.

So, I think it is definitely possible. Computationally intensive, though.
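
As a rough illustration of the "get the skeleton, then label the inside areas" step, here is a small Python sketch. It assumes the skeleton is already available as a boolean mask, and it only imitates the general idea of labeling followed by per-region averaging. It is not the G'MIC `label` or `shape_average` implementation, and the function names are hypothetical.

```python
def label_regions(skeleton):
    """Give each 4-connected non-skeleton region its own label (1, 2, ...)."""
    h, w = len(skeleton), len(skeleton[0])
    labels = [[0] * w for _ in range(h)]     # 0 marks skeleton pixels
    count = 0
    for sy in range(h):
        for sx in range(w):
            if skeleton[sy][sx] or labels[sy][sx]:
                continue
            count += 1
            labels[sy][sx] = count
            stack = [(sy, sx)]
            while stack:
                y, x = stack.pop()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and not skeleton[ny][nx] and not labels[ny][nx]):
                        labels[ny][nx] = count
                        stack.append((ny, nx))
    return labels, count

def average_per_label(image, labels, count):
    """Replace every labeled pixel with the mean color of its region."""
    channels = len(image[0][0])
    sums = [[0.0] * channels for _ in range(count + 1)]
    sizes = [0] * (count + 1)
    for y, row in enumerate(labels):
        for x, lab in enumerate(row):
            if lab:
                sizes[lab] += 1
                for c in range(channels):
                    sums[lab][c] += image[y][x][c]
    means = [tuple(s / sizes[lab] for s in sums[lab]) if sizes[lab] else None
             for lab in range(count + 1)]
    # Skeleton pixels (label 0) are left untouched; filling them would be a
    # separate inpainting step.
    return [[means[lab] if lab else image[y][x]
             for x, lab in enumerate(row)]
            for y, row in enumerate(labels)]
```

Labeling and averaging are each a single pass over the image, so the expensive part is likely extracting a clean, closed skeleton rather than this step.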