So I have a new laptop that I’m preparing for darktable now. OK, it’s not a brand new one, since my budget was somewhat limited. It’s an ASUS Zenbook Pro 14 with some not-too-bad components inside, which makes me look forward to getting a usable RAW workflow ready.

- Intel i5-8265U Whiskey Lake, 8GB RAM

- GeForce GTX 1050 (Max-Q) with 4GB of GDDR5

- Intel UHD 620, well OK

- 512G SSD WDC PC SN520 SDAPNUW-512G-1002

I’m currently using R-Darktable, which I built on this laptop under Windows 11 following Aurélien’s description exactly. Basically, that build works fine.

But here comes the problem that has me really stuck. When it came to performance tuning (by default, the UHD was used, not the GeForce), I followed the instructions from the darktable docs and read tons of very good posts here on the forum (thanks, by the way, to all involved) to get my setup tuned.

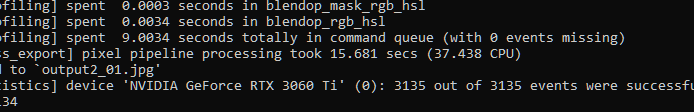

At the moment, I’m really a little lost. With the current parameter settings I get very acceptable performance (given the system hardware) when exporting RAW to JPEG at full resolution from the lighttable. During export, the GeForce GPU is mostly near 100%, and at no time is the CPU forced to take over the job. Well done.

Quite happy at this point, I opened the RAW in the darkroom for the first time. The GUI shows the picture, but also a “working” message that never goes away. In the log (using darktable -d perf -d opencl) I see the same line repeated over and over:

[histogram] took 0,000 secs (0,000 CPU) scope draw

but there is no further hint that would help me get unstuck. So I’m asking here whether anyone can help me figure out what else I need to tune in the configuration. Any hints are appreciated, and feel free to tell me which information you would need to get more insight. I didn’t want to put everything I have into this initial post, so as not to overload it.
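In case it helps with reading the log, here is a minimal sketch of how the repeating lines could be collapsed so that anything else in between stands out. It is only an illustration, not something from the darktable docs: the function name collapse_repeats is made up, and it simply assumes that repeats look like the histogram line above, differing at most in their timing numbers.

```python
import re
from itertools import groupby

# Matches timing values such as "0,000" or "0.000", so that lines which
# differ only in their timings are still treated as repeats.
_NUMBER = re.compile(r"\d+[.,]\d+")


def collapse_repeats(lines):
    """Collapse consecutive log lines that are identical apart from timings.

    Hypothetical helper: blank lines are dropped, and each run of repeats
    is reduced to its first line plus a repeat count.
    """
    cleaned = (line.rstrip("\n") for line in lines)
    non_empty = (line for line in cleaned if line.strip())
    result = []
    # groupby only merges *consecutive* lines with the same normalised key.
    for _, group in groupby(non_empty, key=lambda l: _NUMBER.sub("#", l)):
        group = list(group)
        suffix = f"  (x{len(group)})" if len(group) > 1 else ""
        result.append(group[0] + suffix)
    return result
```

It could be fed a saved copy of the log, for example `collapse_repeats(open("darktable-log.txt", encoding="utf-8", errors="replace"))`, where the file name is just a placeholder for wherever the output was saved.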

Thanks to all in advance - have a great and hopefully peaceful 1st Advent (if applicable to you), bye

Lars.

and no problem, it’s all just OSes
