HLG is a weird one because it wants to be a hybrid. I don’t know exactly how it works. I think it’s basically SDR, just with a different gamma curve to handle highlights or something?
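For what it’s worth, the HLG transfer curve published in ITU-R BT.2100 does look like that hybrid: a square-root (gamma-like) segment for the lower part of the range and a logarithmic segment for the highlights. A minimal sketch of that curve, with function and variable names of my own choosing:

```python
import math

# Constants of the HLG OETF as published in ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A                   # about 0.28466892
C = 0.5 - A * math.log(4 * A)   # about 0.55991073


def hlg_oetf(e: float) -> float:
    """Map normalized scene-linear light e in [0, 1] to an HLG signal in [0, 1]."""
    if e <= 1 / 12:
        # Lower segment: a plain square root, which behaves like an SDR gamma curve.
        return math.sqrt(3 * e)
    # Upper segment: logarithmic, so highlights are compressed instead of clipped.
    return A * math.log(12 * e - B) + C


if __name__ == "__main__":
    for e in (0.0, 0.01, 1 / 12, 0.25, 0.5, 1.0):
        print(f"linear {e:.4f} -> signal {hlg_oetf(e):.4f}")
```

The two segments meet at a signal value of 0.5, so the lower half of the signal range is the ‘SDR-like’ part and the upper half is reserved for highlights.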

HDR10 and the like do basically contain a curve that maps to cd/m2 or other ‘real world brightness information’. That’s also the reason why, when viewing HDR content, you will see your brightness control being ‘taken over’: you are looking at something your display is trying to do justice with all its capabilities, so it will crank the brightness.

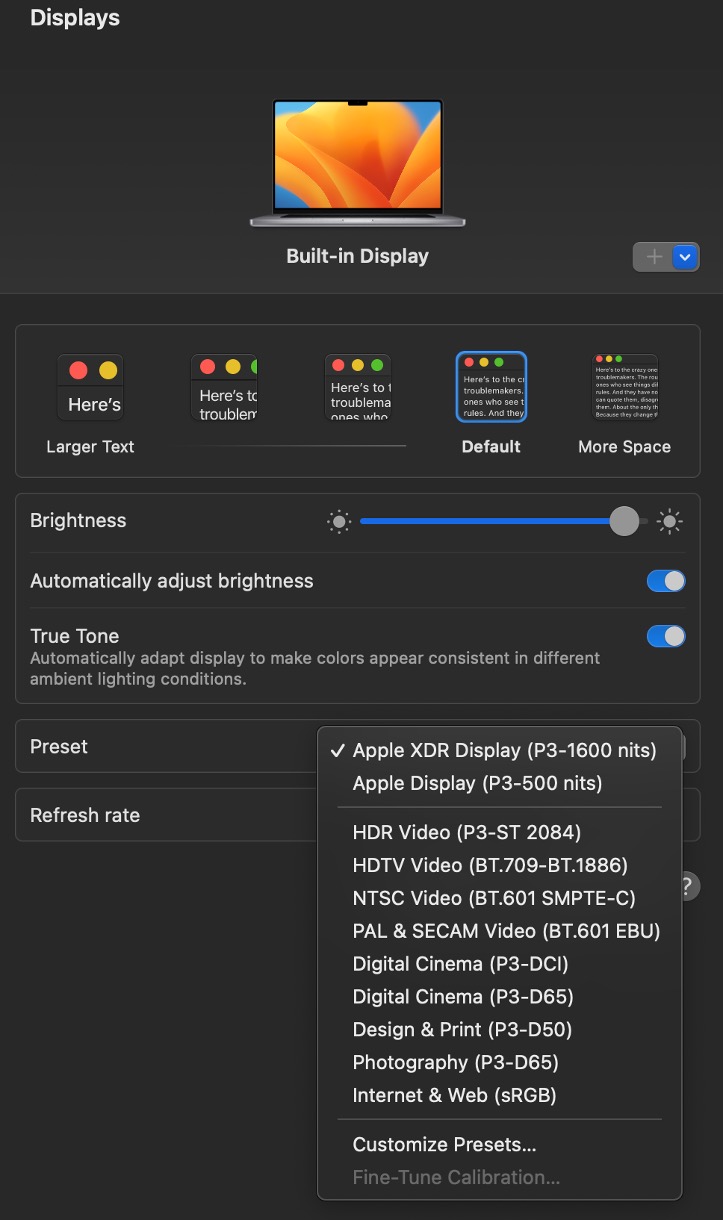
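To make the ‘curve that maps to cd/m2’ a bit more concrete: HDR10 uses the PQ curve (SMPTE ST 2084), which ties each signal value to an absolute luminance of up to 10000 cd/m2. A minimal sketch of that mapping and its inverse, using the constants from the standard; the function names are mine:

```python
# Constants of the PQ curve as published in SMPTE ST 2084 / ITU-R BT.2100.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_to_nits(signal: float) -> float:
    """Decode a normalized PQ signal in [0, 1] to absolute luminance in cd/m2."""
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def nits_to_pq(nits: float) -> float:
    """Encode absolute luminance in cd/m2 (0 to 10000) as a normalized PQ signal."""
    y = (nits / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


if __name__ == "__main__":
    for nits in (0.1, 1, 100, 500, 1000, 10000):
        print(f"{nits:>7} cd/m2 -> PQ signal {nits_to_pq(nits):.4f}")
```

The point is that the signal value alone already says ‘this pixel is meant to be N cd/m2’, without needing any metadata.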

Still, every screen is different, so there is always some sort of tone-mapping happening, but it’s now happening on your screen instead of earlier in the pipe (realistically it’s now happening everywhere, of course; it’s not so black-n-white). Your screen knows it can only do 500 nits, for instance, but it will decode the signal (picture / movie) and know ‘this pixel is actually 1000 nits’. Now, how that screen is going to handle a 1000 nit area, knowing it can only do 500 nits, is up to your screen. That’s also why you get good HDR experiences and bad HDR experiences, depending on the screen’s capabilities but also on the screen’s software :).
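As an illustration of why ‘it’s up to your screen’ matters, here are two made-up strategies a 500 nit screen could use for that 1000 nit pixel. Neither is what any particular display actually does; the function names, the knee position and the roll-off shape are all assumptions for the sake of the example:

```python
import math


def hard_clip(nits: float, display_peak: float) -> float:
    """Naive strategy: anything brighter than the panel becomes the panel maximum."""
    return min(nits, display_peak)


def soft_rolloff(nits: float, display_peak: float, knee: float = 0.75) -> float:
    """Hypothetical strategy: pass values below the knee through untouched and
    compress everything above it so that it approaches, but never exceeds, the peak."""
    knee_nits = knee * display_peak
    if nits <= knee_nits:
        return nits
    headroom = display_peak - knee_nits
    return knee_nits + headroom * (1 - math.exp(-(nits - knee_nits) / headroom))


if __name__ == "__main__":
    for nits in (100, 400, 1000, 2000):
        print(nits, hard_clip(nits, 500), round(soft_rolloff(nits, 500), 2))
```

With the hard clip, a 1000 nit and a 2000 nit highlight end up identical, so all detail in that area is gone. With the roll-off they stay different, at the cost of everything above the knee being displayed darker than intended.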

The ‘absolute brightness’ I am talking about is not always in the metadata; it’s also in the tone curves used in each HDR standard. It almost always comes down to the same thing: the movie player now knows something about the intended brightness of that pixel. In the SDR world, on the other hand, we only know ‘100%’, which actually tells us very little.

The metadata of some formats helps the screens do better tone-mapping, by also telling ‘the movie player’ what kind of brightness it can expect. Sometimes that is static for a whole movie, sometimes it is dynamic, embedded in the stream. But it still stands that the player now knows something about the absolute intended brightness, and needs to do some tone-mapping itself (taking into account the hardware capabilities of the screen in question).
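A rough sketch of how static metadata can help: HDR10 can carry a MaxCLL value (the brightest pixel in the whole movie, in cd/m2). If the player knows that, it can pick a curve that maps exactly that value to the panel maximum instead of guessing. The curve below is the well-known extended Reinhard operator, chosen only because it is short; real players and TVs use their own (usually undisclosed) curves, and the class and function names here are invented:

```python
from dataclasses import dataclass


@dataclass
class StaticMetadata:
    max_cll: float  # brightest pixel in the whole movie, in cd/m2


def tonemap_with_metadata(nits: float, meta: StaticMetadata, display_peak: float) -> float:
    """Map a pixel's intended luminance to what the display will show."""
    if meta.max_cll <= display_peak:
        # The movie never exceeds what the panel can do: show it as intended.
        return nits
    # Extended Reinhard, normalized so that max_cll lands exactly on display_peak.
    l = nits / display_peak
    l_white = meta.max_cll / display_peak
    return display_peak * l * (1 + l / l_white ** 2) / (1 + l)


if __name__ == "__main__":
    for max_cll in (400, 1000, 4000):
        meta = StaticMetadata(max_cll=max_cll)
        shown = tonemap_with_metadata(min(1000, max_cll), meta, display_peak=500)
        print(f"MaxCLL {max_cll}: brightest tested pixel shown at {shown:.1f} cd/m2")
```

The same pixel gets compressed differently depending on what else is in the movie, which is exactly the kind of decision the metadata makes possible. Dynamic metadata does the same thing per scene or per frame instead of once per movie.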

This is why AP started thinking about filmic and scene-referred.

If you tone-map early in the pipeline (like all other tools are doing), you are basically choosing to limit the image to a certain absolute brightness and committing to an SDR output right there. You then add modules / algorithms to tune up that image after it.

If you then want to make an HDR version of it, you basically have to redo the entire edit.

By doing the tone-mapping as the last step, and doing all other edits on ‘real world brightness information’ (simply explained), you can simply tweak the tone-mapping for another target device without having to do the edit again, while preserving the same look.
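A toy version of that idea, to show the structure rather than anything darktable actually does: the edit works on scene-linear values (0.18 = middle grey), and only the final `render` step knows about the target display. The curve, the `grey_nits` default and all names are assumptions of mine, not the filmic module:

```python
def edit(scene_pixels: list[float], exposure_ev: float) -> list[float]:
    """The 'edit': here just an exposure change on scene-linear data."""
    gain = 2.0 ** exposure_ev
    return [p * gain for p in scene_pixels]


def render(scene_pixels: list[float], peak_nits: float, grey_nits: float = 18.0) -> list[float]:
    """Last step: tone-map scene-linear data for one target display.

    Uses a simple x / (x + a) curve, with a chosen so that middle grey (0.18)
    lands on grey_nits and nothing ever exceeds peak_nits.
    """
    a = 0.18 * (peak_nits / grey_nits - 1.0)
    return [peak_nits * p / (p + a) for p in scene_pixels]


if __name__ == "__main__":
    scene = [0.01, 0.09, 0.5, 4.0]        # scene-linear, unbounded at the top
    edited = edit(scene, exposure_ev=1.0)  # done once

    sdr = render(edited, peak_nits=100.0)   # only this line changes per target
    hdr = render(edited, peak_nits=1000.0)
    print("SDR:", [round(v, 1) for v in sdr])
    print("HDR:", [round(v, 1) for v in hdr])
```

Middle grey ends up at the same brightness on both targets, so the overall look is anchored, while the HDR render simply has more room for the highlights. The edit itself is never redone.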

Since there is no HDR output yet, this is all just theoretical. That’s also why, as kmilos is saying, Darktable in the end still thinks in terms of 0% and 100%, and the target display cd/m2 value is a bit of a weird one right now. It controls the tone-mapping target, but I guess its real-world usage will change once there is an HDR output pipe.