Recently I’ve been investigating the lens correction parameters which are embedded in Sony ARW files. I have had some success with understanding the distortion correction parameters and the vignetting correction parameters. Both define simple splines which give the amount of distortion/vignetting at 16 equi-spaced knots going from the center to the edge of the frame. (See Sony ARW distortion correction, Stannum for further details on the distortion coefficients.)
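To make the knot description concrete, here is a minimal sketch of evaluating such a curve at an arbitrary radius. It assumes the first knot sits at the centre, the last at the edge of the frame, and that linear interpolation between knots is good enough. The function name `eval_knots` and the knot values are made up for illustration; they are not taken from any file.

```python
def eval_knots(knots, r):
    """Linearly interpolate equi-spaced knot values at normalised radius r.

    Assumption: knots[0] is the frame centre (r = 0) and knots[-1] is the
    edge of the frame (r = 1). The real knot placement may differ.
    """
    if not 0.0 <= r <= 1.0:
        raise ValueError("r must lie in [0, 1]")
    pos = r * (len(knots) - 1)            # fractional knot index
    i = min(int(pos), len(knots) - 2)     # left knot, clamped so i + 1 is valid
    t = pos - i                           # blend weight towards the right knot
    return (1.0 - t) * knots[i] + t * knots[i + 1]


# Placeholder data only: 16 invented knot values, NOT real ARW parameters.
example_knots = [-(k * k) for k in range(16)]

print(eval_knots(example_knots, 0.0))   # value at the centre
print(eval_knots(example_knots, 0.5))   # value half-way out
print(eval_knots(example_knots, 1.0))   # value at the edge
```

How the raw integers map to physical units (scale factors, offsets) is a separate question that this sketch does not address.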

However, the chromatic aberration correction parameters have me stumped. According to exiftool, there are 32 such coefficients, each of which is a 16-bit integer. Examples of these coefficients, all extracted from photos taken with a Sony FE 50mm F1.8 lens, are:

- F22, 30m focus:

  0 128 256 384 384 384 256 256 256 256 128 128 0 0 -128 -384 0 -256 -384 -512 -512 -512 -512 -384 -384 -256 -256 -128 -128 0 128 256

- F7.1, 35m focus:

  0 128 256 256 384 384 384 256 256 256 128 128 0 -128 -256 -384 0 -128 -256 -384 -384 -384 -384 -384 -384 -256 -256 -128 0 128 256 384

- F1.8, 1m focus:

  -384 -128 0 128 128 0 -128 0 0 0 0 0 0 0 0 0 128 -128 -256 -384 -384 -256 -256 -256 -256 -256 -256 -128 -128 0 0 0

- F2, 0.6m focus:

  128 0 0 0 0 0 0 0 0 0 0 0 128 128 128 0 0 -128 -256 -384 -512 -384 -384 -384 -384 -256 -256 -256 -256 -128 -128 0

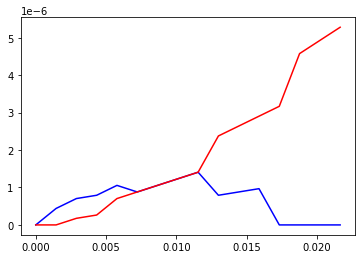

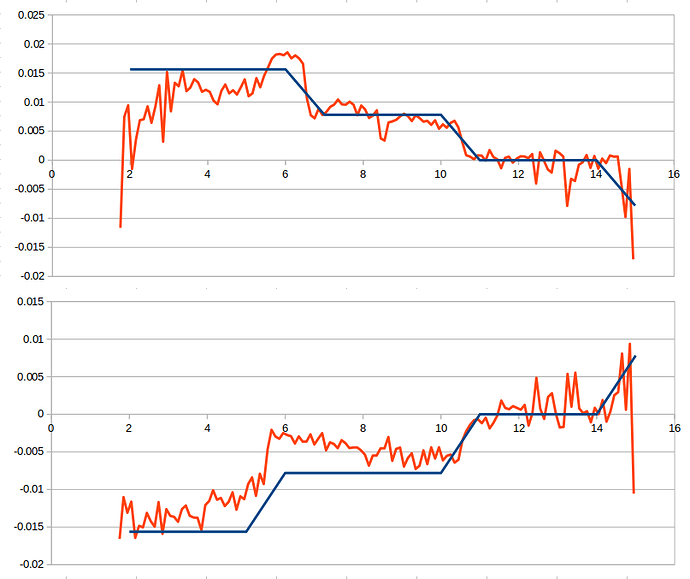

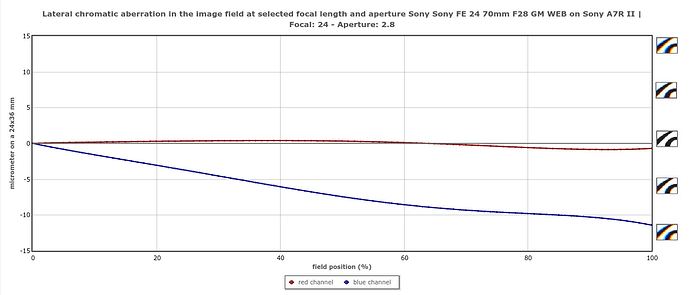

Initially, I thought that these coefficients might define a pair of splines (either concatenated one after the other, or with their coefficients interlaced), one describing the shift in the red channel and the other the shift in the blue channel. However, inspecting the coefficients quickly throws cold water on this: the resulting curves do not look sensible. The idea is also undermined by the long runs of zeros, which likely rule out a PCM-type interpretation. I’ve also toyed with the idea of cumulative sums, although the resulting curves did not appear to be physical.
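For anyone wanting to repeat these experiments, the three interpretations can be sketched roughly as follows, using the F22, 30m focus coefficients quoted earlier. The helper names are my own, and which half (or which interlaced set) would correspond to red or blue is pure guesswork.

```python
from itertools import accumulate

# The 32 coefficients from the F22, 30m focus sample.
coeffs = [
    0, 128, 256, 384, 384, 384, 256, 256, 256, 256, 128, 128, 0, 0, -128, -384,
    0, -256, -384, -512, -512, -512, -512, -384, -384, -256, -256, -128, -128, 0, 128, 256,
]


def split_concatenated(values):
    """Interpretation 1: two 16-knot curves stored one after the other."""
    half = len(values) // 2
    return values[:half], values[half:]


def split_interlaced(values):
    """Interpretation 2: two 16-knot curves with alternating samples."""
    return values[0::2], values[1::2]


def running_sum(values):
    """Interpretation 3: values are deltas, so the curve is their cumulative sum."""
    return list(accumulate(values))


first, second = split_concatenated(coeffs)
even, odd = split_interlaced(coeffs)

print("concatenated:", first, second)
print("interlaced:  ", even, odd)
print("cumulative:  ", running_sum(first), running_sum(second))
```

Plotting each candidate pair against knot index for several apertures and focus distances makes it easy to judge whether the curves look like plausible lateral CA shifts.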

I’ve also considered the possibility that they might be monomial coefficients for a polynomial; however, such a polynomial would be of exceptionally high order. Even the Adobe CA model, as described in http://download.macromedia.com/pub/labs/lensprofile_creator/lensprofile_creator_cameramodel.pdf, only uses 12 coefficients, whereas we have 32.

As such, I was wondering if anyone is aware of any other CA correction models (beyond those used by Adobe and Hugin) which might be in use here?