This is definitely super interesting @liam_collod, thanks for sharing this asset.

I think it is quite a controlled comparison.

Also, I watched your video on film inversion with Nuke, very cool! I am especially intrigued by your decision to use the camera color space without conversion. Indeed, it sounds like a robust way to avoid negative values, which are not physically possible.

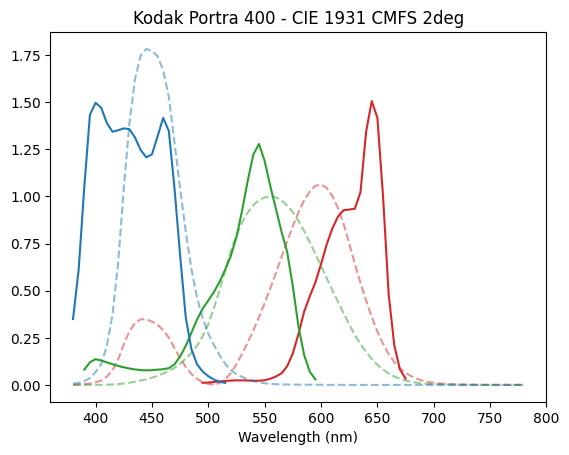

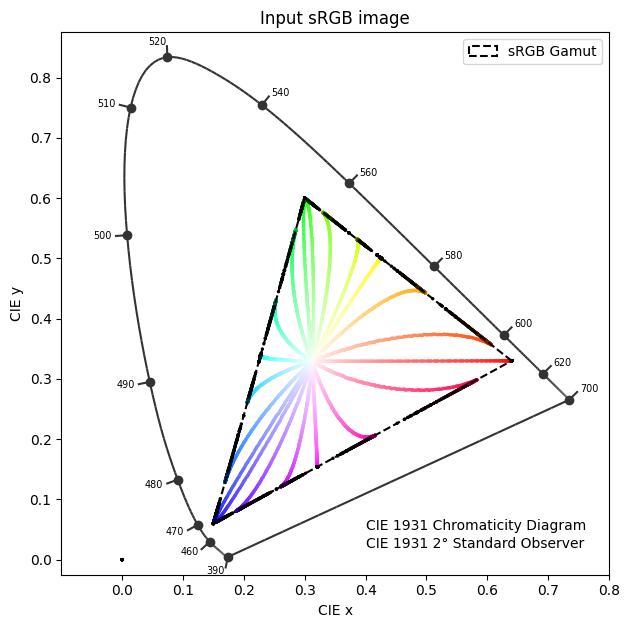

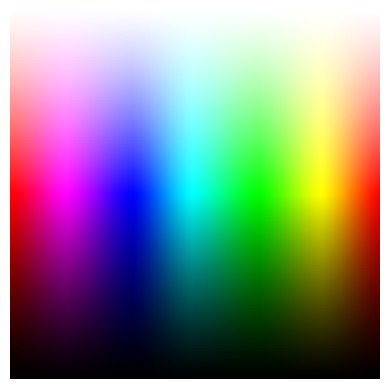

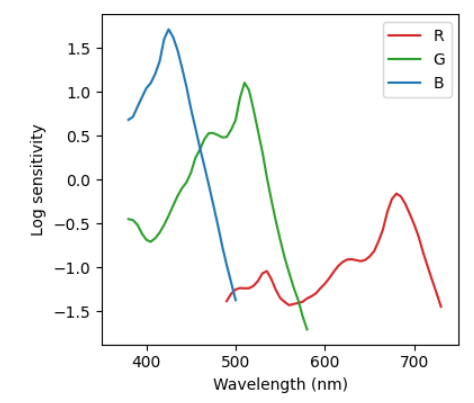

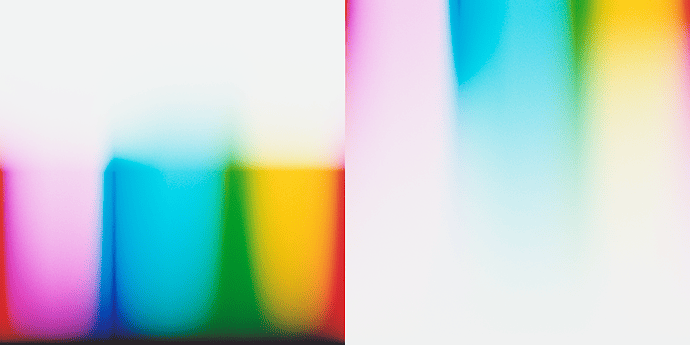

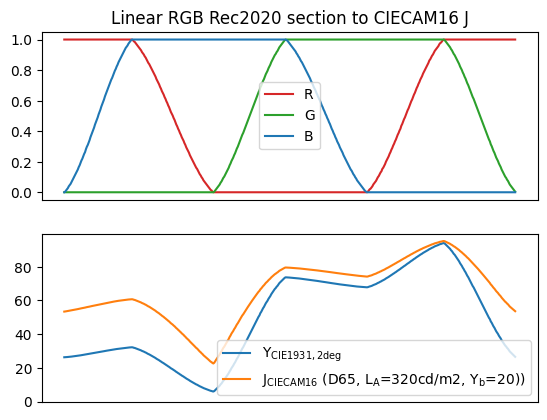

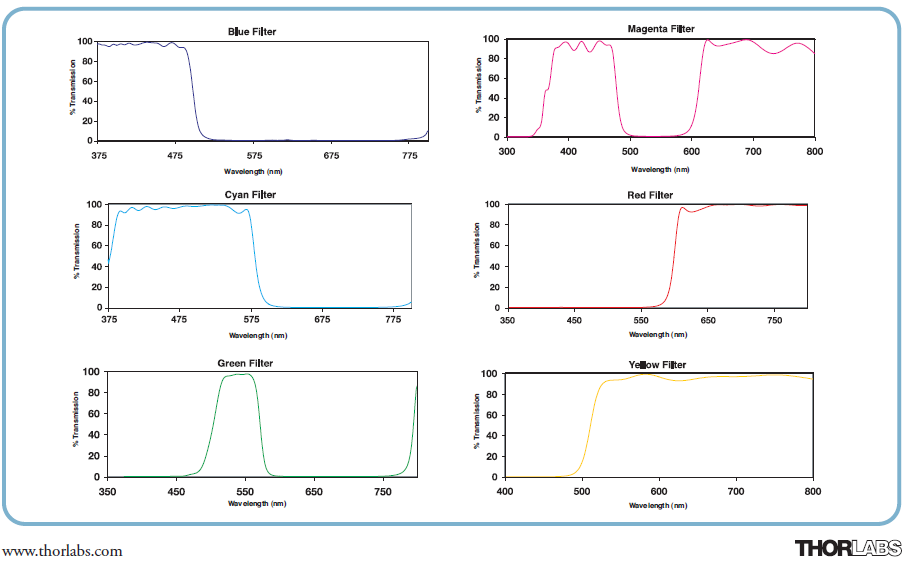

I also noticed the cyan explosion in some tests, but still haven’t addressed or pinpointed the root causes. As you demonstrated with your experiment, it is most likely related to the spectral upsampling of RGB data. From some discussion with @hanatos, I suspect that this is partially related to the fact that upsampling algorithms are optimized to minimize the errors when XYZ sensitivities are applied. Film negative sensitivities can be quite different from the standard observer. Here, for example, are those of Kodak Portra 400:

The film absorbs over a much wider range, and its sensitivities overlap less. My reasoning is that spectra upsampled from RGB are not well constrained in the regions of the spectrum outside the XYZ sensitivities. So the generated spectra might have unreasonable values at the edges of the visible spectrum, where film absorbs and eyes do not. But I am not knowledgeable enough on the topic to elaborate deeply on it. I will need to give this some more thought.
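To make that reasoning a bit more concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration: the "observer" and "film" curves are made-up Gaussians (not the CIE color matching functions and not Portra 400 data), and `observer_metamer` is a hypothetical helper, not part of any upsampling library. It adds a bump near 700 nm to a flat spectrum, after removing the part of the bump the toy observer can see, so the observer response stays the same while the film response changes.

```python
import numpy as np

wl = np.arange(380.0, 781.0, 5.0)  # wavelength grid in nm

def gaussians(centers, widths):
    """Stack of Gaussian sensitivity curves, shape (3, len(wl))."""
    return np.stack([np.exp(-0.5 * ((wl - c) / w) ** 2)
                     for c, w in zip(centers, widths)])

# Toy stand-ins, NOT real data: an "observer" concentrated mid-spectrum
# and a "film" whose channels reach further toward the spectral edges.
observer = gaussians([600.0, 550.0, 450.0], [40.0, 40.0, 25.0])
film = gaussians([660.0, 550.0, 420.0], [30.0, 30.0, 30.0])

def observer_metamer(spectrum, bump):
    """Add the part of `bump` that the observer cannot see to `spectrum`."""
    # Project the bump onto the span of the observer curves...
    visible = observer.T @ np.linalg.solve(observer @ observer.T, observer @ bump)
    # ...and keep only the remainder, which lies in the observer's null space.
    hidden = bump - visible
    # Scale so the resulting spectrum stays non-negative.
    hidden *= 0.5 * spectrum.min() / np.abs(hidden).max()
    return spectrum + hidden

flat = np.ones_like(wl)
edge_bump = np.exp(-0.5 * ((wl - 700.0) / 15.0) ** 2)  # energy near 700 nm
metamer = observer_metamer(flat, edge_bump)

obs_diff = observer @ metamer - observer @ flat   # ~0: same to the observer
film_diff = film @ metamer - film @ flat          # clearly non-zero for the film

print("observer response change:", obs_diff)
print("film response change:    ", film_diff)
```

If this toy model is a fair analogy, an upsampling method that is only judged by the observer response has no reason to prefer `flat` over `metamer`, yet the film model responds differently to the two.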

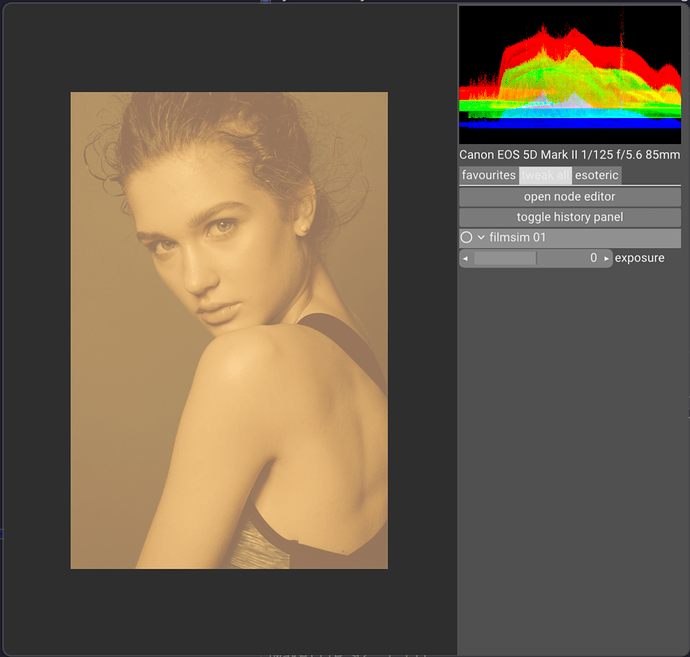

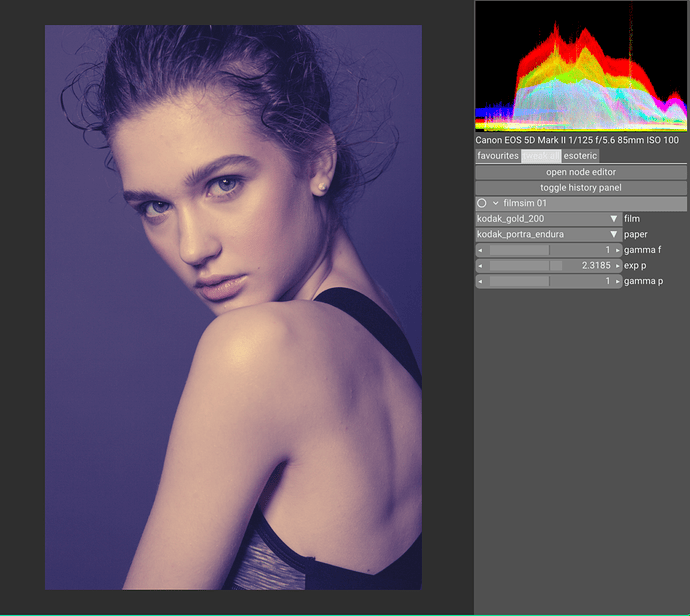

These little experiments, even with all their limitations, really tickle my brain, and I think they will trigger some nice thoughts and discussion! Thank you for sharing!

I would say that the way we decode the image from the raw, and the way it enters the spectral pipeline, have a huge impact.