Hi everyone!

A while ago I wrote an Observable notebook in which I experimented with variations on Symmetric Nearest Neighbor Smoothing (and explained what that filter does to begin with). I thought that the people on this forum might be interested:

https://observablehq.com/@jobleonard/symmetric-nearest-neighbour-all-the-things

(Warning: the notebook is pure JavaScript and makes extensive use of worker threads to render the filtered images live on the page, and the code is not very optimized, so it will likely slow down the browser tab for a bit while it does a lot of work. Sorry about that!)

I’ll give a short summary of how it works. The general concept is very easy to grasp:

- start with a basic box blur

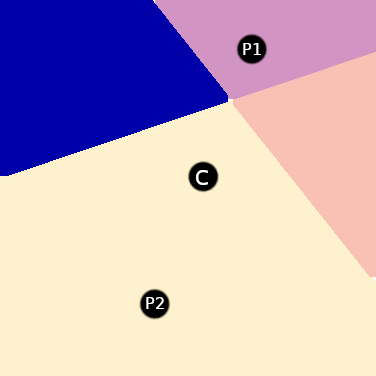

- instead of just averaging all surrounding pixels, take each pair of pixels on opposite sides of the center and select the one that is least different from the center pixel:

For center pixel C, P2 is selected and P1 is discarded

- average the selected pixels.

Congrats, you have just implemented a symmetric nearest neighbor smoothing filter!
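
The three steps above can be sketched in a few lines of Python. This is a minimal illustration and not the notebook's code: it assumes a grayscale image stored as a list of rows, clamps coordinates at the borders, measures "difference" as a plain absolute difference, and leaves the center pixel itself out of the average. The name `snn_box_smooth` is made up for this example.

```python
def snn_box_smooth(img, radius=1):
    """Symmetric nearest neighbor smoothing over a (2*radius+1)^2 box.

    Assumptions of this sketch: img is a list of rows of grayscale values,
    borders are clamped, and "least different" means the smallest absolute
    difference from the center value.
    """
    height, width = len(img), len(img[0])

    def px(y, x):
        # Clamp coordinates so border pixels reuse the nearest valid pixel.
        return img[min(max(y, 0), height - 1)][min(max(x, 0), width - 1)]

    # List each symmetric pair once: (dy, dx) is paired with (-dy, -dx).
    offsets = [(dy, dx)
               for dy in range(0, radius + 1)
               for dx in range(-radius, radius + 1)
               if dy > 0 or dx > 0]

    out = []
    for y in range(height):
        row = []
        for x in range(width):
            c = img[y][x]
            total = 0.0
            for dy, dx in offsets:
                p1 = px(y + dy, x + dx)
                p2 = px(y - dy, x - dx)
                # Keep whichever pixel of the pair is closer to the center.
                total += p1 if abs(p1 - c) <= abs(p2 - c) else p2
            # Average the selected pixels (one per pair).
            row.append(total / len(offsets))
        out.append(row)
    return out
```

On a clean step edge, every pair that straddles the edge contributes the pixel from the center's own side, so the edge survives untouched, whereas a plain box blur would smear it. An isolated outlier, on the other hand, gets replaced by the average of its surroundings.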

Example images (note: the forum seems to show downscaled versions of these images; to really see the differences you might have to open them in their own tabs or save them, and then compare them side by side):

Box blur:

“Box blur” SNN Smoothing:

This explanation glosses over quite a few implementation details, like how to determine which pixel is nearest (I ended up using the Kotsarenko-Ramos YIQ color difference metric).
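
For color images, "nearest" needs a color distance. Below is a rough sketch of a YIQ-based difference of this kind. It assumes 8-bit RGB tuples and uses the coefficients found in common open-source implementations of the metric (for example the pixelmatch library); the notebook may differ in the details. `rgb_to_yiq`, `yiq_delta` and `nearest_of_pair` are hypothetical helper names.

```python
def rgb_to_yiq(r, g, b):
    # RGB to YIQ conversion; coefficients are an assumption taken from
    # common implementations of this metric, not from the notebook.
    y = 0.29889531 * r + 0.58662247 * g + 0.11448223 * b
    i = 0.59597799 * r - 0.27417610 * g - 0.32180189 * b
    q = 0.21147017 * r - 0.52261711 * g + 0.31114694 * b
    return y, i, q


def yiq_delta(c1, c2):
    """Weighted squared distance between two RGB colors in YIQ space."""
    y1, i1, q1 = rgb_to_yiq(*c1)
    y2, i2, q2 = rgb_to_yiq(*c2)
    dy, di, dq = y1 - y2, i1 - i2, q1 - q2
    # Luma differences are weighted more heavily than chroma differences.
    return 0.5053 * dy * dy + 0.299 * di * di + 0.1957 * dq * dq


def nearest_of_pair(center, p1, p2):
    """Return whichever of p1/p2 is closer in color to the center pixel."""
    return p1 if yiq_delta(center, p1) <= yiq_delta(center, p2) else p2
```

With a helper like `nearest_of_pair`, the pair selection works on whole RGB pixels, and the averaging step is then done per channel.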

Now here is the fun part: we can easily “remix” this filter, for example using a Gaussian blur instead of a box blur, for slightly improved image quality:
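
One way to read the Gaussian variant: the pair selection stays the same, but each selected pixel is weighted by a Gaussian of its distance from the center before averaging. The sketch below assumes a grayscale list-of-rows image, clamped borders, and an absolute-difference metric; `snn_gaussian_smooth`, `radius` and `sigma` are illustrative names and defaults, not taken from the notebook.

```python
import math


def snn_gaussian_smooth(img, radius=2, sigma=1.0):
    """SNN smoothing with Gaussian weights instead of a flat box average."""
    height, width = len(img), len(img[0])

    def px(y, x):
        # Clamp coordinates so border pixels reuse the nearest valid pixel.
        return img[min(max(y, 0), height - 1)][min(max(x, 0), width - 1)]

    # One entry per symmetric pair; both pixels of a pair are equally far
    # from the center, so they share a single Gaussian weight.
    pairs = []
    for dy in range(0, radius + 1):
        for dx in range(-radius, radius + 1):
            if dy > 0 or dx > 0:
                weight = math.exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma))
                pairs.append((dy, dx, weight))
    weight_sum = sum(weight for _, _, weight in pairs)

    out = []
    for y in range(height):
        row = []
        for x in range(width):
            c = img[y][x]
            total = 0.0
            for dy, dx, weight in pairs:
                p1 = px(y + dy, x + dx)
                p2 = px(y - dy, x - dx)
                # Select the pixel of the pair that is closer to the center,
                # then weight it by its distance from the center.
                total += weight * (p1 if abs(p1 - c) <= abs(p2 - c) else p2)
            row.append(total / weight_sum)
        out.append(row)
    return out
```

Because the weights fall off with distance, far-away pixels influence the result less than in the box version, which is one plausible reason for the smoother-looking output.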

“Gaussian blur” SNN Smoothing:

Or how about selecting the most different neighbour instead of the most similar one?

… then using that as the basis for simple edge-detection:

And once we have edge-detection we can build a sharpening filter:
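
To make the last three ideas a bit more concrete, here is one possible way they could be wired together. Only the "pick the most different pixel of each pair" part comes from the description above. The edge measure (distance between the center and the furthest-neighbor average) and the sharpening step (pushing the center away from that average) are guesses made for illustration and may not match what the notebook does. All function names, the `amount` parameter, and the grayscale list-of-rows format are assumptions.

```python
def snn_average(img, radius=1, furthest=False):
    """Average one pixel from each symmetric pair around every pixel.

    furthest=False keeps the pixel closer to the center value (regular SNN);
    furthest=True keeps the more different one instead.
    """
    height, width = len(img), len(img[0])

    def px(y, x):
        # Clamp coordinates so border pixels reuse the nearest valid pixel.
        return img[min(max(y, 0), height - 1)][min(max(x, 0), width - 1)]

    offsets = [(dy, dx)
               for dy in range(0, radius + 1)
               for dx in range(-radius, radius + 1)
               if dy > 0 or dx > 0]

    out = []
    for y in range(height):
        row = []
        for x in range(width):
            c = img[y][x]
            total = 0.0
            for dy, dx in offsets:
                p1, p2 = px(y + dy, x + dx), px(y - dy, x - dx)
                p1_is_nearer = abs(p1 - c) <= abs(p2 - c)
                # Nearest mode keeps the nearer pixel, furthest mode the other.
                total += p1 if p1_is_nearer != furthest else p2
            row.append(total / len(offsets))
        out.append(row)
    return out


def edge_strength(img, radius=1):
    # Assumption: an edge is where the furthest-neighbor average differs
    # strongly from the center pixel.
    far = snn_average(img, radius, furthest=True)
    return [[abs(f - c) for f, c in zip(far_row, img_row)]
            for far_row, img_row in zip(far, img)]


def sharpen(img, amount=0.5, radius=1):
    # Assumption: sharpen by moving each pixel away from its
    # furthest-neighbor average (values are not clamped here).
    far = snn_average(img, radius, furthest=True)
    return [[c + amount * (c - f) for f, c in zip(far_row, img_row)]
            for far_row, img_row in zip(far, img)]
```

In flat regions both functions leave the image alone, since the nearest and furthest pixel of every pair are identical there. Next to an edge the furthest-neighbor average jumps to the other side of the edge, which is what makes it usable as an edge signal.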

… and finally, we can combine it all together: pick both the nearest and furthest neighbors over a Gaussian kernel, normalize the furthest neighbor by the nearest neighbor, average over the nearest neighbors, and subtract the (normalized) furthest neighbors. The result is a detail-preserving smoothing filter:

(note the dust in the upper-left corner of the tulip picture, and the highlights on the bikes in the kissing photo)

So anyway, after playing around with this for a bit it turned out that this was quite a versatile technique, and I was thinking that the more experienced image processing people here probably can do more interesting things with it than me ;). Hope you enjoyed the brief write-up,

Job