Dear Jaques,

Thank you for your reply, which gives an overview of noise reduction in RT that is both concise and comprehensive. Don’t worry about your English being bad; your style is actually better than that of many postings by native English speakers on the forum.

I know from this and other posts that you are an expert on algorithms for processing pictures, in particular noise reduction, and have contributed a lot to RT. So I took my time to draft a reply that can stand up to your comments and contribute to an enlightening discussion. Since my reply will be quite lengthy, I have split it into three parts.

1. What to aim for in noise reduction?

I concur with your statement that reducing noise will inevitably have consequences. One of the basics of information theory is that an algorithm transforming or filtering a signal cannot increase the amount of information in the signal (unless extra information is added). All it can do is redistribute the information. Therefore, a noise reduction algorithm providing an increase in the S/N ratio on the pixel level will inevitably lead to an information loss in another dimension, typically in a spatial dimension, leading to blur.

I also agree with your advice not to attempt a complete removal of noise, but to aim for an acceptable compromise between noise reduction and retention of picture details. The term “denoising” is therefore a bit misleading, but I will continue to use it, because it is shorter than “noise reduction” and is used in the RT menus. My personal preference is for keeping detail and accepting more residual noise.

One thing I take away from Emil Martinec’s article on noise is that the major sources of noise in photography are photon shot noise and receptor response variability. Both of these noise sources will also be present in human vision. Photon shot noise is the result of statistical fluctuations of the light source, and the photoreceptors in our eye will inevitably have some variability in size and response. If we want to compare our eyes with a camera regarding these noise sources, we have to consider the following facts. Camera sensors typically have pixel sizes of 2 to 5 µm and a quantum efficiency of about 15 to 30 %. A photoreceptor cone in the fovea (the central region of maximum acuity) of the eye’s retina has a diameter of about 2 µm and cannot have more than 100 % quantum efficiency (This web page gives a comprehensive review of the eye’s physics and physiology). The eye’s pupil has a maximum diameter of 5 to 8 mm, which for the eye’s focal length of about 25 mm translates to a maximum aperture of about f/3 to f/5, smaller than the apertures used with a DSLR in low light. Therefore, the number of photons a photoreceptor cone of the eye receives per unit of time is pretty much the same as for a pixel of a typical camera sensor. The human photoreceptor response rate is about 15 per second, and therefore the photon shot noise should be comparable to that of a camera at 1/15 second exposure time. Considering all this, there must be about as much photon shot noise in human vision as in digital photography at comparable exposure times. Nevertheless, we do not perceive noise in our color vision, even in low light, where any DSLR will record strong photon shot noise at 1/15 second exposure. From this I conclude that the human visual system has very efficient signal processing for noise reduction, which we are usually not aware of. However, no matter how effective this processing is, it must inevitably lead to a loss of information for the reason given above. But since we are used to this and unaware of it, we are not sensitive to this particular type of information loss.
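The arithmetic behind this comparison can be sketched in a few lines. The f-number is the focal length divided by the pupil diameter, and for Poisson-distributed photon arrivals the shot-noise S/N ratio is the square root of the mean photon count. The photon counts in the loop are made-up illustration values, not measurements.

```python
import math

def f_number(focal_length_mm: float, aperture_diameter_mm: float) -> float:
    """f-number N = focal length / aperture diameter."""
    return focal_length_mm / aperture_diameter_mm

def shot_noise_snr(mean_photons: float) -> float:
    """S/N for Poisson photon arrivals: mean / sqrt(mean) = sqrt(mean)."""
    return math.sqrt(mean_photons)

# Eye: focal length about 25 mm, pupil 5 to 8 mm (figures from the text above)
print(f_number(25, 8))  # 3.125
print(f_number(25, 5))  # 5.0

# Hypothetical photon counts per receptor per integration time
for photons in (25, 100, 400):
    print(photons, shot_noise_snr(photons))
```

Quadrupling the number of collected photons only doubles the S/N ratio, which is why low light is so demanding for both the eye and the sensor.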

If someone finds an error in these considerations which would lead to a different conclusion, then please reply and explain.

When I process a RAW file, I try to get a natural look, i.e. a rendering that is as close as possible to what I perceived at the scene. I think most other users of RT have the same aim. Therefore, when I judge the performance of a noise reduction tool, I will look at how much perceivable degradation it causes. If the tool’s algorithm leads to a loss of information which the human visual system retains in its noise suppression, I will notice an objectionable loss of quality. If the tool’s algorithm sacrifices the same type of information as the human visual system, I will be more satisfied with the result because I cannot notice the information loss.

Therefore, we should aim to design noise reduction tools to mimic the characteristics of the noise suppression of the human visual system. Unfortunately, little seems to be known on how noise suppression is achieved by the human visual system (I have never seen it even mentioned in an article I have read) and as a consequence, we have no mathematical criterion to test whether we achieve such mimicking. All we can do is take a close look at the result and make a visual judgment.

2. Why do I prefer noise reduction with Neat Image (NI) over the current tools of RT?

Before I explain my reasons, I will give a short description of how I use the program. NI is commercial software, but you can download a demo version for trial. I use an old version (6.1 Pro), so you will get a different look with the current demo, but the workflow seems to be unchanged. The makers of NI consider their algorithms proprietary, so we have no algorithm or code for their noise reduction method. However, even from the information NI provides to the user, we can draw some conclusions on which information is used by the noise reduction algorithm.

Denoising with NI involves the basic steps of loading a picture file, creating or loading a device noise profile, setting parameters for the denoising with control of the effect in a preview window, applying the settings to the entire picture and saving the processed picture. The program can create a noise profile from a sufficiently large featureless area of the picture. However, since many of my photos do not contain large featureless areas, I use the program option of creating noise profiles from a target photographed from a computer screen at different ISO settings. Noise profiles from the target usually have better quality than profiles made from a typical photo and they will give a good match for the noise properties, as long as the picture and the target shot have been processed the same way (NI displays values for the quality of the profile and the matching of noise properties with the loaded picture). I use different sets of device noise profiles processed with the demosaicing methods I use (AMAZE for low ISO and LMMSE for high ISO). NI has an option for loading the best matching profile of this set based on EXIF data and actual match.

The following screenshots show the properties NI displays for a device noise profile and the parameters for the denoising step. The first screenshot shows the data displayed in NI’s profile viewer window. The squares of the frequency components add up to the square of the overall noise level, which indicates that NI may be using a wavelet decomposition for denoising.
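The relation between the components and the overall level can be checked with a few lines. If the frequency components are uncorrelated, their variances add, so the overall level is the root of the sum of squares. The component values here are invented for illustration and are not read from NI.

```python
import math

def overall_noise_level(components):
    """Combine uncorrelated noise components in quadrature."""
    return math.sqrt(sum(c * c for c in components))

# Hypothetical noise levels for three frequency components
components = [3.0, 4.0, 12.0]
print(overall_noise_level(components))  # 13.0
```

The same relation would hold for any decomposition into uncorrelated bands, so it is consistent with a wavelet decomposition rather than proof of one.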

The second screenshot shows results of a noise analysis displayed in the window for creating or loading a device noise profile. When you hover the mouse over one of the sliders, NI displays a message saying that the slider adjusts the estimation of noise level in an eighth of the brightness range relative to the rough noise profile. This indicates that NI estimates noise levels for at least 8 brightness ranges and uses their differing values in the denoising algorithm. I do not fiddle with these sliders or apply the Auto fine-tune or Auto complete options, because this usually leads to a decrease in profile quality.
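How NI actually builds this table is not published. Purely as an illustration of the idea, a noise level per brightness range could be estimated from flat patches of a target shot roughly like this. The function name, the patch values and the 0 to 255 value range are assumptions.

```python
from statistics import mean, pstdev

def noise_by_brightness(patches, n_ranges=8, max_value=255):
    """Average noise level (standard deviation) per brightness range.

    patches: list of flat, featureless patches, each a list of pixel values.
    Returns one entry per range; None where no patch fell into that range.
    """
    buckets = [[] for _ in range(n_ranges)]
    width = (max_value + 1) / n_ranges
    for patch in patches:
        level = mean(patch)
        index = min(int(level // width), n_ranges - 1)
        buckets[index].append(pstdev(patch))
    return [mean(b) if b else None for b in buckets]

# Hypothetical patches: a dark one and a bright one
dark = [10, 12, 10, 12]
bright = [200, 206, 200, 206]
print(noise_by_brightness([dark, bright]))
```

A real target shot would supply many patches per range, so that each entry is an average over several measurements.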

The third screenshot shows part of the window for the noise filter settings, with the preview window showing the effect of the current settings. I usually load a preset for these settings which I made and which gives a good result at ISO125. This preset differs from the shown parameters only in a noise reduction amount for Y of 30%. I then check the denoising for different parts of the picture, and if I find there is too much residual noise, I increase the noise reduction amount for Y until a further increase causes too much loss of detail. This usually does not take more than 5 minutes. As you can see in the screenshot, all I have to adjust for the photo of the carpet taken at ISO800 is an increase for Y from 30 to 45% and I am done. For noisier pictures, I may have to increase the noise reduction amount for mid and low by 5 to 10%, but that is all I need to adjust to get good noise reduction with minimal loss of detail.

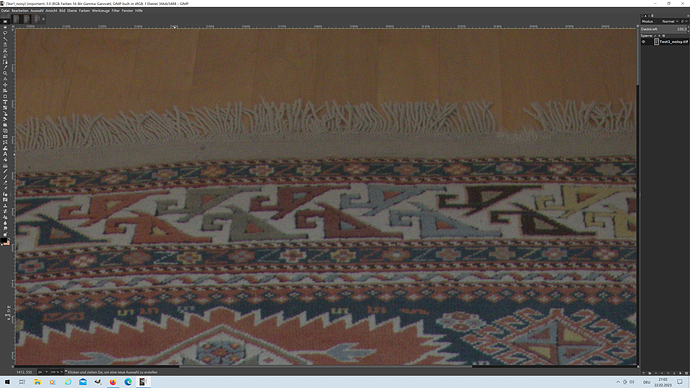

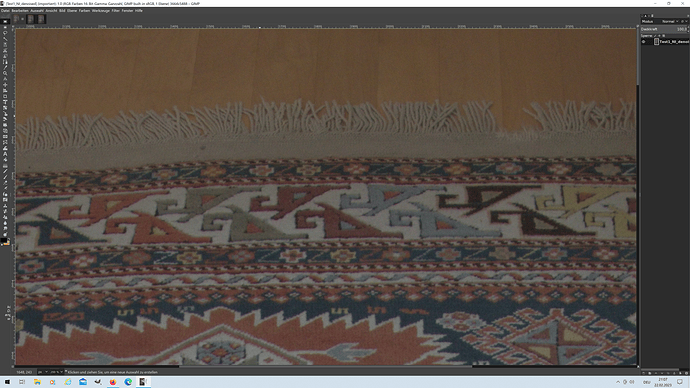

The last two screenshots show the denoising result for my carpet example with NI and a noise reduction amount for Y of 0%, i.e. reducing only chrominance. For both pictures, exposure has been increased by 0.5 EV to get the colors closer to the perceived colors and make the effects of denoising more visible. A direct comparison of the two screenshots shows that for this photo NI can substantially reduce color noise with changes to saturation so small that they are barely visible.

Prior to denoising:

After denoising only for chrominance:

The weak side of NI is processing very noisy pictures. Settings that do not lose a lot of detail will leave unsightly yellow and blue blotches, in particular in dark regions. I note from the working example in RawPedia for denoising with the local adjustments module that similar, although less prominent, blotches remain with the local adjustments denoising tool of RT. There will also be a significant desaturation in NI with very noisy pictures, but it is much less size dependent than what I observe with RT, so it can be countered by slightly increasing saturation.

My conclusion therefore is that I can get good denoising results with NI which can hardly be surpassed with the denoising tools of RT. With NI, I get there in a few minutes with few adjustments to one or at most three parameters for almost any picture, whereas in RT I still need to adjust many more parameters, which takes me much longer and often leads to inferior results because I could not get to the best parameter settings. The denoising tool in the details tab is easier to use, but I always get too much desaturation of small color details, no matter how I set the parameters. My impression is that for the denoising result I get with NI the unavoidable picture degradation is less obvious than for the results I have obtained with the RT tools up to now, so NI gets me closer to my aim for noise reduction.

I think my better results with NI are not due to some magic algorithm of NI, but mainly to the ability of the NI algorithms to use information from a noise profile for adjusting the strength of noise reduction based on signal strength and ISO. Once I have found settings for the noise reduction parameters which give my preferred balance between reducing noise and preserving detail, NI will give me pretty much the same denoising for almost any photo shot with the same camera. All I have to do then are small adjustments to one or two parameters, for which I have a better feel of what I can achieve by changing them than for the parameters I have in RT.

3. My opinions on possible improvements of noise reduction with RT

The following two sections are not meant to be feature requests for RT, but as a basis and starting point for further discussion.

Consider the use of noise profiles

I think that device noise profiles for a camera, obtained from a suitable test picture at different ISO settings, can provide a more even level of residual noise (i.e. less variation in S/N ratio) for a noise reduction tool applied early in the processing pipeline. Darktable is FOSS which uses such noise profiles, so there are people willing to share their knowledge and experience in this area and code to build on. The noise profiles are used in Darktable to derive parameters for a variance stabilization transform (VST) that is used to equalize noise strength prior to applying the denoising algorithm, as described on this web page and in this post. The post mentions that RT uses the gamma parameter of the noise module for a similar transform.
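To illustrate what a VST does, here is a minimal sketch using the generalized Anscombe transform, a textbook VST for noise whose variance has the form a*x + b (shot noise plus signal-independent noise). I do not claim that this is the exact transform or parameterization used by Darktable or RT. The parameter names a and b and their values are assumptions for illustration.

```python
import math

def noise_std(x, a, b):
    """Noise standard deviation at signal level x for variance a*x + b."""
    return math.sqrt(a * x + b)

def generalized_anscombe(x, a, b):
    """Variance-stabilizing transform for noise variance a*x + b."""
    return (2.0 / a) * math.sqrt(max(a * x + 0.375 * a * a + b, 0.0))

def stabilized_std(x, a, b, h=1e-3):
    """First-order estimate of the noise level after the transform:
    slope of the transform at x times the noise level at x."""
    slope = (generalized_anscombe(x + h, a, b)
             - generalized_anscombe(x - h, a, b)) / (2 * h)
    return slope * noise_std(x, a, b)

a, b = 0.5, 4.0  # hypothetical noise profile parameters
for x in (50, 500, 5000):
    print(x, round(noise_std(x, a, b), 2), round(stabilized_std(x, a, b), 3))
```

Before the transform the noise level grows strongly with signal strength; after it, the estimate stays close to 1 at all three signal levels, so a denoising algorithm that assumes constant noise can be applied.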

I think that deriving the gamma parameter and a setting for noise strength from a noise profile is not only more convenient than finding out suitable settings for all ISO values by trial and error, but will in general give better results. The reason is that judging a setting for quality of noise removal and quality of detail retention is a task which requires a high level of concentration, which cannot be maintained for more than about 10 to 15 minutes. The more parameters you have to adjust by trial and error, the longer it takes, and as your concentration weakens over time, it gets harder to recognize an improvement and so you get lost and cannot proceed to better settings.

Using a VST with a fitted function may work well for many cameras, but will not be universal, as some cameras appear to change amplification with brightness, which leads to noise profiles that cannot be fitted well. This post shows an example for a Fujifilm X-T3, where for the red and blue channel the upper half of the brightness range cannot be closely fit with the gamma parameter. The use of the gamma parameter will then lead to a stronger denoising for the red and blue channel in this brightness range and an unnecessary loss of detail.

I think a more universal approach could be achieved with a denoising algorithm that can apply a spatially varying strength for noise reduction. You could then create estimated noise strength maps for the three channels from a locally averaged signal strength and the corresponding noise strength of the noise profile, and use values from these noise maps to set the noise reduction strength of the algorithm. However, I don’t know whether such a denoising algorithm is available.
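The noise map idea could look roughly like the following sketch for one channel. It uses a simple box average for the local signal strength and linear interpolation in the noise profile. The function names, the profile format (pairs of signal level and noise level) and all numbers are hypothetical.

```python
def local_mean(image, radius=1):
    """Box-filter average over a (2*radius+1)^2 window, clipped at the borders."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            rows = range(max(0, y - radius), min(height, y + radius + 1))
            cols = range(max(0, x - radius), min(width, x + radius + 1))
            values = [image[j][i] for j in rows for i in cols]
            out[y][x] = sum(values) / len(values)
    return out

def profile_lookup(signal, profile):
    """Linear interpolation in a profile of (signal level, noise level) pairs
    sorted by signal level; values outside the range are clamped."""
    if signal <= profile[0][0]:
        return profile[0][1]
    for (s0, n0), (s1, n1) in zip(profile, profile[1:]):
        if signal <= s1:
            return n0 + (n1 - n0) * (signal - s0) / (s1 - s0)
    return profile[-1][1]

def noise_map(channel, profile, radius=1):
    """Estimated noise level per pixel for one color channel."""
    return [[profile_lookup(v, profile) for v in row]
            for row in local_mean(channel, radius)]

# Hypothetical profile and a tiny uniform 3x3 channel
profile = [(0, 1.0), (100, 3.0), (200, 4.0)]
channel = [[50, 50, 50], [50, 50, 50], [50, 50, 50]]
print(noise_map(channel, profile)[1][1])  # 2.0
```

Because the lookup is a table rather than a fitted function, it would also follow a profile like the X-T3 example, where the noise does not grow smoothly with brightness.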

Consider denoising RAW data prior to demosaicing

In your reply, you mentioned that the demosaicing algorithm has a strong influence on noise reduction. I have experienced the same for denoising with NI. I get different noise profiles for the same RAW file demosaiced with different algorithms and have to use a noise profile made with the same demosaicing algorithm to get good denoising. I suspect that the reason for this is that the demosaicing algorithms introduce a local spatial and cross channel correlation which changes the noise characteristics.

As far as I know, most denoising algorithms assume noise to be random with no spatial and cross channel correlation and will give inferior results and artifacts if such correlation is present. I have noted this for NI, where applying a chromatic aberration correction (which generates cross channel noise correlation) to a noisy picture prior to denoising will lead to much more residual noise towards the edges of the frame. Therefore, I expect that denoising the RAW data prior to demosaicing will give improved results, i.e. less detail loss, for the same amount of noise removal. In addition, this should reduce demosaicing artifacts. If noise profiles are used, only a single profile per ISO setting would be needed and could be used with any demosaicing algorithm.

For Bayer pattern RAW data, this could be done by partitioning the RAW picture by color into four monochromatic pictures, one containing the pixels from odd number rows and columns, one from odd number rows and even number columns, one from even number rows and odd number columns, and one from even number rows and columns. You could then denoise each of these pictures with appropriate settings for each color channel (preferably from noise profiles obtained from RAW data) and reassemble them to a denoised RAW picture with the original Bayer pattern.
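The partitioning and reassembly can be sketched as follows. The planes are keyed by the zero-based row and column offset within each 2x2 cell, so offset (0, 0) corresponds to the odd number rows and columns in the one-based counting used above. Which plane holds which color depends on the camera’s Bayer layout, and the denoising of the planes is left out here.

```python
def split_bayer(raw):
    """Split a Bayer mosaic (list of rows) into four planes, keyed by
    (row offset, column offset) within each 2x2 cell."""
    return {(dy, dx): [row[dx::2] for row in raw[dy::2]]
            for dy in (0, 1) for dx in (0, 1)}

def merge_bayer(planes, height, width):
    """Reassemble the four planes into a mosaic with the original layout."""
    raw = [[0] * width for _ in range(height)]
    for (dy, dx), plane in planes.items():
        for j, row in enumerate(plane):
            for i, value in enumerate(row):
                raw[dy + 2 * j][dx + 2 * i] = value
    return raw

raw = [[1, 2, 3, 4],
       [5, 6, 7, 8],
       [9, 10, 11, 12],
       [13, 14, 15, 16]]
planes = split_bayer(raw)
# Each plane would be denoised here with its own settings (not shown).
print(planes[(0, 0)])  # [[1, 3], [9, 11]]
print(merge_bayer(planes, 4, 4) == raw)  # True
```

Note that neighboring pixels in each plane are two pixels apart in the original mosaic, so any radius or scale parameter of the denoising algorithm would have to be chosen with that in mind.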

Of course, one disadvantage of this approach would be that denoising is done in RGB, so you cannot use different denoising strengths for luminance and chrominance. I doubt that it is possible to denoise RAW data with different strengths for luminance and chrominance, because physiologically meaningful values for luminance can only be derived after transforming from the device color space to the working color space and applying white balance. Since chrominance noise is more objectionable than luminance noise, it would therefore be preferable to use two steps: a first denoising step prior to demosaicing at a strength that gives acceptable luminance noise, followed by a second denoising step after demosaicing and white balancing to further reduce chrominance noise.

Another possible disadvantage of denoising prior to demosaicing would arise if the blur introduced by the denoising algorithm increased significantly with increasing signal strength. Capture sharpening with the RL algorithm would then give less sharpening with increasing brightness, because the algorithm assumes a constant blur radius for the point spread function.

Kind regards,

Georg