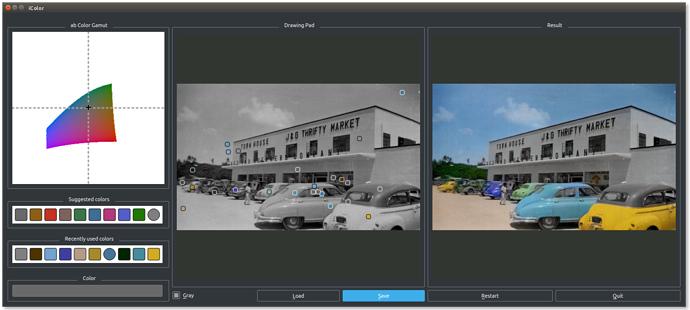

Interactive Deep Colorization in action.

This is a Low-Quality Unprocessed™ scan of an old Brownie photo (a small 1:1 print) my grandfather took in Guam while he was stationed there during WWII.

To invoke it, I specify the image file so as not to waste time processing the sample image: `python2 ideepcolor.py --cpu_mode --image_file ~/Downloads/store.jpeg`

The program begins by automatically colorizing your image (deep colorization).

As you can see, it is well trained on sky and cloud formations, and it adds a slight green tinge to the vegetation.

One goes about clicking points on the grey image and assigning each a color from either a suggested palette or the recently used colors.

Here’s the Qt4 UI hard at work.

The selected points are sent off as color hints, along with the grayscale image, to the Caffe-based neural network. During installation of iDeepColor, a pretrained model about 0.5 gigabytes in size is downloaded. Processing (which is redone with each color selection) takes about 10 seconds on a single CPU, a 4 GHz i5. Building Caffe with OpenMP should speed this up, and building it with CUDA+cuDNN is a good way to use Nvidia GPUs of compute capability 3.0 and higher. I also tried building the OpenCL branch but have yet to test it on an OpenCL GPU.
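To make the hint step a bit more concrete, here is a minimal Python sketch of how clicked colors might be encoded before they reach the network. As I understand the paper, local hints are given to the network as sparse ab (CIE Lab chroma) values plus a binary mask marking where the user clicked. The function names `srgb_to_lab` and `build_local_hints`, the 3×3 patch size, and the channel-first layout are my own assumptions for illustration, not ideepcolor's actual code.

```python
import numpy as np


def _linearize(c):
    # Undo the sRGB transfer curve for one 0..255 channel value.
    c = c / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t):
    # CIE Lab companding function.
    delta = 6.0 / 29.0
    return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0


def srgb_to_lab(rgb):
    """Convert an (r, g, b) tuple in 0..255 to CIE Lab (D65 white point)."""
    r, g, b = (_linearize(v) for v in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = _f(x / 0.95047), _f(y / 1.0), _f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def build_local_hints(height, width, clicks, patch=3):
    """Hypothetical hint encoder: channels are (a, b, mask), channel-first.

    clicks is an iterable of (row, col, (r, g, b)). Each click fills a
    patch x patch square (clipped at the image border) with the color's
    ab values and sets the mask channel to 1 there. The patch size is an
    assumption, not taken from ideepcolor.
    """
    hints = np.zeros((3, height, width), dtype=np.float32)
    half = patch // 2
    for row, col, rgb in clicks:
        _, a, b = srgb_to_lab(rgb)  # lightness comes from the grey image
        r0, r1 = max(row - half, 0), min(row + half + 1, height)
        c0, c1 = max(col - half, 0), min(col + half + 1, width)
        hints[0, r0:r1, c0:c1] = a
        hints[1, r0:r1, c0:c1] = b
        hints[2, r0:r1, c0:c1] = 1.0  # mask: "user gave a color here"
    return hints
```

For example, `build_local_hints(256, 256, [(40, 100, (90, 150, 220))])` marks a sky-blue 3×3 patch near the top of a 256×256 hint tensor (the size is arbitrary here). Only the chroma is taken from the click because the lightness is already known from the grayscale L channel, which would be fed to the network alongside the hints.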

I didn’t do a very careful job, but here is the result anyway:

Moral of the story

Using it is pretty fun; installing it, not so much. Sure, a professional colorizer can work magic with GIMP and the like, but that’s work. It also makes you want one of those zillion-CUDA-core GPUs.

So what do you think of the technology? Make your opinion known. Vote now!

- Bring on the AI

- Let me do it by hand


Citation:

Zhang, Richard; Zhu, Jun-Yan; Isola, Phillip; Geng, Xinyang; Lin, Angela S.; Yu, Tianhe; Efros, Alexei A. "Real-Time User-Guided Image Colorization with Learned Deep Priors." ACM Transactions on Graphics (TOG) 36(4), ACM, 2017.
