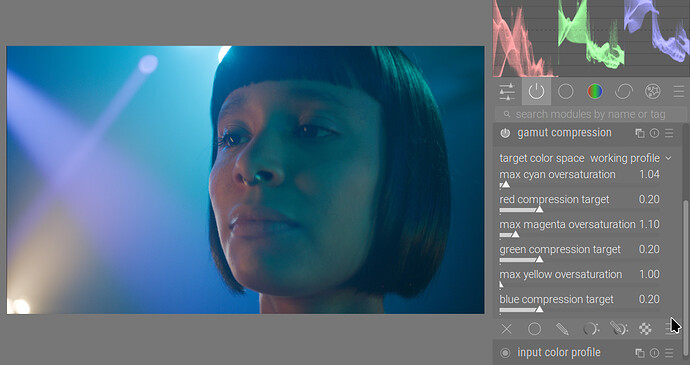

I’ve resurrected the idea of adding @jedsmith’s gamut-compress to darktable, this time not as part of AgX, but as a separate module.

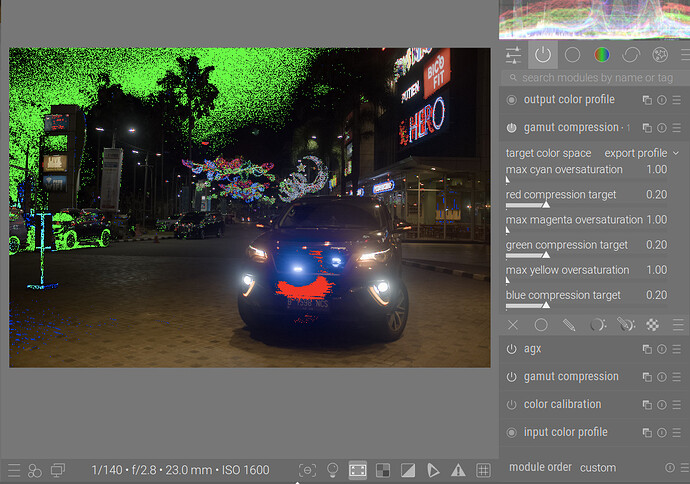

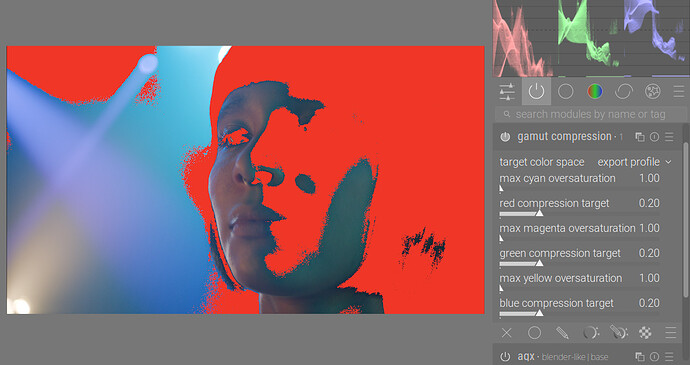

In AgX, out-of-gamut colours are “pushed inside” the gamut: their components are adjusted so that no negative value remains. However, because the colours that were already inside the gamut are not compressed, these “mitigated” colours end up landing on top of in-gamut colours and become hard to tell apart from them. Jed’s algorithm instead gently desaturates highly saturated colours, which makes room near the gamut boundary so that out-of-gamut colours can be brought inside.
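For reference, here is a sketch of the per-component compression as published in the ACES Reference Gamut Compression, which grew out of Jed’s gamut-compress work. Treat it as an approximation: the curve and parameter names in the darktable module may differ. Here $a$ is the achromatic value, $d_c$ the distance of component $c$ from it, $t$ the threshold, $l$ the limit and $p$ the power of the curve.

$$
\begin{aligned}
a &= \max(r, g, b), \qquad d_c = \frac{a - c}{|a|}, \quad c \in \{r, g, b\} \\
f(d) &= \begin{cases} d, & d < t \\[4pt] t + s\,\dfrac{x}{\left(1 + x^{p}\right)^{1/p}}, \quad x = \dfrac{d - t}{s}, & d \ge t \end{cases} \\
s &= \frac{l - t}{\left( \left(\dfrac{1 - t}{l - t}\right)^{-p} - 1 \right)^{1/p}} \\
c' &= a - f(d_c)\,|a|
\end{aligned}
$$

A distance $d_c > 1$ means the component is negative, i.e. outside the gamut. Distances below $t$ are left alone, and $s$ is chosen so that a distance of exactly $l$ lands on $1$, the gamut boundary. Since $t$ and $l$ can be set separately for each component, the amount of compression can differ between the red, green and blue distances.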

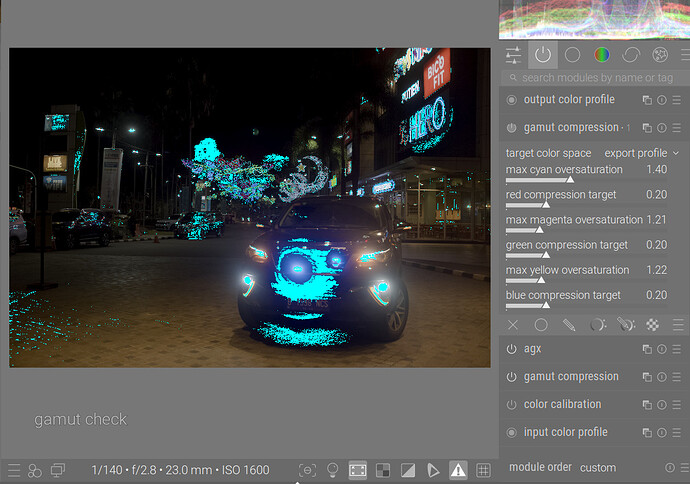

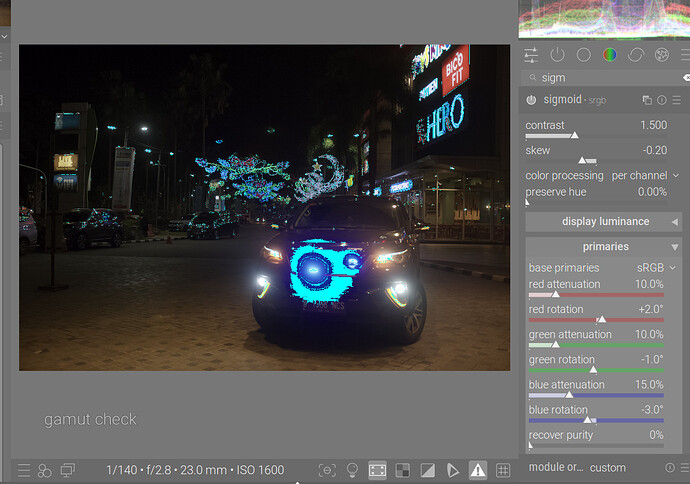

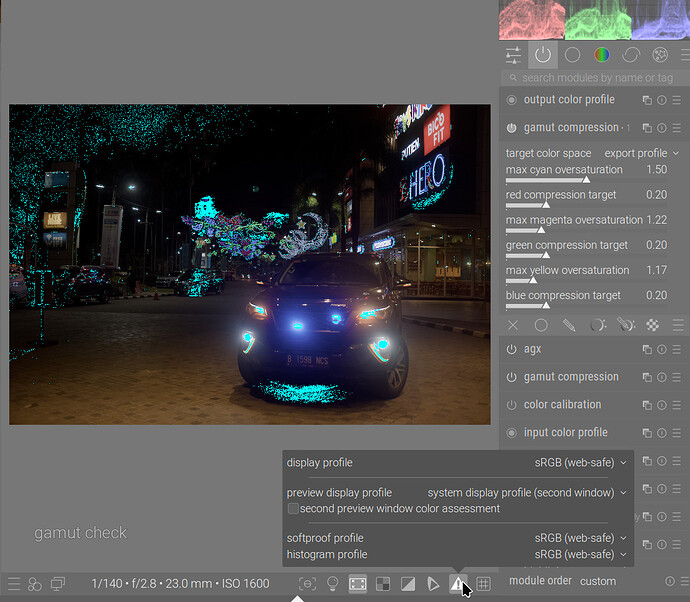

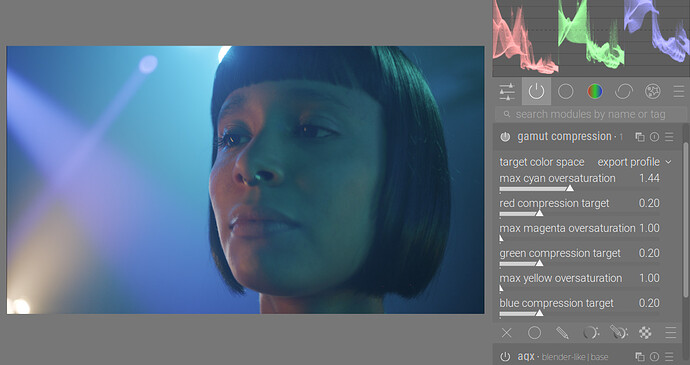

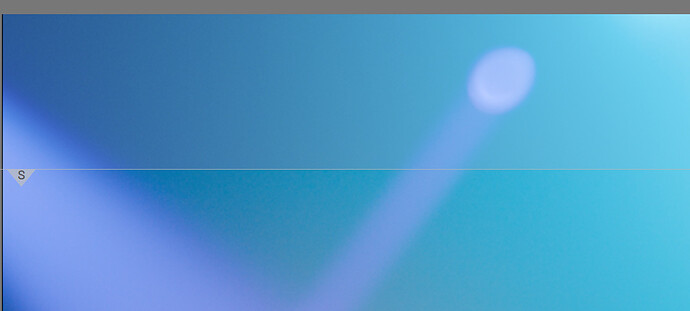

These are AgX alone (with the blender-like|base preset, only contrast etc. adjusted), followed by the same settings with the gamut compressor placed before AgX, compressing into Rec. 2020.

While sigmoid and AgX allow manipulation of primaries, filmic, for example, does not. Also, most tools operate on all colours, whereas this one lets you compress, on a per-component basis, only out-of-gamut and highly saturated colours.
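To illustrate what compressing “on a per-component basis” means, here is a minimal Python sketch. It assumes the power curve from the ACES Reference Gamut Compression. The function names and the threshold/limit values are hypothetical and are not the darktable module’s parameters or defaults. Components whose distance from the achromatic value is below their threshold pass through untouched. Only the saturated or out-of-gamut ones are pulled in.

```python
from typing import Tuple

RGB = Tuple[float, float, float]

# Hypothetical, illustrative per-component settings (NOT the module's defaults).
THRESHOLDS: RGB = (0.8, 0.8, 0.9)   # distances below these are left unchanged
LIMITS: RGB = (1.15, 1.25, 1.3)     # distances equal to these map onto the gamut boundary
POWER = 1.2                         # shape of the compression curve


def compress_distance(d: float, threshold: float, limit: float, power: float) -> float:
    """Power-curve compression: identity below threshold, limit maps to 1.0."""
    if d < threshold:
        return d
    # Scale chosen so that d == limit lands exactly on 1.0 (the gamut boundary).
    scale = (limit - threshold) / (
        ((1.0 - threshold) / (limit - threshold)) ** -power - 1.0
    ) ** (1.0 / power)
    x = (d - threshold) / scale
    return threshold + scale * x / (1.0 + x ** power) ** (1.0 / power)


def gamut_compress(rgb: RGB, thresholds: RGB, limits: RGB, power: float = POWER) -> RGB:
    """Compress each component's distance from the achromatic value independently."""
    achromatic = max(rgb)
    if achromatic == 0.0:
        return rgb  # no meaningful distance to compute for black
    out = []
    for c, t, l in zip(rgb, thresholds, limits):
        d = (achromatic - c) / abs(achromatic)  # d > 1 means c is negative
        if d < t:
            out.append(c)  # low-saturation component: returned untouched
            continue
        out.append(achromatic - compress_distance(d, t, l, power) * abs(achromatic))
    return (out[0], out[1], out[2])


if __name__ == "__main__":
    # Green is negative (out of gamut); it is pulled to a small positive value,
    # while red (the achromatic max) and blue (below its threshold) are unchanged.
    print(gamut_compress((1.0, -0.1, 0.2), THRESHOLDS, LIMITS))
```

One consequence of this curve: distances beyond the limit are still reduced, but they approach $t + s$ asymptotically rather than 1. Colours far outside the chosen limit therefore move closer to the gamut without necessarily ending up inside it.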

What do you think?