C’est! Everything you’ve said is true, as far as color reproduction goes. But scanning film isn’t about reproducing how the negative looks to the human eye and brain. In the world of color appearance reproduction referred to human vision, there is no difference between a portion of the negative that appears orange because it transmits a specific proportion of 540 nm (green) and 640 nm (red) light and a portion that appears orange because it transmits only 590 nm (orange-appearing) light. For scanning, however, one of these is the orange mask, and the other becomes blue in the inverted image.
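To make the metamerism point concrete, here is a minimal Python sketch (assuming NumPy) that builds the two “orange” stimuli described above: a single 590 nm line, and a 540 nm + 640 nm mix whose powers are solved so a human-like observer responds identically to both. It then shows that a print-medium-like observer responds very differently to the same pair. All sensitivity curves here are hypothetical Gaussian stand-ins, not real cone fundamentals or print-film data; only the qualitative result is meant to carry over.

```python
import numpy as np

# Wavelength grid in nm (380-780 nm, 1 nm steps).
wl = np.arange(380, 781)

def gaussian(peak_nm, width_nm):
    """Hypothetical bell-shaped sensitivity curve (not measured data)."""
    return np.exp(-0.5 * ((wl - peak_nm) / width_nm) ** 2)

def line(nm, power=1.0):
    """A spectrum containing a single narrow line at `nm`."""
    s = np.zeros_like(wl, dtype=float)
    s[wl == nm] = power
    return s

# ASSUMPTION: rough Gaussian stand-ins, not real cone or print-film data.
human = np.stack([gaussian(565, 50), gaussian(540, 45), gaussian(445, 25)])        # L, M, S
print_medium = np.stack([gaussian(690, 20), gaussian(545, 20), gaussian(440, 20)])  # R, G, B

def response(observer, spectrum):
    # Each channel sums spectrum x sensitivity over wavelength.
    return observer @ spectrum

# Stimulus A: only 590 nm light.
single = line(590)

# Stimulus B: 540 nm + 640 nm lines, with powers solved so the L and M
# responses equal those of stimulus A (S is ~0 at these wavelengths).
basis = np.stack([response(human[:2], line(540)), response(human[:2], line(640))], axis=1)
a, b = np.linalg.solve(basis, response(human[:2], single))
mix = line(540, a) + line(640, b)

print("human  A:", response(human, single).round(3), " B:", response(human, mix).round(3))
print("print  A:", response(print_medium, single).round(3), " B:", response(print_medium, mix).round(3))
```

The human-like responses match to within rounding, while the print-like red and green responses differ several-fold: the two patches are metamers for the eye but not for the print medium.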

Here’s an excerpt from the bible of digital color, Digital Color Management: Encoding Solutions by Edward J. Giorgianni and Thomas E. Madden, that explains it better than I can:

> In previous system examinations, standard colorimetric measurements were used to quantify the trichromatic characteristics of color stimuli produced by the output media. It was logical to make such measurements because the media involved formed positive images intended for direct viewing. But since negatives are not meant to be viewed directly, it would not seem logical to also measure them using methods based on the responses of a human observer.
>
> If standard CIE colorimetry is ruled out, how else can images on negative films be measured? Is there a different type of “standard observer” that would be more appropriate for negative images? Answering these questions requires a closer look at how photographic negative films are designed and how they are used in practice.
>
> In typical applications, photographic negatives are optically printed onto a second negative-working photographic medium (Figure 8.4). The second medium might be photographic paper, in which case the final image is a reflection print. Negatives also can be printed onto special clear-support films, such as those used to make motion picture projection prints from motion picture negatives. In either case, the resulting print is a directly viewable positive image.
>
> What is important to appreciate is that each photographic negative film is designed to be optically printed onto one or more specific print films or papers, using specific printer light sources. That fact provides the key to making meaningful measurements of color-negative images.
>
> Optical printing is an image-capture process that is quite similar to several others discussed earlier. As shown in Figure 8.5, there is an object (the negative image) that is illuminated (by the printer light source) and “viewed” by an “observer” (in this case, a print medium). The print medium for which the negative film is intended, then, should be considered the “standard observer” for that film, and measurements should be made according to the particular red, green, and blue spectral responsivities of the intended print medium. In other words, measurements should be made based on what the intended print medium will “see” and capture when it “looks” at the illuminated negative in the printer.
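The measurement Giorgianni describes can be sketched as a channel-weighted density: for each print-medium channel, weight the negative’s transmittance by the printer light and that channel’s responsivity, then take the negative log. This is the idea behind what is usually called printing density. The sketch below assumes NumPy, a flat printer lamp, Gaussian print-medium responsivities, and a made-up “orange-ish” patch; none of these are real measured curves, and `channel_densities` is a hypothetical helper name.

```python
import numpy as np

wl = np.arange(380, 781)  # wavelength grid, nm

def gaussian(peak_nm, width_nm):
    # Hypothetical bell-shaped curve standing in for measured data.
    return np.exp(-0.5 * ((wl - peak_nm) / width_nm) ** 2)

# ASSUMPTIONS: a flat printer lamp and Gaussian print-medium responsivities.
printer_light = np.ones_like(wl, dtype=float)
print_medium = {"red": gaussian(690, 20), "green": gaussian(545, 20), "blue": gaussian(440, 20)}

def channel_densities(transmittance, light, responsivities):
    """Density of a film sample as 'seen' by each channel of the print medium.

    D_c = -log10( sum(T * E * S_c) / sum(E * S_c) )
    """
    out = {}
    for name, s in responsivities.items():
        weight = light * s  # what this channel effectively responds to
        out[name] = -np.log10(np.sum(transmittance * weight) / np.sum(weight))
    return out

# Toy negative patch: passes long wavelengths, blocks short ones
# (a smooth step centred near 560 nm), so it looks orange-ish.
patch = 0.05 + 0.85 / (1 + np.exp(-(wl - 560) / 15))
print(channel_densities(patch, printer_light, print_medium))
```

Swapping `print_medium` for human-like curves would give a different set of densities for the same patch, which is exactly why the choice of “observer” matters for negatives.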

Photographic print media “see” light very differently from the human eye, and therefore also very differently from digital cameras and camera profiles, which are designed to mimic human vision.

Here are the spectral sensitivity curves of the human eye:

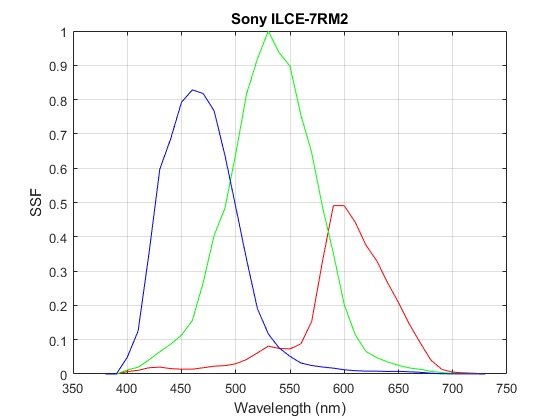

Here are the sensitivity curves for a camera (for those who aren’t already familiar):

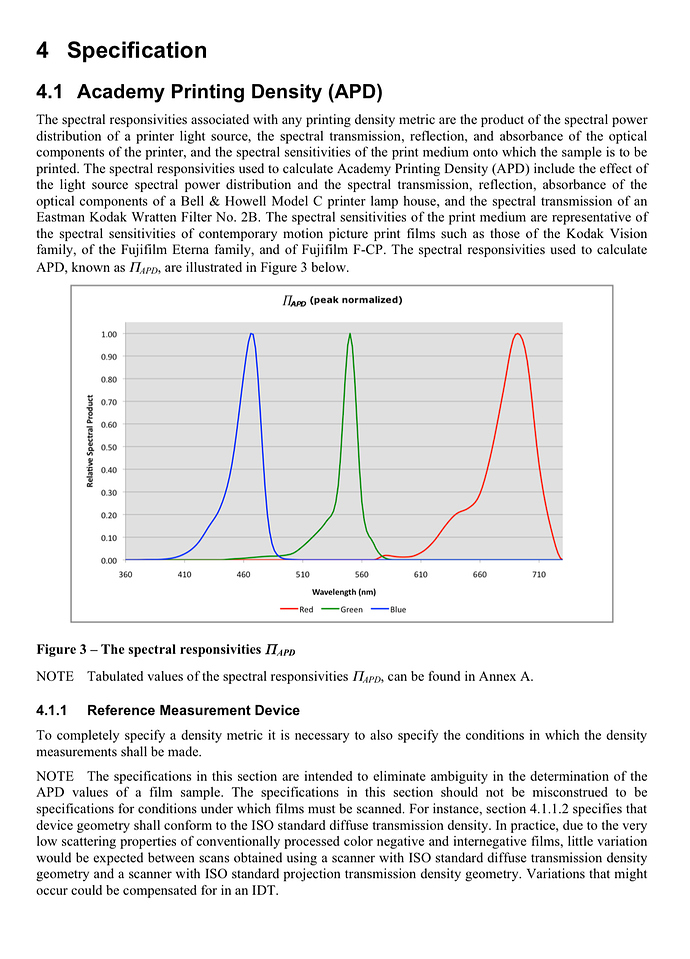

And here are the responsivity curves of the specific combination of printer light source and print-medium sensitivity that negative film is designed to be “seen” by:

So film is designed to be seen by an “observer” with much more deep-red (even near-infrared) sensitivity, and much less yellow/orange and cyan sensitivity, than the human eye or a camera has. Because we can’t change the sensitivity of the camera sensor, we have to use the light source to shape the overall system responsivity so that it is as close as possible to the one film is designed to be seen by.
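Since the sensor is fixed, the light source is the only lever: at each wavelength, the system’s effective responsivity is the light’s spectral power multiplied by the sensor’s sensitivity. The sketch below (assuming NumPy) shows this for a single red channel, comparing a broadband white source against a hypothetical narrow deep-red LED. The camera curve, target curve, and LED are made-up Gaussians, and cosine similarity is just one convenient way to compare curve shapes; a real setup has three channels with crosstalk, which this ignores.

```python
import numpy as np

wl = np.arange(380, 781)  # wavelength grid, nm

def gaussian(peak_nm, width_nm):
    # Hypothetical bell-shaped curve standing in for measured data.
    return np.exp(-0.5 * ((wl - peak_nm) / width_nm) ** 2)

def similarity(a, b):
    """Cosine similarity between two spectral curves (1.0 = identical shape)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# ASSUMPTIONS: made-up curves for the red channel only.
camera_red = gaussian(600, 40)   # camera sensor red channel
target_red = gaussian(690, 20)   # print-medium red responsivity the film "expects"

light_sources = {
    "broadband white": np.ones_like(wl, dtype=float),
    "narrow red LED": gaussian(680, 10),
}

for name, light in light_sources.items():
    # What the scanning system actually responds to: light x sensor, per wavelength.
    effective = light * camera_red
    peak = wl[np.argmax(effective)]
    print(f"{name:16s} peak {peak} nm, similarity to target {similarity(effective, target_red):.2f}")
```

With the broadband source, the effective red response peaks where the camera’s red channel does; the narrow source pulls it toward the deep red the print medium expects, at the cost of throwing away most of the light.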

Here are a couple of other resources that really helped me start to understand the interplay between light source, negative, sensor, and digital profiling/processing: