This post describes the changes I have made to noise reduction in darktable since January.

Context: how does denoise profiled work?

What is the profile?

A profile is a small set of parameters (2 per channel and per ISO value in our case) that describe how the noise variance changes with luminosity.

Variance is a measure of the dispersion of values around the mean: in our case it tells us how far the values of the noisy pixels can be from the values they would have if there were no noise.

The profile gives the variance as a function of the mean.
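As a minimal sketch of what "variance as a function of the mean" looks like in practice, here is the two-parameter affine model (variance = a * mean + b) written out in Python. The dictionary layout, the function names and all the numbers are made up for illustration; they are not darktable's data structures nor values from a real camera.

```python
import math

# Hypothetical profile for a single ISO value: one (a, b) pair per channel.
# The numbers are invented for illustration only.
PROFILE_ISO_3200 = {
    "red": (4.0e-4, 2.0e-6),
    "green": (2.0e-4, 1.0e-6),
    "blue": (5.0e-4, 3.0e-6),
}


def noise_variance(mean, a, b):
    """Noise variance predicted by the affine model V = a * mean + b."""
    return a * mean + b


def noise_sigma(mean, a, b):
    """Standard deviation of the noise for a pixel whose clean value is `mean`."""
    return math.sqrt(noise_variance(mean, a, b))


a, b = PROFILE_ISO_3200["red"]
for mean in (0.01, 0.1, 0.5):
    print(f"mean={mean:.2f}  variance={noise_variance(mean, a, b):.3e}")
```

With such a model the predicted variance grows with the pixel value, so bright areas carry more noise in absolute terms than dark ones.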

Why is it useful to have a profile?

Denoising algorithms usually assume that the noise variance does not depend on luminosity. Applying them to data where this assumption does not hold gives uneven smoothing of the noise. The profile lets us define a transformation of the image, called a variance stabilization transform (VST), that produces an image in which the noise variance is constant: after the transform, the noise in dark and light areas has the same characteristics, and the denoising algorithm can do its job much more easily.
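To make the idea concrete, here is a small simulation, assuming noise whose variance is a * mean + b. For that model a classic stabilizing transform is f(x) = (2 / a) * sqrt(a * x + b). This is a generic textbook-style sketch, not necessarily the exact formula darktable implements, and the values of `A` and `B` are hypothetical.

```python
import math
import random
import statistics


def vst(x, a, b):
    """Variance-stabilizing transform for noise with variance a * x + b."""
    # Clamp at zero so that a strongly negative noisy sample cannot break sqrt.
    return 2.0 / a * math.sqrt(max(a * x + b, 0.0))


def stabilized_variance(mean, a, b, n=20000, seed=0):
    """Simulate noisy pixels around `mean`, transform them, return their variance."""
    rng = random.Random(seed)
    sigma = math.sqrt(a * mean + b)
    samples = [vst(rng.gauss(mean, sigma), a, b) for _ in range(n)]
    return statistics.variance(samples)


A, B = 1e-4, 1e-6  # hypothetical profile parameters

# Before the transform, the noise variance differs a lot between the two levels.
raw_dark = A * 0.05 + B
raw_bright = A * 0.8 + B

# After the transform, both levels should have a variance close to 1.
dark = stabilized_variance(0.05, A, B)
bright = stabilized_variance(0.8, A, B)
print(raw_dark, raw_bright, dark, bright)
```

The denoising algorithm can then be run on the transformed values with a single noise level, and the result mapped back with the inverse transform.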

That’s how denoise profiled works.

What’s new in denoise profiled

We have seen that darktable uses two parameters for each channel and ISO value to perform its variance stabilization transform.

An important change was to make the variance stabilization transform more generic.

The new variance stabilization transform accepts a third parameter that controls the relative amount of denoising in shadows and highlights, which helps in finding a good balance. Basically, the profile used to give the variance as V(X) = a*E[X]+b; now the variance can be given as V(X) = a*E[X]^c+b.
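To make the link between the variance model and the transform explicit, here is a short derivation sketch. It relies on the usual first-order approximation of the variance of a transformed variable, with f denoting the transform (a symbol introduced here for illustration). The closed forms below only cover two special cases; the exact formula used in darktable may differ, in particular in how it handles b.

```latex
% First-order approximation: the transform f stabilizes the variance to 1
% when its derivative is the inverse of the noise standard deviation.
\operatorname{Var}[f(X)] \approx f'(E[X])^2 \, V(X) = 1
\quad\Longrightarrow\quad
f'(x) = \frac{1}{\sqrt{a x^{c} + b}}

% Special case c = 1 (the previous two-parameter model):
f(x) = \frac{2}{a} \sqrt{a x + b}

% Special case b = 0 and c \neq 2:
f(x) = \frac{2 \, x^{1 - c/2}}{(2 - c) \sqrt{a}}
```

In both cases, differentiating f gives back 1 / sqrt(V(x)), which is the condition on the first line.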

Ideally, this parameter should be determined during profiling, but for the moment it is automatically inferred with a heuristic from the 2 known parameters of the profile. It can also be modified manually in the interface.

Note that this parameter corresponds to the gamma parameter in RawTherapee's noise reduction.

This change to the variance stabilization transform also provided an opportunity to introduce a bias reduction parameter, which corrects the image when shadows turn purple (which happens regularly at high ISOs).

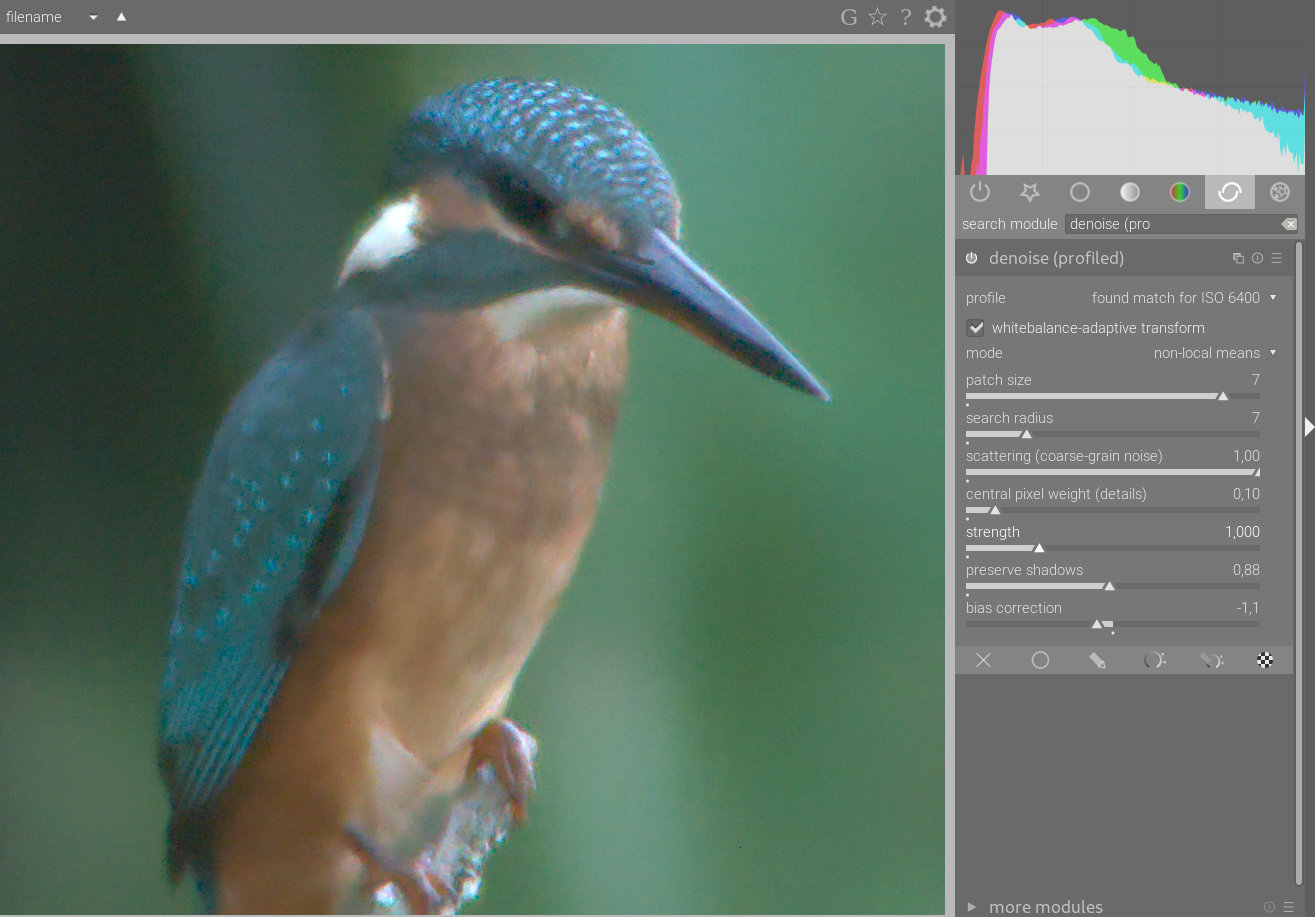

I also added several new parameters to the non-local means algorithm.

I personally found this algorithm usable only at low ISOs.

It only lacked some flexibility!

The first parameter added is the scattering parameter, which effectively reduces coarse-grained noise.
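One way to picture scattering is that the algorithm looks for similar patches farther away without looking at more of them. The sketch below illustrates that idea with a purely hypothetical `search_offsets` helper in one dimension; the linear stride is an assumption made for illustration, and the way darktable actually spreads its patches may be different.

```python
def search_offsets(search_radius, scattering=0.0):
    """Offsets (in pixels) at which candidate patches are examined.

    With scattering = 0 the offsets are contiguous. Larger values spread
    the same number of offsets over a wider area.
    """
    stride = 1.0 + scattering  # assumed linear spreading, for illustration
    return [round(k * stride) for k in range(-search_radius, search_radius + 1)]


compact = search_offsets(3)          # contiguous neighbourhood
spread = search_offsets(3, 1.0)      # same count, twice the reach
print(compact)
print(spread)
```

Coarse-grained noise spans several neighbouring pixels, so patches taken from farther away are less likely to share the same blotch, and averaging them has a better chance of removing it.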

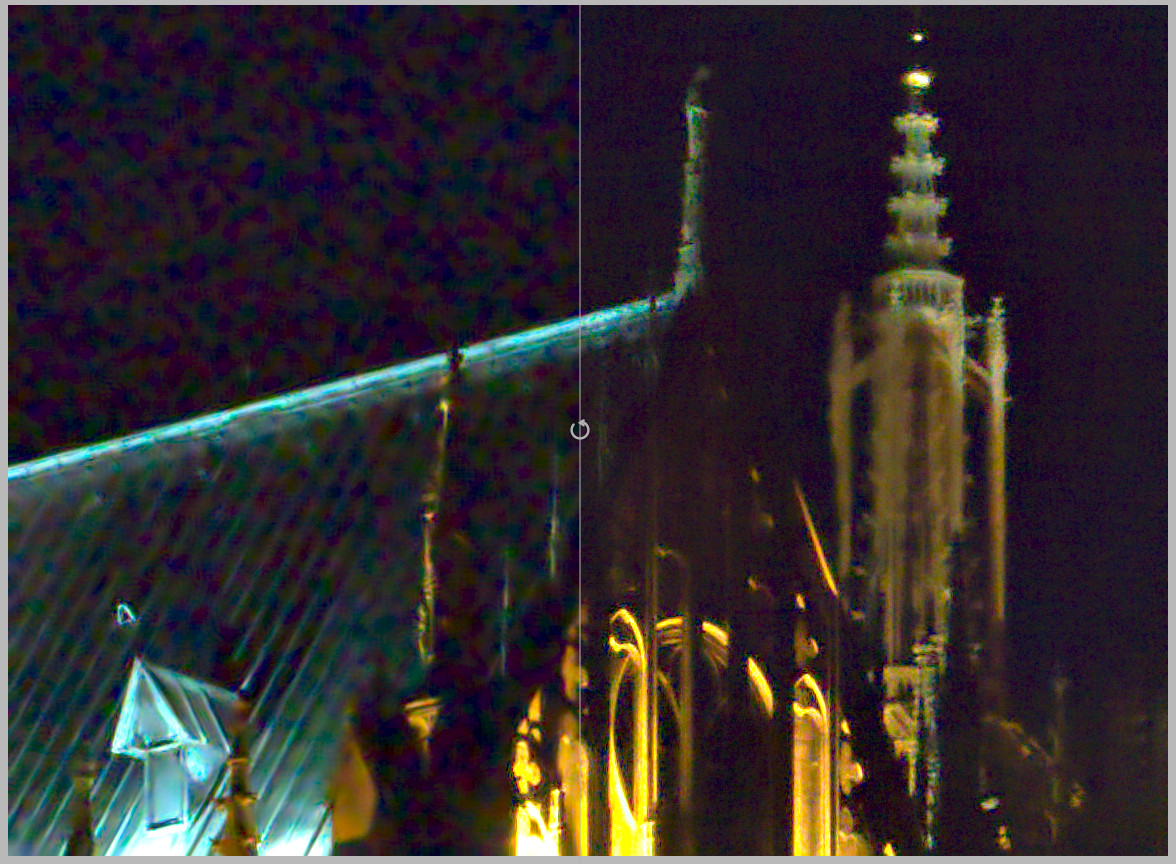

A small example on a picture of jpg54 from darktable.fr, with what we used to get (on the left), and what we can get now (on the right).

We can see that the noise reduction on the left creates very ugly coarse-grained noise.

I then added a parameter called “central pixel weight” which mainly controls the details: it recovers details (and fine-grained noise). When set to high values, the module mainly reduces chrominance noise. Here is an example, deliberately a little overdone, with no detail recovery at the top and strong detail recovery at the bottom (the image has been enlarged to make the effect visible in the post):
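To give an intuition of how such a weight can protect details, here is a deliberately simplified 1D non-local means, assuming that the central pixel weight simply adds an extra penalty on the difference between the two central pixels when patches are compared. The function `nlm_1d` and its parameters are hypothetical; this is not darktable's implementation.

```python
import math


def nlm_1d(signal, patch_radius=1, search_radius=5, h=0.2, central_weight=0.0):
    """Simplified 1D non-local means with an extra weight on the central pixel."""
    n = len(signal)

    def px(i):
        # Clamp indices at the borders.
        return signal[min(max(i, 0), n - 1)]

    out = []
    for i in range(n):
        acc = 0.0
        total = 0.0
        for j in range(max(0, i - search_radius), min(n, i + search_radius + 1)):
            # Mean squared difference between the patches around i and j.
            dist = sum(
                (px(i + k) - px(j + k)) ** 2
                for k in range(-patch_radius, patch_radius + 1)
            ) / (2 * patch_radius + 1)
            # Assumed role of the central pixel weight: penalize candidates
            # whose central pixel differs from the pixel being denoised.
            dist += central_weight * (signal[i] - signal[j]) ** 2
            w = math.exp(-dist / (h * h))
            acc += w * signal[j]
            total += w
        out.append(acc / total)
    return out


# A flat signal with one faint detail in the middle.
signal = [0.0] * 10 + [0.1] + [0.0] * 10
smooth = nlm_1d(signal, central_weight=0.0)
detailed = nlm_1d(signal, central_weight=10.0)
print(smooth[10], detailed[10])
```

With a zero weight, the faint detail is averaged with the flat surroundings and mostly disappears. With a high weight, candidates whose central value differs are down-weighted, so the detail (and, on a real image, fine-grained noise) survives.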

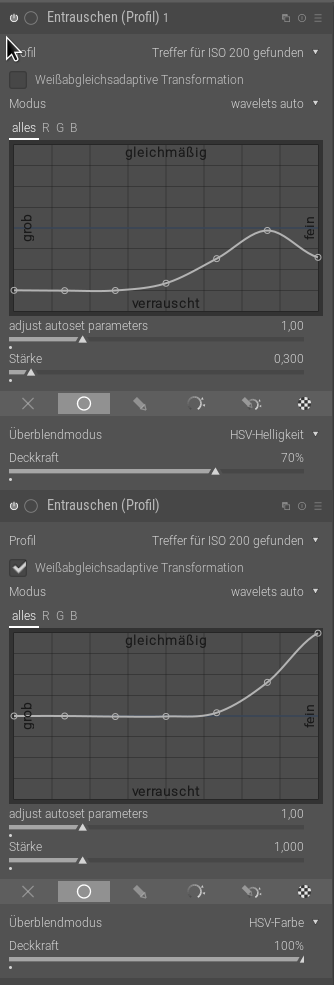

This makes quite a lot of parameters:

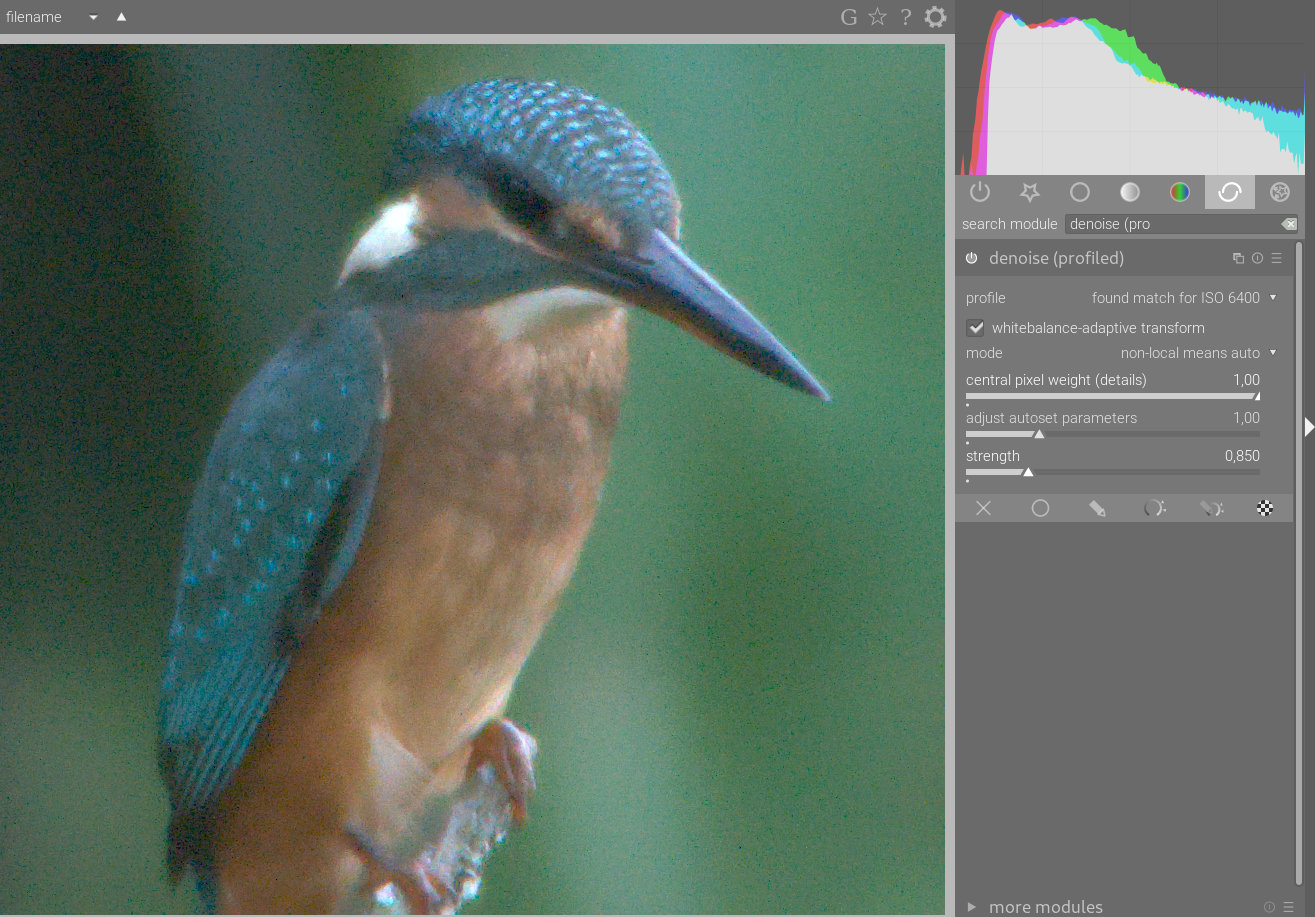

Never mind, I’ve added an “auto” mode, which adjusts the vast majority of parameters on its own, based on the profile, using some heuristics. And since automatic things are not perfect, this mode has a slider to tweak its settings. Increase the value of this slider if ugly noise remains (coarse-grained noise, chroma noise, heavy noise in shadows, etc.); reduce it if the local contrast is smoothed too much.

This setting should be used especially if you have under-exposed your image significantly.

If you change the values in this mode and return to manual mode, the sliders will be updated, as if you had changed them directly.

In addition, the module’s default settings are now adjusted automatically according to the profile, so simply activating the module gives a one-click noise reduction.

That’s it for the description of the changes. I hope you’ll like them!

Demo:

(the raw is one of my pictures and is available here under CC-by-SA: https://drive.google.com/file/d/1QASzyjnyALEMlyV64NxtIJPtwo7caI53/view?usp=sharing )

without denoising:

with default parameters:

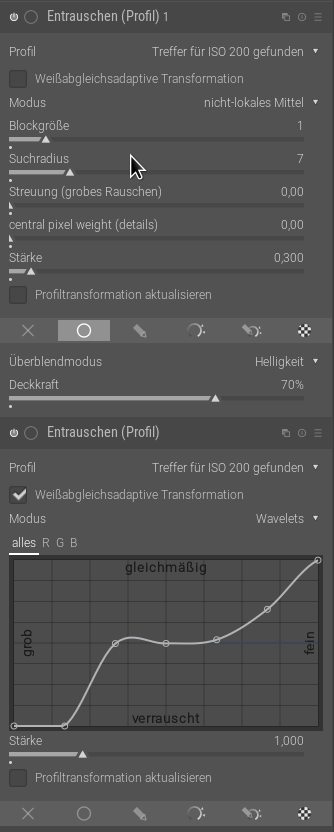

switching to auto mode and finding a better trade-off (one could also have lowered the module’s opacity in this case):

giving a big value to central pixel weight to reduce mostly chroma noise while keeping most of the luma noise: