Hello everyone,

Some of you might have seen a post about my open-source project, RapidRAW, a while back. That post wasn’t from me directly, and I wanted to wait until the project was in a more polished state before introducing it properly myself.

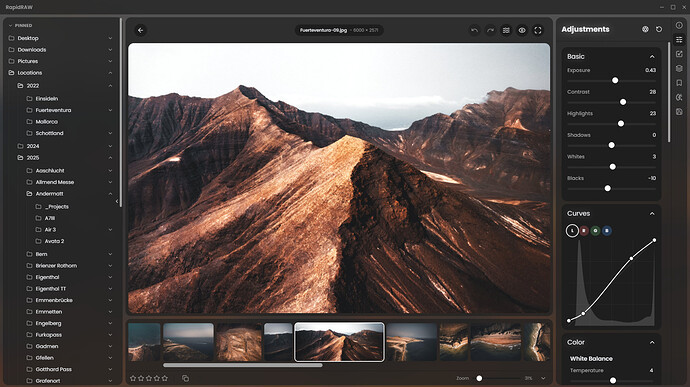

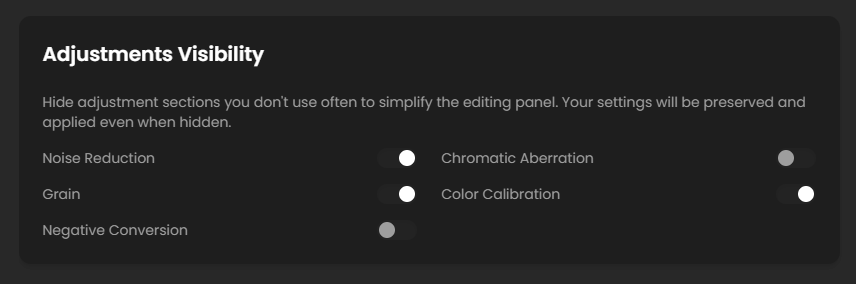

I know this community values powerful, highly configurable tools like darktable and RawTherapee, which offer deep control over the processing pipeline. RapidRAW takes a different approach, prioritizing a user-friendly experience with a clean UI and a gentle learning curve. The goal isn’t to expose every possible parameter, but to provide a fast and intuitive workflow.

Core Architecture

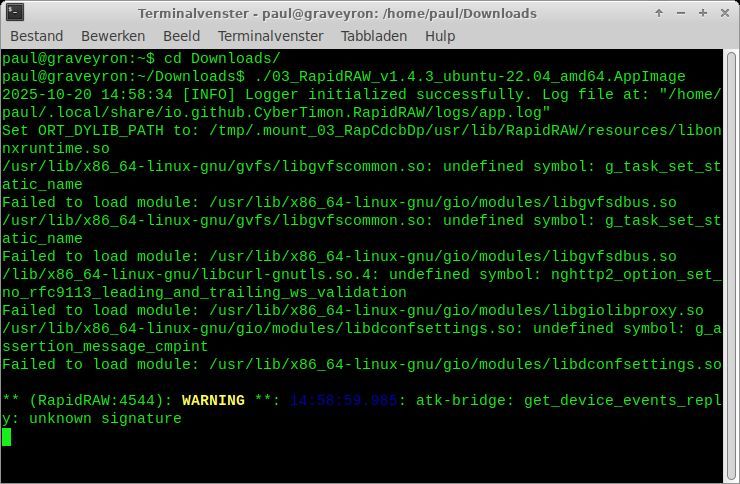

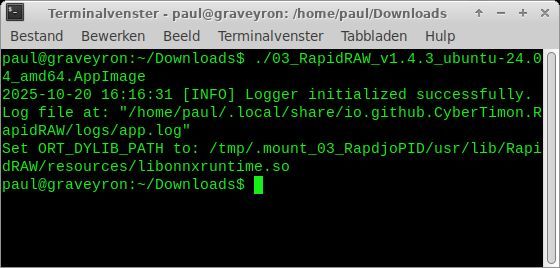

The application is built with a Rust backend and a React/TypeScript frontend, packaged using Tauri to keep it lightweight. The core principle is performance: the entire image processing pipeline is a custom WGSL shader that runs on the GPU. I’ve spent a lot of time recently optimizing this pipeline for better responsiveness.
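To make the "whole pipeline in one shader" idea concrete, here is a CPU-side Rust sketch of what a fused per-pixel function could look like. The parameter names, the mid-grey contrast pivot and the order of operations are hypothetical illustrations, not RapidRAW's actual WGSL code. The point is only that every adjustment is evaluated in a single pass per pixel, which on a GPU would avoid writing intermediate images between stages.

```rust
/// Hypothetical adjustment parameters; these names are illustrative only
/// and are not RapidRAW's real shader uniforms.
#[derive(Clone, Copy)]
struct Adjustments {
    exposure_ev: f32,  // exposure change in stops
    wb_gain: [f32; 3], // per-channel white-balance multipliers
    contrast: f32,     // 1.0 = unchanged
}

/// Applies every adjustment to one linear RGB pixel in a single pass,
/// the way one shader invocation would handle one pixel.
fn process_pixel(rgb: [f32; 3], adj: &Adjustments) -> [f32; 3] {
    const MID_GREY: f32 = 0.18; // hypothetical pivot for the contrast curve
    let gain = 2f32.powf(adj.exposure_ev);
    let mut out = [0.0f32; 3];
    for c in 0..3 {
        // White balance and exposure are plain multiplications in linear light.
        let v = rgb[c] * adj.wb_gain[c] * gain;
        // Power-style contrast around mid grey; leaves mid grey unchanged.
        out[c] = MID_GREY * (v.max(0.0) / MID_GREY).powf(adj.contrast);
    }
    out
}

/// CPU stand-in for one GPU dispatch: the same function mapped over all pixels.
fn process_image(pixels: &[[f32; 3]], adj: &Adjustments) -> Vec<[f32; 3]> {
    pixels.iter().map(|&p| process_pixel(p, adj)).collect()
}
```

With `exposure_ev: 1.0`, neutral white balance and `contrast: 1.0`, a pixel `[0.1, 0.2, 0.3]` comes out at roughly `[0.2, 0.4, 0.6]`, i.e. one stop brighter.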

A common concern with web-based frontends is color management. In RapidRAW, all critical image processing is handled by the Rust backend and executed on the GPU, so calculations are done in a high-precision color space before the result is displayed.
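The post doesn't say which working space RapidRAW uses, so this sketch simply assumes linear-light `f32` values with standard sRGB encoding for display. It shows why the math should happen after decoding: blending in linear light and encoding only at the end gives a different (physically correct) result than blending the display-encoded values.

```rust
/// Decodes an sRGB-encoded value in 0..=1 to linear light (standard sRGB curve).
fn srgb_to_linear(v: f32) -> f32 {
    if v <= 0.04045 {
        v / 12.92
    } else {
        ((v + 0.055) / 1.055).powf(2.4)
    }
}

/// Encodes a linear value back to sRGB for display.
fn linear_to_srgb(v: f32) -> f32 {
    if v <= 0.003_130_8 {
        v * 12.92
    } else {
        1.055 * v.powf(1.0 / 2.4) - 0.055
    }
}

/// Averages two encoded values in linear light, encoding only at the end.
fn blend_linear(a: f32, b: f32) -> f32 {
    linear_to_srgb(0.5 * (srgb_to_linear(a) + srgb_to_linear(b)))
}

/// The naive alternative: average the encoded values directly.
fn blend_encoded(a: f32, b: f32) -> f32 {
    0.5 * (a + b)
}
```

Blending black and white gives about 0.735 in linear light versus 0.5 on the encoded values, which is the kind of error a linear, high-precision working space avoids.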

Recent Developments

The project has evolved quite a bit. Here are some of the more significant recent changes:

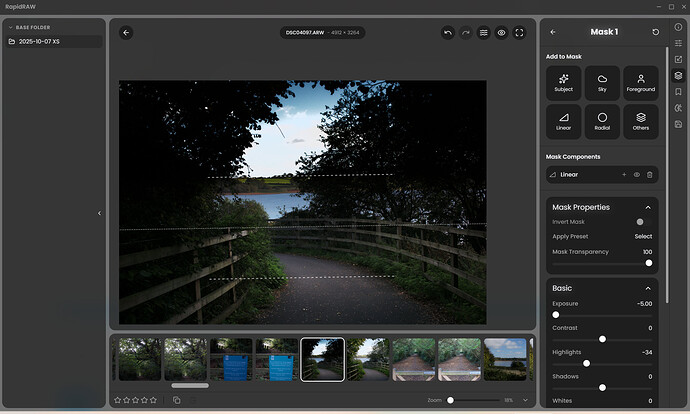

- Advanced Masking: The masking system now includes AI-powered subject, sky, and foreground detection (using local models), which can be combined with traditional linear, radial, and brush masks.

- Local AI Integration: For users with capable hardware, RapidRAW can connect to a local ComfyUI server. This allows you to use your own custom Stable Diffusion models and workflows for generative edits.

- New Workflow Tools: I’ve added a panorama stitcher, a collage creator, automatic image culling (blur/duplicate detection), and support for LUTs.

- Creative Adjustments: A range of new creative adjustments and image processing tools have been implemented to provide more fine-tuned control over the final look.
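How AI masks and traditional masks are combined isn't described above. A common approach, assumed here, is to treat every mask as a soft per-pixel weight in 0..=1 and combine them with union, intersection and subtraction. In this sketch the AI subject mask is just a plain `Vec<f32>`, since the detection model itself is out of scope, and all function names are hypothetical.

```rust
/// A soft mask: one weight in 0..=1 per pixel, row-major.
type Mask = Vec<f32>;

/// Hypothetical radial mask: 1.0 inside `inner`, fading linearly to 0.0 at `outer`.
/// Assumes `outer > inner`.
fn radial_mask(width: usize, height: usize, cx: f32, cy: f32, inner: f32, outer: f32) -> Mask {
    let mut m = Vec::with_capacity(width * height);
    for y in 0..height {
        for x in 0..width {
            let d = ((x as f32 - cx).powi(2) + (y as f32 - cy).powi(2)).sqrt();
            m.push(((outer - d) / (outer - inner)).clamp(0.0, 1.0));
        }
    }
    m
}

#[derive(Clone, Copy)]
enum Combine {
    Add,       // union
    Intersect, // both masks must select the pixel
    Subtract,  // first mask minus whatever the second selects
}

/// Combines two soft masks pixel by pixel.
fn combine(a: &[f32], b: &[f32], mode: Combine) -> Mask {
    a.iter()
        .zip(b)
        .map(|(&x, &y)| match mode {
            Combine::Add => x.max(y),
            Combine::Intersect => x * y,
            Combine::Subtract => x * (1.0 - y),
        })
        .collect()
}

/// Blends an adjusted value over the original according to the mask weight.
fn apply_masked(original: f32, adjusted: f32, weight: f32) -> f32 {
    original + weight * (adjusted - original)
}
```

Intersecting a subject mask with a radial mask, for example, restricts an edit to the part of the subject near the chosen center.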
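The post doesn't say how the automatic culling detects blur. One widely used heuristic, assumed here, is the variance of the Laplacian: sharp frames have strong local second derivatives, blurry ones don't. A minimal sketch on a grayscale buffer follows. The threshold is left to the caller because it depends on resolution and content.

```rust
/// Variance of the 4-neighbour Laplacian over the image interior.
/// Low values suggest few sharp edges, i.e. a possibly blurry frame.
fn laplacian_variance(gray: &[f32], width: usize, height: usize) -> f32 {
    assert_eq!(gray.len(), width * height);
    if width < 3 || height < 3 {
        return 0.0;
    }
    let at = |x: usize, y: usize| gray[y * width + x];
    let mut responses = Vec::with_capacity((width - 2) * (height - 2));
    for y in 1..height - 1 {
        for x in 1..width - 1 {
            let lap = at(x - 1, y) + at(x + 1, y) + at(x, y - 1) + at(x, y + 1) - 4.0 * at(x, y);
            responses.push(lap);
        }
    }
    let n = responses.len() as f32;
    let mean = responses.iter().sum::<f32>() / n;
    responses.iter().map(|r| (r - mean).powi(2)).sum::<f32>() / n
}

/// Flags a frame as blurry when its score falls below a caller-chosen threshold.
fn is_blurry(gray: &[f32], width: usize, height: usize, threshold: f32) -> bool {
    laplacian_variance(gray, width, height) < threshold
}
```

A uniform gray frame scores 0.0, while a pixel-level checkerboard scores very high, which brackets the range real photos fall into.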
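For the LUT support, here is a minimal sketch of applying a 3D LUT with trilinear interpolation. It assumes the data layout used by `.cube` files (red index varies fastest, then green, then blue) and a grid of at least 2 entries per axis. `Lut3d` and its methods are hypothetical names, not RapidRAW's code, and parsing the file is omitted.

```rust
/// A 3D LUT with `size` entries per axis; red varies fastest, then green, then blue.
struct Lut3d {
    size: usize,
    data: Vec<[f32; 3]>,
}

impl Lut3d {
    /// Identity LUT: each node maps to its own coordinates (useful for testing).
    fn identity(size: usize) -> Self {
        let s = (size - 1) as f32;
        let mut data = Vec::with_capacity(size * size * size);
        for b in 0..size {
            for g in 0..size {
                for r in 0..size {
                    data.push([r as f32 / s, g as f32 / s, b as f32 / s]);
                }
            }
        }
        Lut3d { size, data }
    }

    fn at(&self, r: usize, g: usize, b: usize) -> [f32; 3] {
        self.data[r + self.size * (g + self.size * b)]
    }

    /// Trilinear interpolation at an RGB value in 0..=1 (inputs are clamped).
    fn apply(&self, rgb: [f32; 3]) -> [f32; 3] {
        let max = (self.size - 1) as f32;
        let (mut i0, mut i1, mut f) = ([0usize; 3], [0usize; 3], [0.0f32; 3]);
        for c in 0..3 {
            let p = rgb[c].clamp(0.0, 1.0) * max;
            let lo = p.floor().min(max - 1.0); // keep lo + 1 inside the grid
            i0[c] = lo as usize;
            i1[c] = i0[c] + 1;
            f[c] = p - lo;
        }
        let lerp = |a: [f32; 3], b: [f32; 3], t: f32| {
            [a[0] + (b[0] - a[0]) * t, a[1] + (b[1] - a[1]) * t, a[2] + (b[2] - a[2]) * t]
        };
        // Interpolate along red, then green, then blue.
        let c00 = lerp(self.at(i0[0], i0[1], i0[2]), self.at(i1[0], i0[1], i0[2]), f[0]);
        let c10 = lerp(self.at(i0[0], i1[1], i0[2]), self.at(i1[0], i1[1], i0[2]), f[0]);
        let c01 = lerp(self.at(i0[0], i0[1], i1[2]), self.at(i1[0], i0[1], i1[2]), f[0]);
        let c11 = lerp(self.at(i0[0], i1[1], i1[2]), self.at(i1[0], i1[1], i1[2]), f[0]);
        lerp(lerp(c00, c10, f[1]), lerp(c01, c11, f[1]), f[2])
    }
}
```

Trilinear interpolation is the simplest choice. Tetrahedral interpolation is a common alternative when hue accuracy across the grid matters.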

Current Challenges & Roadmap

I’m actively working on improving the image processing core. One area I’m currently a bit stuck on is finding high-quality X-Trans demosaicing algorithms. The project uses the rawler crate for its base decoding, and I’m researching better algorithms to contribute or integrate, to improve detail and reduce artifacts for Fuji shooters.
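For context on what "better" is measured against, here is the crude baseline that dedicated X-Trans algorithms (for example the Markesteijn methods offered in darktable and RawTherapee) improve on. The sketch assumes one valid phase of the 6x6 X-Trans layout (20 green, 8 red, 8 blue per tile). A real decoder should take the actual CFA layout from the raw file's metadata, since the phase can differ between cameras and crops. The function names are hypothetical.

```rust
/// Colour channel indices.
const R: u8 = 0;
const G: u8 = 1;
const B: u8 = 2;

/// One phase of the 6x6 X-Trans pattern (assumed; read it from metadata in practice).
const XTRANS: [[u8; 6]; 6] = [
    [G, G, R, G, G, B],
    [G, G, B, G, G, R],
    [B, R, G, R, B, G],
    [G, G, B, G, G, R],
    [G, G, R, G, G, B],
    [R, B, G, B, R, G],
];

fn cfa_color(x: usize, y: usize) -> u8 {
    XTRANS[y % 6][x % 6]
}

/// Naive baseline: for each pixel and channel, average the same-coloured
/// samples in its 3x3 neighbourhood. With this pattern every full 3x3 window
/// contains R, G and B; at the image border a truncated window may miss a
/// colour, and that channel is simply left at 0.0 in this sketch.
fn demosaic_box(raw: &[f32], width: usize, height: usize) -> Vec<[f32; 3]> {
    let mut out = vec![[0.0f32; 3]; width * height];
    for y in 0..height {
        for x in 0..width {
            let mut sum = [0.0f32; 3];
            let mut count = [0u32; 3];
            for ny in y.saturating_sub(1)..=(y + 1).min(height - 1) {
                for nx in x.saturating_sub(1)..=(x + 1).min(width - 1) {
                    let c = cfa_color(nx, ny) as usize;
                    sum[c] += raw[ny * width + nx];
                    count[c] += 1;
                }
            }
            for c in 0..3 {
                if count[c] > 0 {
                    out[y * width + x][c] = sum[c] / count[c] as f32;
                }
            }
        }
    }
    out
}
```

Box averaging like this blurs detail and produces color fringes at edges. Those are exactly the artifacts that edge-directed X-Trans algorithms are designed to reduce.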

I also anticipate that lens correction will be a common request. Integrating Lensfun is on my roadmap for the near future.
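As a rough idea of what Lensfun-based distortion correction involves, here is a sketch of Lensfun's "ptlens" model, `rd = ru * (a*ru^3 + b*ru^2 + c*ru + 1 - a - b - c)`, which maps an undistorted radius to the distorted radius where the sample actually lies. Correction then walks over output pixels and looks up their source positions. The normalization to half the shorter image side is an assumption of this sketch, the function names are hypothetical, and a real integration would go through Lensfun's own library (its `lfModifier` class) rather than reimplementing the model. Resampling (e.g. bilinear) is not shown.

```rust
/// Lensfun "ptlens" model: undistorted normalised radius -> distorted radius.
fn ptlens_rd(ru: f64, a: f64, b: f64, c: f64) -> f64 {
    ru * (a * ru.powi(3) + b * ru.powi(2) + c * ru + 1.0 - a - b - c)
}

/// For an output (corrected) pixel, returns where to sample the distorted source.
/// Radii are normalised to half the shorter image side (assumption of this sketch);
/// `k` holds the (a, b, c) coefficients from a lens profile.
fn source_position(x: f64, y: f64, width: f64, height: f64, k: (f64, f64, f64)) -> (f64, f64) {
    let (cx, cy) = (width / 2.0, height / 2.0);
    let norm = width.min(height) / 2.0;
    let (dx, dy) = ((x - cx) / norm, (y - cy) / norm);
    let ru = (dx * dx + dy * dy).sqrt();
    if ru == 0.0 {
        return (x, y); // the optical center never moves
    }
    let scale = ptlens_rd(ru, k.0, k.1, k.2) / ru;
    (cx + dx * scale * norm, cy + dy * scale * norm)
}
```

By construction the model leaves radius 1.0 fixed, so coefficients only reshape distortion inside and outside that circle. With all coefficients at zero, every pixel maps to itself.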

A Question for the Forum

Before I post further, I wanted to check in with the community.

- What’s the general feeling about project update threads like this one? I’m happy to share technical progress if it’s welcome.

- If there’s enough interest, would it be appropriate to request a “RapidRAW” software tag to keep future discussions organized?

The project is fully open source on GitHub. All feedback, bug reports, and technical suggestions are highly appreciated.

Link to GitHub: CyberTimon/RapidRAW (“A beautiful, non-destructive, and GPU-accelerated RAW image editor built with performance in mind.”)

Thanks for your time.

- Timon