RawTherapee was born about 20 years ago. Its creator, Gábor Horváth, started from scratch and innovated. What he achieved reflects his knowledge and skills, the technology of the time, and the methods and tools available in the early days of digital technology. The same analysis could be made for other systems (word processors, the Internet, Darktable, etc.). The process architecture (the four pipelines) and the interface are essentially the same as they were 20 years ago. The tools are presented in what was then assumed to be the usual processing order: the ‘Exposure Tab’ appears first because that is where the powerful tools of the time were originally located; the ‘Raw Tab’ and ‘Metadata Tab’ come last because the user was expected to interact with them rarely, if at all.

Innovators play a key role in our societies: in our software, our organizations, our communities, our environment, Artificial Intelligence, and more. Flexibility, adaptability, critical thinking, curiosity, empathy, attention to human factors, and the ability to model systems and regulate their usage are essential for survival. These innovators, these creators, are often misunderstood and undervalued; they are sometimes perceived as discourteous and somewhat outside the system. But the essential thing is to understand the system and to evolve its goals, issues (what is at stake), values, identities, and cultures.

After this brief introduction (which is the crux of the matter), let’s return to software like RawTherapee, ART, Darktable, etc. I won’t make a direct comparison, as that would be pointless, but I will try to highlight some key points. It’s not about being for or against, or saying that one is better or worse, but about understanding:

- Compared to RawTherapee, ART has been slimmed down and incorporates user-focused innovations (CTL, etc.). I admire its creator. Broadly speaking, ART is still similar to RawTherapee, with some advantages and disadvantages in terms of features.

- Darktable, thanks to the initiative of a few innovators whom I respect and admire, has introduced and adapted significant innovations. This leads to a new way of seeing things and, therefore, of doing them. In particular, the concepts of ‘Scene Referred’ and ‘Display Referred’ and innovative tools like ‘Filmic’ or ‘Sigmoid’ (and soon ‘AgX’) have been added and explained, backed by a strong communication strategy. The general idea is to perform the majority of the processing in linear mode (what exactly is that?), including demosaicing, the working profile, etc., and then, at the end of the process, to adapt the data to what the user perceives on the available media (screens, printers, etc.) using tone-mapping tools such as ‘Filmic’ or ‘Sigmoid’, combined with ‘Color Balance’, etc.

- RawTherapee is a system equipped with innovative tools that are found (virtually) nowhere else, apart from a few recent adaptations. I’ve heard comments and criticisms on the web and in the specialized press (“people don’t understand anything”, “what’s the point?”, “it’s engineering stuff”…). A good part of this stems from a lack of understanding, an impression of complexity, or simply a lack of knowledge, which I think is mainly due to a lack of communication and exchange (support materials, forums, videos, etc.) and to the habits of users and designers. What about CIECAM, Wavelets (yes, it’s simpler in GIMP, but that’s comparing apples and oranges), White Balance Auto, Selective Editing (yes, it’s different from masks and, on closer inspection, more intuitive; I stand by that), Capture Sharpening, Dual Demosaic, Color Propagation, Abstract Profile, etc.? Several highly competent people have contributed to what RT is today.
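To make the ‘linear first, tone mapping last’ idea mentioned above for Darktable more concrete, here is a minimal sketch in Python. It is not the code of Darktable, ART or RawTherapee. The function names (`tone_map`, `develop`), the generic sigmoid-shaped curve, the mid-grey value of 0.18 and the contrast of 1.5 are all assumptions chosen for illustration only. The only standard element is the sRGB encoding formula.

```python
def srgb_encode(v):
    """Standard sRGB transfer function for a display-linear value, clamped to [0, 1]."""
    v = min(max(v, 0.0), 1.0)
    if v <= 0.0031308:
        return 12.92 * v
    return 1.055 * v ** (1 / 2.4) - 0.055


def tone_map(x, mid_grey=0.18, contrast=1.5):
    """Hypothetical sigmoid-shaped curve (illustration only).

    Maps unbounded scene-linear values [0, inf) into display-linear [0, 1)
    and is scaled so that mid_grey maps to itself.
    """
    x = max(x, 0.0)
    xc = x ** contrast
    # Pivot chosen so that tone_map(mid_grey) == mid_grey.
    kc = (mid_grey ** contrast) * (1.0 - mid_grey) / mid_grey
    return xc / (xc + kc)


def develop(scene_linear, exposure_ev=0.0):
    """Scene-referred stage first, display-referred stage last."""
    # 1. Linear stage: exposure is a simple multiplication.
    exposed = scene_linear * 2.0 ** exposure_ev
    # 2. End of the pipeline: compress the scene range for the display.
    display_linear = tone_map(exposed)
    # 3. Encode for an sRGB screen.
    return srgb_encode(display_linear)
```

With these assumptions, `develop(0.18)` gives about 0.46 (mid-grey on an sRGB screen), and a very bright scene value such as `develop(100.0)` approaches 1.0 without ever reaching it: the highlights are compressed at the end of the process rather than clipped at the start.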

‘Game changer’: in French, the term ‘bouleverseur’ suits me well as a translation. It aims to change the usual way of thinking and acting in image processing. Before changing the way we do things, we must first agree on the way we see things. This is partly the purpose of these presentations and discussions.

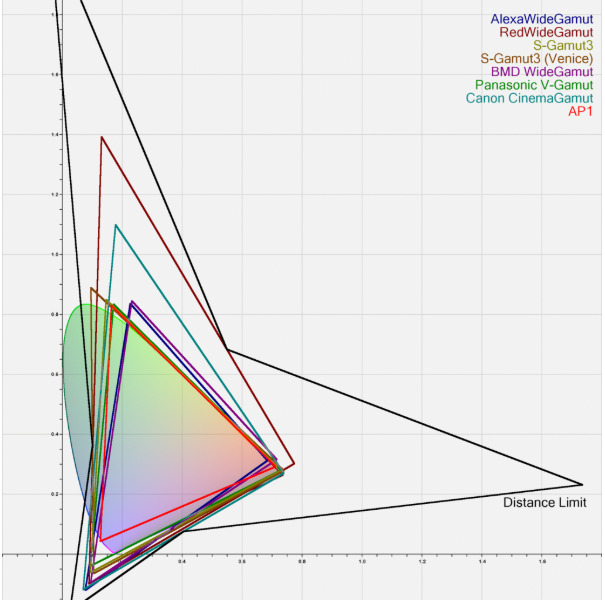

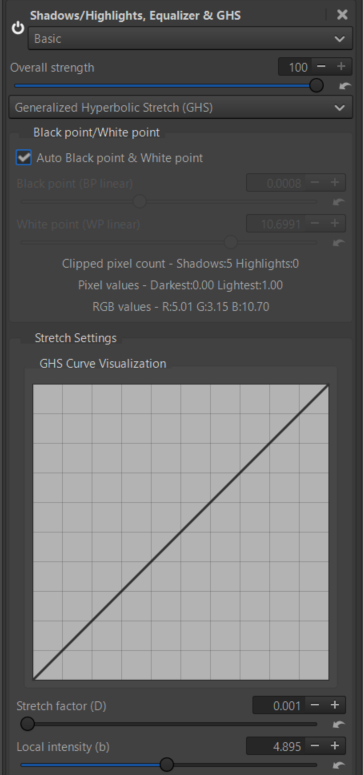

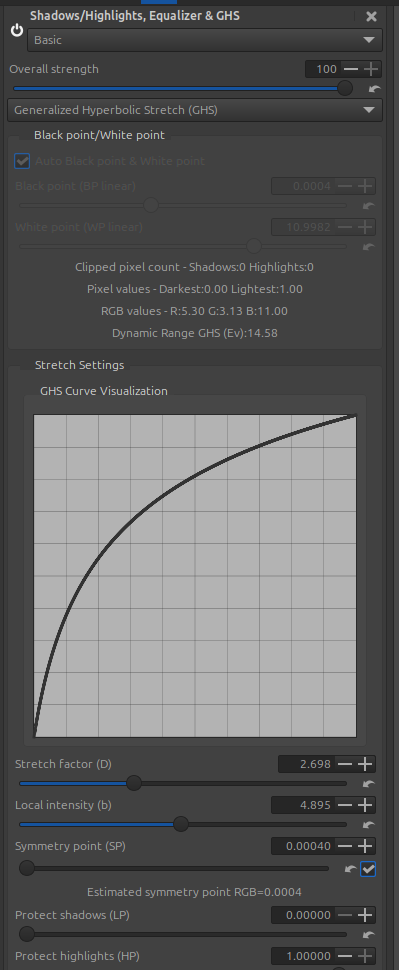

I will open separate threads (if my health permits), step by step, which will cover the new features and how they fit into the current RawTherapee system (GHS, Capture Sharpening, Gamut compression, Selective Editing, Abstract profile, etc.):

- Perhaps the RawTherapee GUI will need to be updated; this is the purpose of the Pull Request ‘rtreview’. Your suggestions are welcome. Not everything is feasible.

- The order of actions in the process would probably need to change, but this operation is extremely complex. It concerns the order of ‘events’ that places one action before another, which has nothing to do with the GUI: for example, GHS should be further upstream, and other processes should be located later. To answer in advance the question about the differences between Preview and Output, and the malfunctions of Preview: I don’t know how to do it.

I’m going to focus primarily on the ‘why?’ rather than the ‘how?’ Why GHS, rather than something else? Why integrate noise reduction into Capture Sharpening? What’s the advantage of linear White Points and Black Points rather than those expressed in Ev? Etc.
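As a small aid for the question of linear values versus Ev, here is a sketch of the arithmetic that relates the two. Ev is a base-2 logarithmic scale, so a value in Ev only has a meaning relative to a reference level. Here I assume a mid-grey reference of 0.18; the helper names `ev_to_linear` and `linear_to_ev` are hypothetical and do not describe how RawTherapee stores its settings. It only illustrates one property that is easy to check: a linear black point of exactly 0 has no finite equivalent in Ev.

```python
import math

MID_GREY = 0.18  # assumed reference level for 0 Ev (illustration only)


def ev_to_linear(ev, ref=MID_GREY):
    """Convert a level expressed in Ev (stops relative to ref) to a linear value."""
    return ref * 2.0 ** ev


def linear_to_ev(value, ref=MID_GREY):
    """Convert a linear value to Ev relative to ref.

    A linear value of 0 (or below) has no finite Ev, so -inf is returned.
    """
    if value <= 0.0:
        return float("-inf")
    return math.log2(value / ref)


# A white point 2.5 Ev above mid-grey and a black point 8 Ev below it.
white_linear = ev_to_linear(2.5)
black_linear = ev_to_linear(-8.0)
```

Each step of 1 Ev doubles or halves the linear value, so equal steps in Ev are very unequal steps in linear data, especially near black.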

The settings provided with the examples (pp3) are not meant to be the ideal, perfect processing, but rather a path to understanding how and why to do it. They are indicative, simplified as much as possible for educational purposes, and can (and should) be expanded upon.

The limitations of Pixls.us only allow me to publish two posts in a row if nobody else has commented. Therefore, each post is likely to be rather long.

The lack of up-to-date and accessible documentation will be a significant obstacle. I’ll have to copy paragraphs to Pixls.us, which will make it somewhat cumbersome. Conversely, these exchanges will allow me to put the examples online.

If a user of other software (ART, Darktable, etc.) wishes to explain how this type of processing is done in their environment, they may do so, but only in a very short post of one or two lines (no more), linking, for example, to Playraw. Otherwise, the original discussion will be diverted, the moderator will be notified, and the post will be deleted.

I hope the translator won’t distort my words.

Thank you.

Jacques